
Detailed explanation of Milvus 2.0 quality assurance system

2022-04-23 16:57:00 【Zilliz Planet】

Editor's note: This article describes the workflow and execution details of the Milvus 2.0 quality assurance system, as well as the optimizations used to make testing more efficient.

General introduction to quality assurance

- Test focus areas
- How the development and QA teams collaborate
- Issue management process
- Release criteria

Introduction to test modules

- Overview
- Unit testing
- Functional testing
- Deployment testing
- Reliability testing
- Stability and performance testing

Efficiency improvement methods and tools

- GitHub Actions
- Performance testing tools

General introduction to quality assurance

The architecture diagram matters for quality assurance too: you can only design a reasonable and efficient test plan once you fully understand the system under test. Milvus 2.0 has a cloud-native, distributed architecture. Its main entry point is the SDK, and several layers of internal logic sit behind it. For users, the SDK is therefore the part that matters most. When we test Milvus, we start with functional tests against the SDK and use the SDK to uncover possible problems inside Milvus. Milvus is also a database, so the usual range of database system tests applies as well.

A cloud-native, distributed architecture brings both benefits and challenges for testing. The benefit is that deploying and running in a Kubernetes (k8s) cluster keeps the development and test environments as consistent as possible, which a traditional local deployment cannot do. The challenge is that a distributed system is more complex and introduces more uncertainty, so testing takes more work and is harder. For example, splitting the system into microservices increases the number of services and the number of nodes on each machine. The more intermediate stages there are, the more places something can go wrong, and testing has to consider each of these cases.

Test focus areas

The figure below shows the test areas and priorities for Milvus, based on the nature of the product and on user needs.

First, functionality (Function): whether the interfaces behave as designed.

Second, deployment (Deployment): whether restarts and upgrades succeed in both standalone and cluster mode.

Third, performance (Performance): Milvus is a real-time analytical database that unifies streaming and batch processing, so speed matters a great deal. Insertion, indexing, and query performance get particular attention.

Fourth, stability (Stability): how long Milvus runs normally under a normal load. The target is 5 to 10 days.

Fifth, reliability (Reliability): how Milvus behaves when errors occur, and whether it works again once the fault is removed.

Sixth, configuration (Configuration): every exposed configuration item must work, and changes to it must take effect.

Finally, compatibility (Compatibility), mainly with respect to hardware and software configurations.

The figure shows three levels. Functionality and deployment are at the top level. Performance, stability, and reliability are at the second level, and configuration and compatibility are at the third. These levels of importance are relative, however.

How the development and QA teams collaborate

Users often assume that quality assurance is only the QA team's job. In software development, though, quality can only be guaranteed when several teams work together.

At the start, the development team writes the design documents, and the QA team writes the test plan based on them. Testers and developers both take part in these documents to reduce misunderstandings. The goals for the release are set beforehand, including performance, stability, and how far the bug count must converge. During development, developers focus on implementing features in code. Once a feature is complete, the QA team tests and verifies it. These two stages iterate several times, and the QA and development teams keep each other in sync every day. An open-source product also receives many issues from the community in addition to its own feature development and verification, and these are addressed in order of priority.

In the final stage, if the product meets the release criteria, the team picks a date and publishes a new image. Before the release, the team prepares a release tag and release notes that summarize what the version delivers and which issues have been fixed. The QA team then publishes a test report for the version.

Issue management process

The QA team pays close attention to issues during product development. Many issues are filed by external users as well as by QA team members, so every issue has to follow a standard format. Each issue has a template that asks the author for information such as the current version, the machine configuration, the expected behavior, the actual result, and the steps to reproduce the problem. The QA and development teams then follow up.

Once an issue is created, it is first assigned to the QA team lead, who manages its status transitions. If the issue is valid and contains enough information, it moves through further states that record whether it has been resolved, whether it can be reproduced, whether it duplicates an earlier issue, how often it occurs, and its priority. If a defect is confirmed, the development team submits a PR linked to the issue that contains the fix. After the fix is verified, the issue is closed. If the problem reappears later, the issue can be reopened. Labels and bots are also used to classify issues and move them between states, which makes issue management more efficient.
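The status flow above can be modeled as a small state machine. The following is a minimal Python sketch. The state names and allowed transitions are assumptions made for illustration. They are not the actual labels or bot rules used in the Milvus repository.

```python
# Hypothetical model of the issue status flow; state names are illustrative only.
from typing import List

# Allowed transitions: current state -> set of possible next states.
TRANSITIONS = {
    "new": {"triaged", "needs-more-info", "duplicate"},  # QA lead reviews the report
    "needs-more-info": {"triaged", "closed"},            # author adds details (or not)
    "triaged": {"in-progress", "closed"},                # confirmed defect, or not reproducible
    "duplicate": {"closed"},
    "in-progress": {"fix-submitted"},                    # developer opens a linked PR
    "fix-submitted": {"verified", "in-progress"},        # QA verifies the fix
    "verified": {"closed"},
    "closed": {"reopened"},                              # problem shows up again
    "reopened": {"triaged"},
}


def advance(state: str, next_state: str) -> str:
    """Return next_state if the transition is allowed, otherwise raise ValueError."""
    if next_state not in TRANSITIONS.get(state, set()):
        raise ValueError(f"illegal transition: {state} -> {next_state}")
    return next_state


def replay(path: List[str]) -> str:
    """Walk an issue through a sequence of states, validating every step."""
    state = path[0]
    for nxt in path[1:]:
        state = advance(state, nxt)
    return state
```

A bot can use a table like this to reject label changes that skip a step, such as closing a new issue before anyone has triaged it.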

Release criteria

A version can be released when it meets the expected requirements. The figure above shows the general criteria for RC6, RC7, RC8, and GA. The quality requirements for Milvus became stricter with each version:

- Data volume grew from the order of 50M to the order of 1B

- Stability runs moved from a single task to mixed tasks, and from hours to days

- Code coverage was raised step by step

- ...

- New test items were also added as versions evolved. For example, RC7 added a compatibility requirement so that upgrades stay compatible, and GA introduced more chaos engineering tests

Introduction to test modules

This second part covers the details of each test module.

Overview

There is a running joke in the industry that writing code means writing bugs. As the figure below shows, 85% of bugs are introduced during the coding stage.

From a testing perspective, bugs can be found through unit tests, functional tests, and system tests, in that order, between writing the code and releasing it. The later a bug is found, the more it costs to fix, so the aim is to find and fix bugs as early as possible. Each stage of testing has its own focus, however, and no single kind of test can find every bug.

Between writing code and merging it into the main branch, code quality is protected by unit tests, code coverage, and code review. All of these are part of CI. A PR can only be merged after it passes static code checks, unit tests, and the code coverage threshold, and after a reviewer has reviewed the code.

Integration tests must also pass before code is merged. To keep the overall CI run short, this integration run executes only the high-priority cases tagged L0 and L1. Once all checks pass, an image built from the PR is published to the milvusdb/milvus-dev repository. Scheduled jobs then test the latest image. These jobs include a full run of the existing functional tests, functional tests for new features, deployment tests, performance tests, stability tests, chaos tests, and so on.
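The practice of running only the L0 and L1 cases at merge time can be sketched as tag-based selection. In plain pytest, the usual mechanism would be custom markers selected with the -m option. The self-contained stand-in below avoids pytest so the selection logic is easy to see. The tags decorator, the select function, and the test names are all hypothetical.

```python
# Hypothetical stand-in for priority tags on test cases (not the Milvus suite itself).
from typing import Callable, List, Set


def tags(*labels: str) -> Callable:
    """Decorator that attaches priority labels (e.g. "L0", "L1") to a test function."""
    def wrap(func: Callable) -> Callable:
        func.case_labels = set(labels)
        return func
    return wrap


def select(cases: List[Callable], wanted: Set[str]) -> List[str]:
    """Return the names of cases carrying at least one of the wanted labels."""
    return [c.__name__ for c in cases if getattr(c, "case_labels", set()) & wanted]


@tags("L0")
def test_create_collection(): ...


@tags("L1")
def test_search_with_expr(): ...


@tags("L2")
def test_large_topk(): ...


ALL_CASES = [test_create_collection, test_search_with_expr, test_large_topk]

# Merge-time integration run: only the high-priority L0 and L1 cases.
ci_cases = select(ALL_CASES, {"L0", "L1"})
```

The scheduled full runs would select every label instead, so lower-priority cases still run, just not on every merge.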

Unit testing

Unit tests find software bugs as early as possible and also give code refactoring a baseline to verify against. The Milvus PR acceptance criteria set a unit test coverage target of 80%.

https://app.codecov.io/gh/milvus-io/milvus/

Functional testing

Functional tests for Milvus use the pymilvus SDK as their entry point.

Functional testing mainly checks whether the interfaces work as expected:

- When given valid parameters or used normally, does the SDK return the expected results?

- When parameters or operations are invalid, does the SDK handle these errors and return reasonable error messages?

The figure below shows the current functional testing framework. It is built on pytest, the mainstream Python testing framework, and adds a wrapper layer over pymilvus that enables automated interface testing.

The framework does not call the native pymilvus interfaces directly. Some common methods need to be extracted during testing so that shared functionality can be reused. The framework also contains a check module that makes it easier to compare expected and actual values.

There are currently more than 2,700 functional test cases under the tests/python_client/testcases directory. They cover essentially all pymilvus interfaces and include both positive and negative cases. Functional tests are the baseline guarantee of Milvus functionality. They are automated and run in continuous integration, so every submitted PR is held to a strict quality bar.
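The following minimal sketch shows what a check module does for positive and negative cases. It runs a call and compares the outcome with an expectation. Expected, call_and_check, and the toy create_collection function are hypothetical, and so is its naming rule. The toy function only stands in for a pymilvus call.

```python
# Hypothetical sketch of a result checker; not the actual Milvus test-framework classes.
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class Expected:
    ok: bool                             # should the call succeed?
    value: Any = None                    # expected return value (positive cases)
    err_substring: Optional[str] = None  # expected error text (negative cases)


def call_and_check(func: Callable, *args, expected: Expected, **kwargs) -> bool:
    """Run func and return True when its outcome matches the expectation."""
    try:
        result = func(*args, **kwargs)
    except Exception as exc:             # negative path: the call raised
        if expected.ok:
            return False
        return expected.err_substring is None or expected.err_substring in str(exc)
    if not expected.ok:                  # call succeeded but should have failed
        return False
    return expected.value is None or result == expected.value


def create_collection(name: str) -> str:
    """Toy stand-in for an SDK call: rejects empty names or names not starting with a letter."""
    if not name or not name[0].isalpha():
        raise ValueError(f"invalid collection name: {name!r}")
    return name
```

A positive case passes Expected(ok=True, value=...). A negative case passes Expected(ok=False, err_substring=...). This way both kinds of case share one code path for verification.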

Deployment testing

Deployment tests cover both Milvus deployment modes, standalone and cluster, deployed with either Docker or Helm. After deployment, the system is restarted and upgraded.

The restart test mainly verifies data persistence: data written before the restart must still be usable afterwards. The upgrade test mainly verifies data compatibility, so that incompatible data formats are not introduced unnoticed.

Restart tests and upgrade tests follow the same process:

For a restart test, both deployments use the same image. For an upgrade test, the first deployment uses the old image and the second uses the new one. In both cases, the second deployment keeps the test data from the first (the volumes folder or the PVC). The Run first test step creates multiple collections and runs a different sequence of operations on each one, leaving each collection in a different state, for example:

- create collection

- create collection --> insert data

- create collection --> insert data --> load

- create collection --> insert data --> flush

- create collection --> insert data --> flush --> load

- create collection --> insert data --> flush --> create index

- create collection --> insert data --> flush --> create index --> load

- ...

The Run second test step verifies two things:

- The previously created collections still support all their functions

- A new collection can be created, and it also supports all functions
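The two-phase flow can be sketched as follows. The sketch assumes an in-memory FakeMilvus class in place of a real deployment. The two instances share the same storage dict, which plays the role of the retained volumes folder or PVC. The operation names mirror the chains listed above. The class, method, and collection names are hypothetical.

```python
# Hypothetical sketch of the restart/upgrade check; FakeMilvus is not pymilvus.

STATE_CHAINS = [
    ["create"],
    ["create", "insert"],
    ["create", "insert", "load"],
    ["create", "insert", "flush"],
    ["create", "insert", "flush", "load"],
    ["create", "insert", "flush", "index"],
    ["create", "insert", "flush", "index", "load"],
]


class FakeMilvus:
    """Keeps per-collection state in a dict that survives a 'restart'."""

    def __init__(self, storage=None):
        # storage stands in for the retained volumes folder or PVC
        self.storage = storage if storage is not None else {}

    def apply(self, name, op):
        if op == "create":
            self.storage[name] = {"rows": 0, "flushed": False, "indexed": False}
        elif op == "insert":
            self.storage[name]["rows"] += 100
        elif op == "flush":
            self.storage[name]["flushed"] = True
        elif op == "index":
            self.storage[name]["indexed"] = True
        elif op == "load":
            pass  # loading affects memory only, not persisted data

    def usable(self, name):
        return name in self.storage


def run_first_test(client):
    """Phase 1: one collection per chain, each left in a different state."""
    names = []
    for i, chain in enumerate(STATE_CHAINS):
        name = f"deploy_test_{i}"
        for op in chain:
            client.apply(name, op)
        names.append(name)
    return names


def run_second_test(client, names):
    """Phase 2: old collections are still usable and a new one can be created."""
    old_ok = all(client.usable(n) for n in names)
    client.apply("deploy_test_new", "create")
    client.apply("deploy_test_new", "insert")
    return old_ok and client.usable("deploy_test_new")
```

In a real run, the first and second FakeMilvus instances would be replaced by connections to the first and second deployments. For an upgrade test, those deployments would use the old and new images respectively.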

Reliability testing

Most companies currently use chaos engineering to test the reliability of cloud-native, distributed products. Chaos engineering aims to nip failures in the bud by identifying faults before they cause outages. It deliberately injects faults to see how the system behaves under various kinds of stress, so that problems can be found and fixed before they have serious consequences.

We chose Chaos Mesh as the fault injection tool for chaos testing. Chaos Mesh was incubated at PingCAP while the company was testing the reliability of TiDB, and it is well suited to reliability testing of cloud-native distributed databases.

The following fault types have been implemented:

- pod kill: applied to all components to simulate a node going down

- pod failure: focuses on worker nodes that have multiple replicas, and checks that the whole system keeps working when one pod stops working

- memory stress: puts memory and CPU under pressure, and is injected mainly into worker nodes

- network partition: isolates communication between pods. Milvus separates storage from computing and has a multi-layer architecture that separates worker nodes from coordinator nodes, so its components communicate with each other a great deal. Network partition tests verify how these components depend on each other

A framework was built to run chaos tests more automatically.

The process:

- Read the deployment configuration and initialize a Milvus cluster

- Once the cluster is ready, run an e2e test to verify that Milvus features work

- Run hello_milvus.py, mainly to verify data persistence. Before fault injection, it creates a collection named hello_milvus and runs insert, flush, create index, load, search, and query on it. Note that the collection is neither released nor dropped

- Create a monitoring object that starts 6 threads, which continuously run create, insert, flush, index, search, and query operations

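```python
# One checker per operation; each repeats its operation in its own thread.
# Op and the *Checker classes are defined elsewhere in the chaos test framework (not shown).
checkers = {
    Op.create: CreateChecker(),
    Op.insert: InsertFlushChecker(),
    Op.flush: InsertFlushChecker(flush=True),
    Op.index: IndexChecker(),
    Op.search: SearchChecker(),
    Op.query: QueryChecker(),
}
```

- Make the first assertion before fault injection: all operations are expected to succeed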

- Inject the fault: parse the YAML file that defines the fault and use Chaos Mesh to inject it into Milvus, for example by killing the query node every 5 s

- Make the second assertion during fault injection: check whether the result of each operation Milvus performs during the fault matches expectations

- Delete the fault: remove the previously injected fault through Chaos Mesh

- After the fault is deleted and Milvus has resumed service (all pods are ready), make the third assertion: all operations are expected to succeed

- Run an e2e test to verify that Milvus features work. Some operations get blocked during chaos injection and may stay blocked even after the fault is removed, so the third assertion may not fully succeed as expected. This step supports the judgment of the third assertion, and for now the e2e test serves as the criterion for whether Milvus has recovered

- Run hello_milvus.py again: load the previously created collection and run a series of operations on it to check whether the data from before the fault has been recovered and is still usable

- Collect logs
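The monitoring threads and the three assertions can be sketched with a simple checker class. This is an illustrative stand-in, not the actual CreateChecker or SearchChecker implementation. Each Checker repeats a zero-argument operation in a background thread, counts successes and failures, and can be reset between the before, during, and after phases. The expected success rate during the fault depends on which component is killed, so the threshold is left as a parameter.

```python
# Minimal chaos-checker sketch; an illustrative stand-in, not the Milvus checkers.
import threading
import time


class Checker:
    def __init__(self, operation, interval=0.01):
        self.operation = operation        # zero-argument callable, e.g. a search
        self.interval = interval
        self.succ = 0
        self.fail = 0
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._loop, daemon=True)

    def _loop(self):
        while not self._stop.is_set():
            try:
                self.operation()
                self.succ += 1
            except Exception:
                self.fail += 1
            time.sleep(self.interval)

    def start(self):
        self._thread.start()

    def stop(self):
        self._stop.set()
        self._thread.join()

    def reset(self):
        """Clear counters at the start of a new phase (before/during/after chaos)."""
        self.succ = self.fail = 0

    def success_rate(self):
        total = self.succ + self.fail
        return self.succ / total if total else 0.0


def assert_all_succeed(checkers, threshold=1.0):
    """First and third assertions: every operation should succeed (rate >= threshold)."""
    for op, checker in checkers.items():
        rate = checker.success_rate()
        assert rate >= threshold, f"{op}: success rate {rate:.2f} < {threshold}"
```

In a real run, each operation would wrap an SDK call, such as a search on a dedicated collection. The reset call would be made when the fault is injected and again when it is deleted.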

Stability and performance testing

Stability testing

The goals of stability testing:

- Milvus runs smoothly for a set duration under a normal load

- While it runs, system resource usage stays stable and Milvus services work normally

Two load scenarios are considered:

- Read-intensive: 90% search requests, 5% insert requests, 5% other. This is mainly an offline scenario. Data is rarely updated after import, and the system mostly serves queries

- Write-intensive: 50% insert requests, 40% search requests, 10% other. This is mainly an online scenario that has to serve both inserts and queries
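A load generator for these two scenarios only needs to draw operations according to the stated ratios. The sketch below uses random.choices from the Python standard library. The scenario keys and operation names are assumptions. A real stability run would send each drawn operation to Milvus instead of just returning the list.

```python
# Sketch of a weighted request mix; ratios from the scenarios above, names assumed.
import random
from collections import Counter

SCENARIOS = {
    "read_intensive": {"search": 90, "insert": 5, "other": 5},
    "write_intensive": {"insert": 50, "search": 40, "other": 10},
}


def request_stream(scenario, n, seed=None):
    """Return n operation names drawn according to the scenario's weights."""
    mix = SCENARIOS[scenario]
    rng = random.Random(seed)
    return rng.choices(list(mix), weights=list(mix.values()), k=n)


def observed_ratio(ops):
    """Fraction of each operation type in a generated stream."""
    counts = Counter(ops)
    return {op: counts[op] / len(ops) for op in counts}
```

Seeding the generator makes a run reproducible, which helps when comparing resource curves across versions.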

Check items:

- Memory usage stays smooth

- CPU usage stays smooth

- IO latency stays smooth

- Milvus pods stay in a normal state

- Milvus service response time stays smooth
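To automate a "smooth" check, it has to become a concrete pass/fail rule. One possible rule is to fit a least-squares line to periodic samples, such as memory usage, and fail the run if the slope is larger than a chosen threshold. This would catch a slow leak. The rule and the threshold are assumptions here, not taken from the Milvus tooling.

```python
# One possible "smoothness" rule: bound the least-squares trend of periodic samples.
from statistics import mean


def trend_slope(samples):
    """Least-squares slope of samples taken at equal intervals (units per sample)."""
    n = len(samples)
    if n < 2:
        return 0.0
    xs = range(n)
    x_bar, y_bar = (n - 1) / 2, mean(samples)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, samples))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


def is_stable(samples, max_slope):
    """True when the fitted change per sample stays within max_slope."""
    return abs(trend_slope(samples)) <= max_slope
```

A slope test ignores short spikes and reacts to sustained drift. For response time, a percentile bound would probably complement it better than a trend alone.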

Performance testing

Goals of performance testing:

- Measure the performance of each Milvus interface

- Find the best parameter configuration for each interface by comparing performance

- Serve as a performance baseline that prevents regressions in later versions

- Find performance bottlenecks and provide a reference for performance tuning

Main performance scenarios:

- Data insertion performance

  - Metric: throughput

  - Variables: number of vectors inserted per batch, ...

- Index building performance

  - Metric: index build time

  - Variables: index type, number of index nodes, ...

- Vector query performance

  - Metrics: response time, query vectors per second, requests per second, recall

  - Variables: nq, topK, dataset size, dataset type, index type, number of query nodes, deployment mode, ...

- ...
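Two of the query metrics above come down to short formulas. Recall@K is the fraction of the true top-K neighbor IDs that appear in the returned top-K, averaged over queries. Query vectors per second is nq times the number of requests, divided by the elapsed time. The sketch assumes that ground-truth neighbor IDs are available, as they are for ann-benchmarks datasets. The function names are hypothetical.

```python
# Sketch of recall@K and query throughput; function names are hypothetical.
def recall_at_k(results, ground_truth, k):
    """Mean fraction of the true top-k IDs found in each query's top-k results."""
    assert len(results) == len(ground_truth)
    hits = 0
    for got, truth in zip(results, ground_truth):
        hits += len(set(got[:k]) & set(truth[:k]))
    return hits / (k * len(results))


def vectors_per_second(nq, batches, elapsed_s):
    """Query vectors per second when `batches` requests of nq vectors took elapsed_s."""
    return nq * batches / elapsed_s
```

Requests per second is the same calculation without the factor nq.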

Test framework and process

- Parse and update the configuration, and define the metrics

  - server-configmap holds the Milvus standalone or cluster configuration

  - client-configmap holds the test case configuration

- Set up the server and the client

- Prepare the data

- Run the request interactions between client and server

- Report and display the metric data
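The first step, parsing and updating the configuration, often amounts to laying a test case's overrides on top of a default configuration. A minimal recursive merge is sketched below. The keys in DEFAULT_SERVER and DEFAULT_CLIENT are hypothetical and do not reflect the real server-configmap or client-configmap schema. sift-128-euclidean is one of the ann-benchmarks datasets.

```python
# Sketch of config resolution: case overrides applied on top of defaults (keys assumed).
import copy


def deep_merge(base, override):
    """Return a new dict: base updated recursively with override (override wins)."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


DEFAULT_SERVER = {"deploy_mode": "standalone", "queryNode": {"replicas": 1}}
DEFAULT_CLIENT = {"dataset": "sift-128-euclidean", "search": {"nq": 1, "top_k": 10}}
```

Because the merge copies rather than mutates, each test case starts from clean defaults.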

Efficiency improvement methods and tools

As the sections above show, many steps of the test process are the same. What mostly changes is the Milvus server configuration, the client configuration, and the parameters passed to the interfaces. Covering every test scenario means running many experiments over different combinations of these configurations. Reusing code and processes, and keeping testing efficient, are therefore very important.
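Covering these combinations means taking the Cartesian product of the variables. The sketch below expands a small grid with itertools.product, so that one test flow can be reused for every combination. The variables and values are illustrative. IVF_FLAT and HNSW are Milvus index types; the rest is assumed.

```python
# Sketch of expanding test variables into every combination (values illustrative).
import itertools

GRID = {
    "index_type": ["IVF_FLAT", "HNSW"],
    "nq": [1, 100],
    "top_k": [10, 100],
}


def expand(grid):
    """Yield one parameter dict per combination of the grid's values."""
    keys = list(grid)
    for values in itertools.product(*(grid[k] for k in keys)):
        yield dict(zip(keys, values))
```

Two values for each of three variables already produce eight runs, which is why it pays to reuse the process.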

- The original methods are wrapped with an api_request decorator that acts like an API gateway. It receives all API requests, sends them to Milvus, receives the responses, and returns them to the client. This makes it easier to capture log information, such as the parameters passed and the results returned. The returned results can then be verified by the checker module, and all check methods can be defined in that one module

- Default parameters are set, and several required initialization steps are wrapped into a single function, so work that used to take a lot of code can be done through one interface. This removes a lot of redundant, repetitive code and makes each test case simpler and clearer

- Each test case uses its own collection, which keeps data isolated between test cases. A test case creates a new collection when it starts and deletes that collection after the test finishes

- The test cases are independent of each other, so they can run concurrently with the pytest-xdist plugin (for example, pytest -n 4), which makes execution faster
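The api_request wrapper and per-test collection isolation can be sketched as below. FakeClient is a hypothetical stand-in for the SDK. The api_request here is a plain function rather than the decorator used in the Milvus framework, and its (result, succeeded) return shape is an assumption. isolated_collection gives each case a uniquely named collection and always drops it, even if the test fails.

```python
# Hypothetical sketch: a single call gateway with logging, plus per-test isolation.
import logging
import uuid
from contextlib import contextmanager

log = logging.getLogger("api_request")


def api_request(func, *args, **kwargs):
    """Call func, log the request and response, and return (result, succeeded)."""
    log.debug("request %s args=%s kwargs=%s", func.__name__, args, kwargs)
    try:
        result = func(*args, **kwargs)
    except Exception as exc:
        log.debug("error %s: %s", func.__name__, exc)
        return exc, False
    log.debug("response %s: %r", func.__name__, result)
    return result, True


class FakeClient:
    """In-memory stand-in for an SDK client (not pymilvus)."""

    def __init__(self):
        self.collections = set()

    def create_collection(self, name):
        self.collections.add(name)
        return name

    def drop_collection(self, name):
        self.collections.discard(name)


@contextmanager
def isolated_collection(client, prefix="test"):
    """Create a uniquely named collection for one test case and drop it afterwards."""
    name = f"{prefix}_{uuid.uuid4().hex[:8]}"
    api_request(client.create_collection, name)
    try:
        yield name
    finally:
        api_request(client.drop_collection, name)
```

With pytest, the same logic would typically live in a fixture that yields the collection name. Cases then stay independent and are safe to run in parallel with pytest-xdist.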

GitHub Actions

Advantages of GitHub Actions:

- Deep integration with GitHub as a native CI/CD tool

- Uniformly configured machine environments with a wide range of common software development tools preinstalled

- Support for multiple operating systems and versions: Ubuntu, macOS, and Windows Server

- A rich marketplace of plugins that provide many out-of-the-box features

- Matrix combinations reuse the same test process and run jobs concurrently, which improves efficiency

Deployment tests and reliability tests both need an isolated environment, which makes them well suited to small-scale tests on GitHub Actions. They run on a daily schedule against the latest master image and serve as a daily check.

Performance testing tools

- Argo Workflows: creates workflows that schedule tasks and chain the steps together. As the figure on the right shows, Argo can run multiple tasks at the same time

- Kubernetes Dashboard: visualizes server-configmap and client-configmap

- NAS: mounts the commonly used ann-benchmarks datasets

- InfluxDB and MongoDB: store performance metric results

- Grafana: monitors server resource metrics and client performance metrics

- Redash: displays performance charts

For the full video walkthrough, see:

Deep Dive #7: Detailed explanation of the Milvus 2.0 quality assurance system (Bilibili)

If you have any suggestions for improving Milvus, you are welcome to reach us on GitHub or through our other official channels.

With the vision of redefining data science, Zilliz is committed to building a world-leading open-source technology innovation company. It aims to unlock the hidden value of unstructured data for enterprises through open-source and cloud-native solutions.

Zilliz built the Milvus vector database to speed up the development of next-generation data platforms. Milvus is a graduated project of the LF AI & Data Foundation. It can manage very large unstructured datasets and is widely used in new drug discovery, recommendation systems, chatbots, and other areas.

Copyright notice

This article was created by [Zilliz Planet]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204231654325045.html

边栏推荐

- 手写事件发布订阅框架

- Pytorch: the pit between train mode and eval mode

- 昆腾全双工数字无线收发芯片KT1605/KT1606/KT1607/KT1608适用对讲机方案

- MySQL master-slave configuration under CentOS

- Custom implementation of Baidu image recognition (instead of aipocr)

- Nodejs reads the local JSON file through require. Unexpected token / in JSON at position appears

- 批量制造测试数据的思路,附源码

- AIOT产业技术全景结构-数字化架构设计(8)

- LVM and disk quota

- Redis docker installation

猜你喜欢

Project framework of robot framework

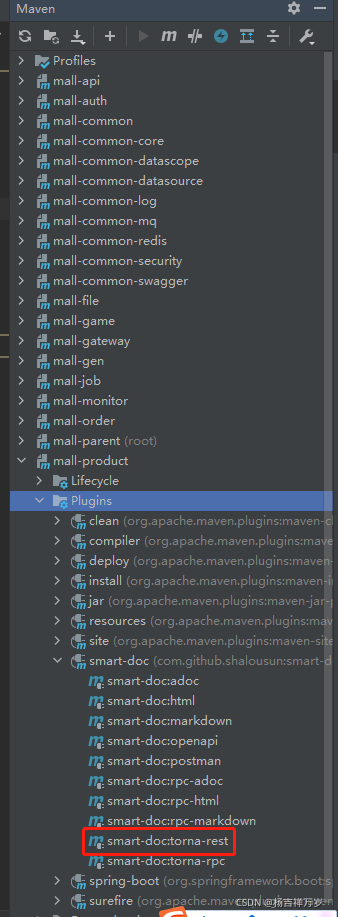

Smart doc + Torna generate interface document

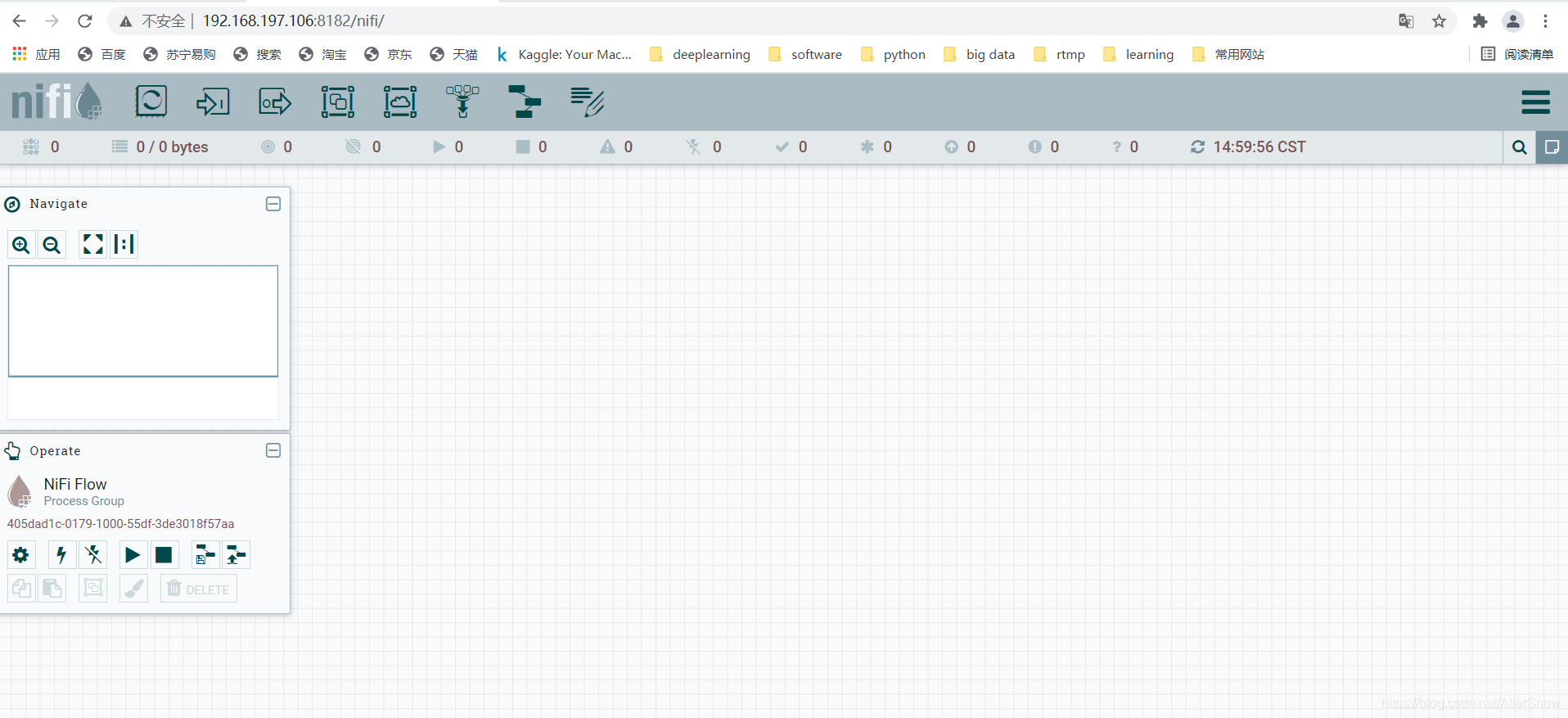

Nifi fast installation and file synchronization

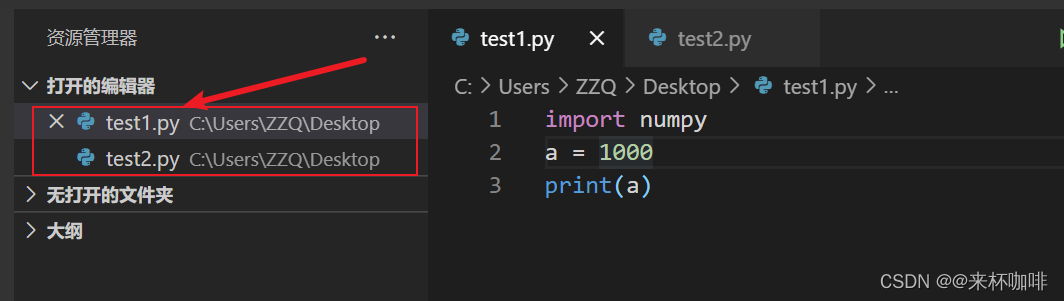

vscode如何比较两个文件的异同

![[pimf] openharmony paper Club - what is the experience of wandering in ACM survey](/img/b6/3df53baafb9aad3024d10cf9b56230.png)

[pimf] openharmony paper Club - what is the experience of wandering in ACM survey

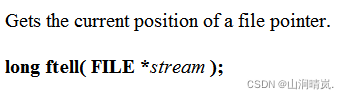

Detailed explanation of file operation (2)

LVM and disk quota

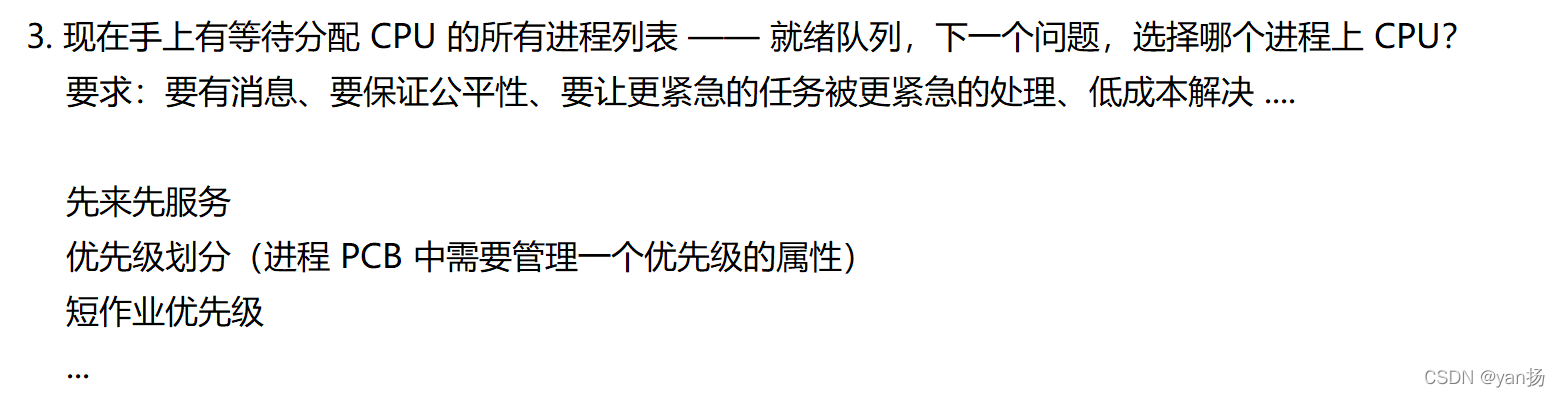

面试百分百问到的进程,你究竟了解多少

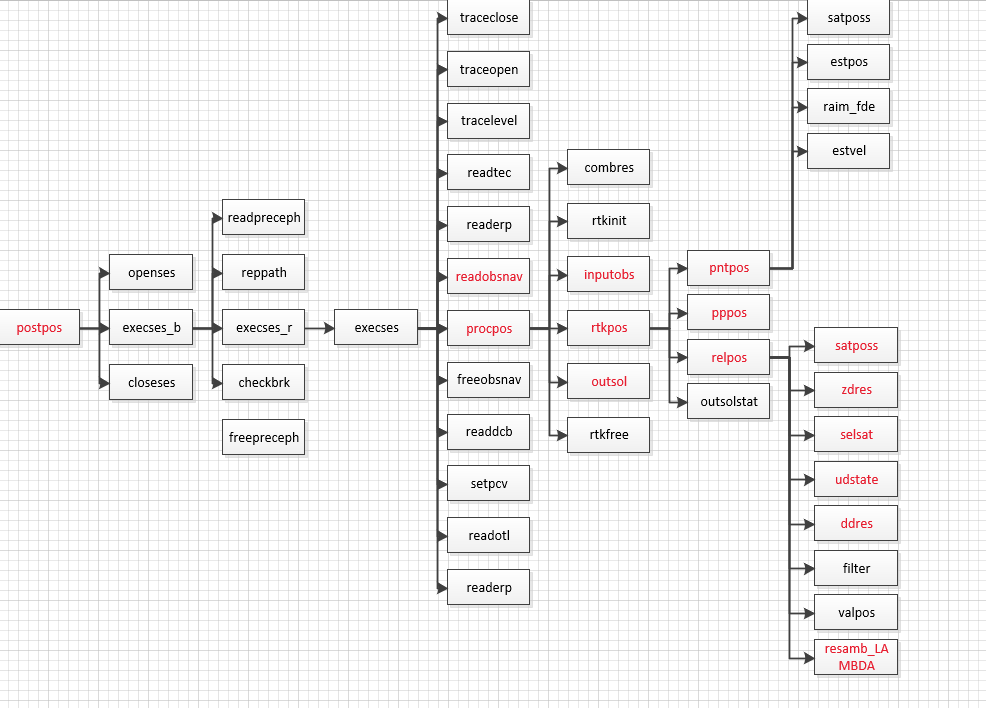

Rtklib 2.4.3 source code Notes

批量制造测试数据的思路,附源码

随机推荐

True math problems in 1959 college entrance examination

Bytevcharts visual chart library, I have everything you want

The new MySQL table has a self increasing ID of 20 bits. The reason is

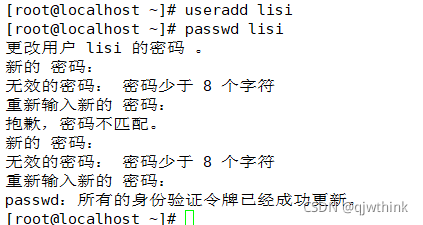

Change the password after installing MySQL in Linux

Detailed explanation of the penetration of network security in the shooting range

Nodejs installation and environment configuration

Installation and management procedures

Project framework of robot framework

RTKLIB 2.4.3源码笔记

Derivation of Σ GL perspective projection matrix

English | day15, 16 x sentence true research daily sentence (clause disconnection, modification)

SQL database

蓝桥杯省一之路06——第十二届省赛真题第二场

【题解】[SHOI2012] 随机树

Dlib of face recognition framework

VLAN高级技术,VLAN聚合,超级Super VLAN ,Sub VLAN

Production environment——

Flask如何在内存中缓存数据?

On the security of key passing and digital signature

Idea of batch manufacturing test data, with source code