当前位置:网站首页>Spark 算子之groupBy使用

Spark 算子之groupBy使用

2022-04-23 15:45:00 【逆风飞翔的小叔】

前言

groupBy,顾名思义,即为分组的含义,在mysql中groupBy经常被使用,相信很多同学并不陌生,作为Spark 中比较常用的算子之一,有必要深入了解和学习;

函数签名

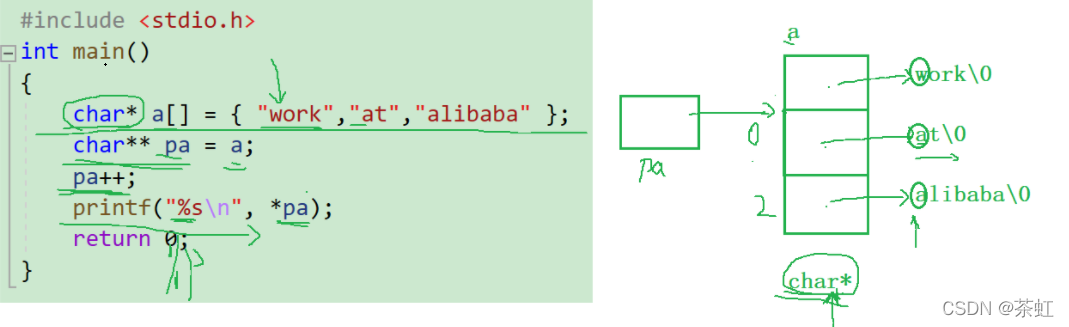

def groupBy[K](f: T => K )(implicit kt: ClassTag[K]): RDD[(K, Iterable[T])]

函数说明

将数据根据指定的规则进行分组 , 分区默认不变,但是数据会被 打乱重新组合 ,我们将这样的操作称之为 shuffle 。极限情况下,数据可能被分在同一个分区中

补充说明:

-

一个组的数据在一个分区中,但是并不是说一个分区中只有一个组

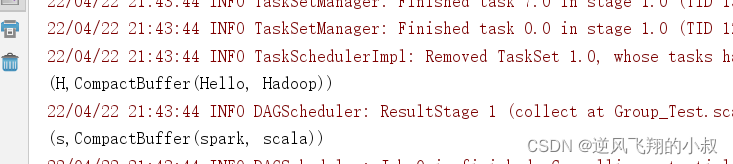

案例展示一

自定义一个集合,里面有多个字符串,按照每个元素的首字母进行分组

import org.apache.spark.{SparkConf, SparkContext}

import org.apache.spark.rdd.RDD

object Group_Test {

def main(args: Array[String]): Unit = {

val sparkConf = new SparkConf().setMaster("local[*]").setAppName("Operator")

val sc = new SparkContext(sparkConf)

val rdd : RDD[String] = sc.makeRDD(List("Hello","spark","scala","Hadoop"))

val result = rdd.groupBy(_.charAt(0))

result.collect().foreach(println)

}

}

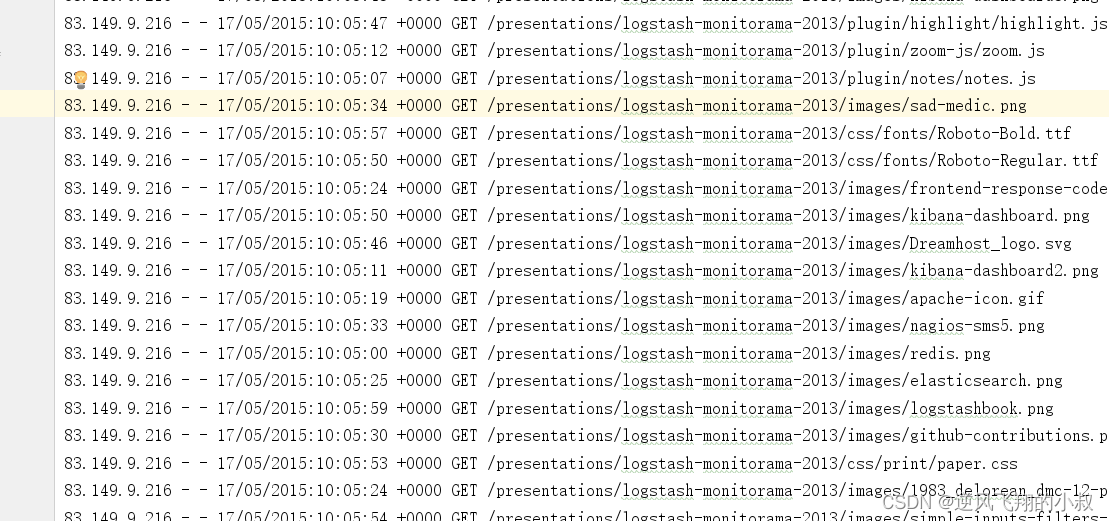

案例展示二

如下所示,为一个日志文件,现在需要按照时间进行分组,统计各个时间段内的数量

import java.text.SimpleDateFormat

import org.apache.spark.{SparkConf, SparkContext}

import org.apache.spark.rdd.RDD

object Group_Test {

def main(args: Array[String]): Unit = {

val sparkConf = new SparkConf().setMaster("local[*]").setAppName("Operator")

val sc = new SparkContext(sparkConf)

val rdd: RDD[String] = sc.textFile("E:\\code-self\\spi\\datas\\apache.log")

val result = rdd.map(

line => {

val datas = line.split(" ")

val time = datas(3)

val sdf = new SimpleDateFormat("dd/MM/yyyy:HH:mm")

val date = sdf.parse(time)

val sdf1 = new SimpleDateFormat("yyyy:HH")

val hour = sdf1.format(date)

(hour, 1)

}

).groupBy(_._1)

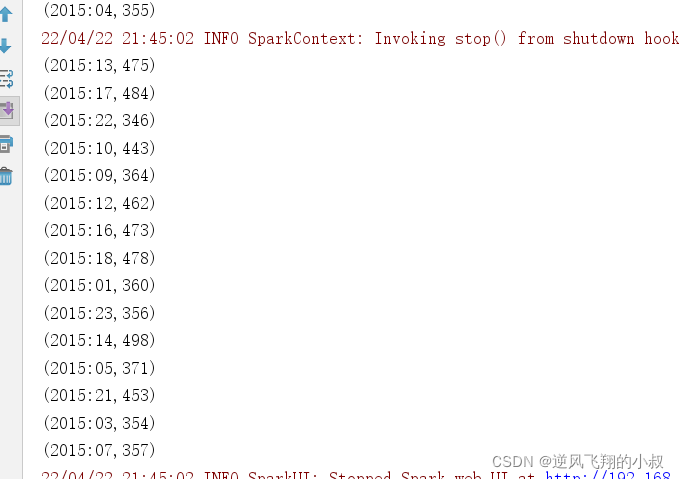

result.map {

case (hour, iter) => {

(hour, iter.size)

}

}.collect().foreach(println)

}

}

版权声明

本文为[逆风飞翔的小叔]所创,转载请带上原文链接,感谢

https://blog.csdn.net/congge_study/article/details/124355671

边栏推荐

- Code live collection ▏ software test report template Fan Wen is here

- APISIX jwt-auth 插件存在错误响应中泄露信息的风险公告(CVE-2022-29266)

- PHP PDO ODBC loads files from one folder into the blob column of MySQL database and downloads the blob column to another folder

- Why is IP direct connection prohibited in large-scale Internet

- 时序模型:长短期记忆网络(LSTM)

- Interview questions of a blue team of Beijing Information Protection Network

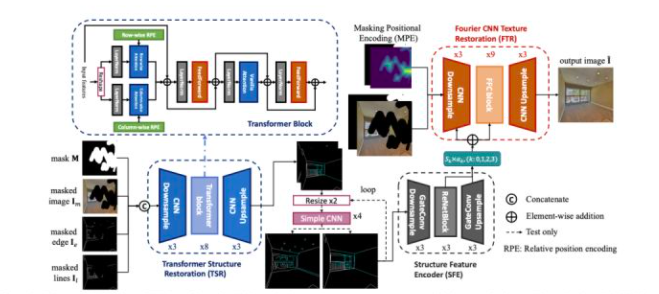

- 【AI周报】英伟达用AI设计芯片;不完美的Transformer要克服自注意力的理论缺陷

- CVPR 2022 quality paper sharing

- Upgrade MySQL 5.1 to 5.69

- 一刷312-简单重复set-剑指 Offer 03. 数组中重复的数字(e)

猜你喜欢

随机推荐

Use bitnami PostgreSQL docker image to quickly set up stream replication clusters

字符串排序

cadence SPB17.4 - Active Class and Subclass

Treatment of idempotency

Special analysis of China's digital technology in 2022

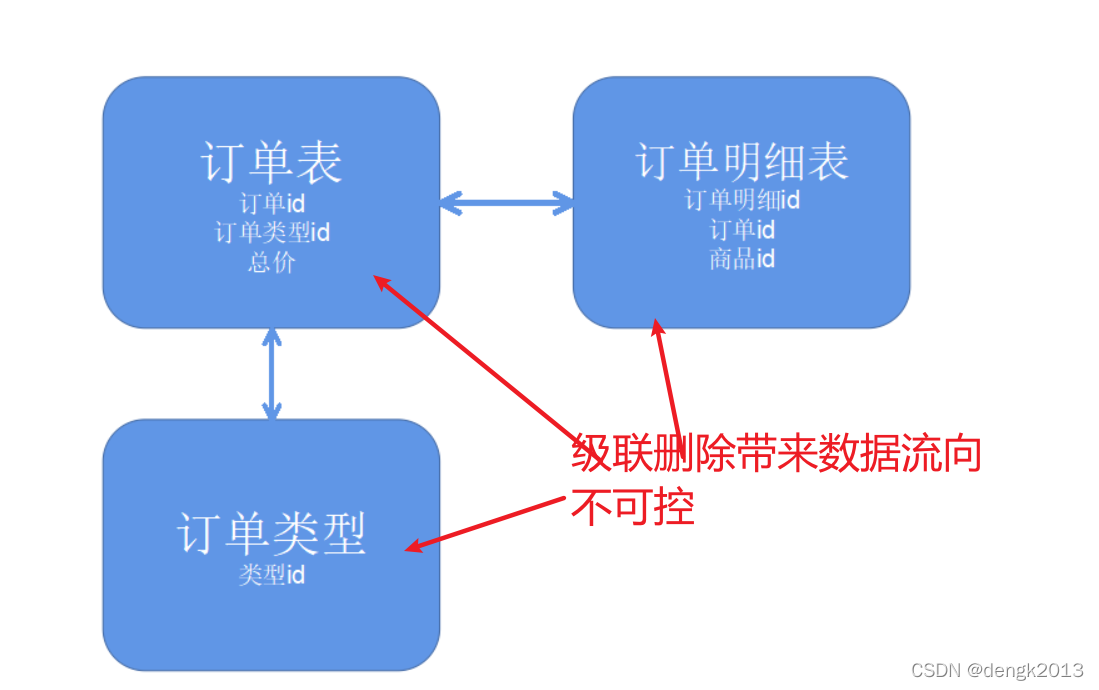

为啥禁用外键约束

API IX JWT auth plug-in has an error. Risk announcement of information disclosure in response (cve-2022-29266)

Upgrade MySQL 5.1 to 5.66

php函数

Connectez PHP à MySQL via aodbc

Multitimer V2 reconstruction version | an infinitely scalable software timer

What if the package cannot be found

PHP operators

Redis master-slave replication process

CVPR 2022 quality paper sharing

How do you think the fund is REITs? Is it safe to buy the fund through the bank

删除字符串中出现次数最少的字符

Open source project recommendation: 3D point cloud processing software paraview, based on QT and VTK

Why disable foreign key constraints

Connect PHP to MySQL via PDO ODBC