
Anti-crawler (0): Still scraping with bare Selenium? You're being watched! Defeating WebDriver detection

2022-04-23 05:17:00 【zzzzls~】


A brief introduction to Selenium

When we scrape a page with requests, the result may differ from what we see in the browser: data that displays normally in the browser is missing from the requests response. This is because requests fetches only the raw HTML document, whereas the page in the browser is the result of JavaScript processing. That data can come from several sources: it may be loaded through Ajax, or it may be generated by JavaScript using a specific algorithm.

There are usually two solutions:

- Dig into the Ajax logic, work out the interface address and how its encrypted parameters are constructed, then reproduce that in Python and build the Ajax request yourself.

- Simulate a browser and bypass this process entirely.

This article covers the second approach: crawling with a simulated browser.

Selenium is an automated testing tool that can drive a browser to perform specific operations such as clicking and scrolling. It can also return the source of the page as currently rendered by the browser, so what you see is what you get. For pages rendered dynamically with JavaScript, this way of scraping is very effective!
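As a minimal sketch of that idea (assuming Selenium 4 and a ChromeDriver that Selenium can locate on its own), the helper below loads a URL and returns the rendered source. The names `fetch_rendered_html` and `demo` are made up for this illustration and are not part of Selenium.

```python
def fetch_rendered_html(driver, url):
    """Load `url` and return the page source as the browser currently renders it."""
    driver.get(url)            # the browser fetches the page and runs its JavaScript
    return driver.page_source  # serialized DOM after rendering, not the raw response


def demo():
    """Try the helper against a real Chrome (needs selenium and a usable ChromeDriver)."""
    from selenium import webdriver  # imported here so the helper has no hard dependency

    driver = webdriver.Chrome()
    try:
        html = fetch_rendered_html(driver, "https://www.baidu.com")
        print(len(html))
    finally:
        driver.quit()
```

Content that arrives later through Ajax may still be missing at the moment `page_source` is read, so a real crawler would usually wait for a specific element first.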

Anti-crawler detection

However, opening a web page through Selenium and ChromeDriver still differs in some ways from opening it normally. Many websites now detect Selenium in order to block malicious crawlers.

Most of the time, detection works by checking whether the window.navigator object of the current browser window has the webdriver property set. In a normally used browser this property is undefined; once we use Selenium, it is initialized to true. Many websites check this property in JavaScript as a simple anti-Selenium measure.
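To see what a site would see, the flag can be read back through the driver. This is only a sketch: `looks_automated` is a hypothetical helper name, and `driver` is assumed to be any Selenium WebDriver instance.

```python
def looks_automated(driver):
    """Return True if page scripts would read navigator.webdriver as true."""
    flag = driver.execute_script("return navigator.webdriver")
    # Selenium maps JavaScript true/false to True/False and undefined/null to None,
    # so only an explicit True counts as "detected" here.
    return flag is True
```

Calling it right after `driver.get(...)` in a stock Selenium session is a quick way to confirm whether any of the countermeasures described later actually took effect.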

At this point we might think of simply clearing the webdriver property with JavaScript, for example by calling the execute_script method to run the following code:

Object.defineProperty(navigator, "webdriver", {

get: () => undefined})

This JavaScript statement does clear the webdriver property. However, execute_script runs it only after the page has loaded, which is too late: the website has already checked the webdriver property before the page was rendered, so this approach has no effect.

Countering the anti-crawler

Given the detection described above, the following methods can be used to get around it:

Configure Selenium options

option.add_experimental_option("excludeSwitches", ['enable-automation'])

However, ChromeDriver 79.0.3945.36 changed this behaviour: it fixed the "problem" that window.navigator.webdriver was undefined when "enable-automation" was excluded in non-headless mode. For this option to work, you need to roll Chrome back to a version before 79 and use the matching ChromeDriver version.

Alternatively, consult the CDP (Chrome DevTools Protocol) documentation and use driver.execute_cdp_cmd to issue CDP commands from Selenium. The following code needs to run only once. As long as you don't close the window opened by this driver, the statement is executed before each site's own JavaScript no matter how many URLs you open, which hides the webdriver flag.

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

from selenium.webdriver.chrome.service import Service

options = Options()

# Hide the "Chrome is being controlled by automated test software" infobar

options.add_experimental_option("excludeSwitches", ["enable-automation"])

options.add_experimental_option('useAutomationExtension', False)

# Selenium 4 takes the driver path through Service; Selenium 3 used executable_path=... instead

driver = webdriver.Chrome(service=Service(executable_path=r"E:\chromedriver\chromedriver.exe"), options=options)

# Override navigator.webdriver before any page script runs

driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {

"source": "Object.defineProperty(navigator, 'webdriver', {get: () => undefined})"

})

driver.get('https://www.baidu.com')

In addition, the following options can also remove the webdriver fingerprint:

options = Options()

options.add_argument("--disable-blink-features")

options.add_argument("--disable-blink-features=AutomationControlled")
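The flags from this section can be collected in one place. The sketch below is an illustration only: `STEALTH_ARGUMENTS`, `STEALTH_EXPERIMENTAL`, and `apply_stealth_flags` are names invented here, and `options` is assumed to be a Selenium `Options` object (anything with `add_argument` and `add_experimental_option` works).

```python
# Command-line switches and experimental options mentioned in this section.
STEALTH_ARGUMENTS = ["--disable-blink-features=AutomationControlled"]
STEALTH_EXPERIMENTAL = {
    "excludeSwitches": ["enable-automation"],
    "useAutomationExtension": False,
}


def apply_stealth_flags(options):
    """Add the switches and experimental options above to `options` and return it."""
    for arg in STEALTH_ARGUMENTS:
        options.add_argument(arg)
    for name, value in STEALTH_EXPERIMENTAL.items():
        options.add_experimental_option(name, value)
    return options
```

Typical use would be `options = apply_stealth_flags(Options())` before creating the driver. Whether a given flag still has any effect depends on the Chrome and ChromeDriver versions, as noted above.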

Taking control of an already-open browser

Since a browser opened by Selenium carries some specific parameters, we can take a different route: open a real browser manually, then simply let Selenium control it!

- Launch a browser through the Chrome DevTools Protocol, which lets clients inspect and debug Chrome

(1) Close all open Chrome windows.

(2) Open CMD and enter the following command. Change the Chrome path to your local installation location; --remote-debugging-port can be any free port.

"C:\Program Files (x86)\Google\Chrome\Application\chrome.exe" --remote-debugging-port=9222

If the path is correct, a new Chrome window opens.

- Connect Selenium to the Chrome window that is now open

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

from selenium.webdriver.chrome.service import Service

options = Options()

# The port here must match the one used in the previous step

# Most other blogs use 127.0.0.1:9222 here; in testing it could not connect, so localhost:9222 is recommended

# For the specific reason, see: https://www.codenong.com/6827310/

options.add_experimental_option("debuggerAddress", "localhost:9222")

# Selenium 4 takes the driver path through Service; Selenium 3 used executable_path=... instead

driver = webdriver.Chrome(service=Service(executable_path=r"E:\chromedriver\chromedriver.exe"), options=options)

driver.get('https://www.baidu.com')

However, this method has a drawback:

Once the browser has been started, browser settings made through Selenium, such as --proxy-server, no longer take effect. You can, of course, add them on the command line when launching Chrome in the first place.
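The manual launch step, including any flags that must be set up front, can also be scripted. This is a sketch under assumptions: `build_chrome_command` and `launch_chrome` are hypothetical helper names, and the Chrome path, profile directory, and proxy address are whatever applies on your machine.

```python
import subprocess


def build_chrome_command(chrome_path, port=9222, user_data_dir=None, proxy=None):
    """Return the argument list for starting Chrome with remote debugging enabled."""
    cmd = [chrome_path, f"--remote-debugging-port={port}"]
    if user_data_dir:
        # A separate profile directory keeps this instance apart from your normal one.
        cmd.append(f"--user-data-dir={user_data_dir}")
    if proxy:
        # Must be given at launch; setting it later through Selenium has no effect.
        cmd.append(f"--proxy-server={proxy}")
    return cmd


def launch_chrome(chrome_path, **kwargs):
    """Start Chrome in the background and return the Popen handle."""
    return subprocess.Popen(build_chrome_command(chrome_path, **kwargs))
```

After `launch_chrome(...)` returns, Selenium can attach with `debuggerAddress` set to `localhost:` followed by the same port.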

mitmproxy as a man-in-the-middle

mitmproxy works on a principle similar to packet capture tools such as Fiddler and Charles. Acting as a third party, it poses as your browser when sending requests to the server, and the server's response passes through it on the way back to your browser. By writing a script you can change the data in transit, thereby "deceiving" both the server and the client.

Some websites use a separate JS file to detect the webdriver flag. We can use mitmproxy to intercept the JS file that performs the detection and forge the expected result.
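A rough sketch of such an interceptor follows. It relies on mitmproxy's `response` hook and the `flow.request.pretty_url` and `flow.response.text` attributes. Everything else is an assumption made for illustration: `TARGET_SUBSTRING` stands in for whatever identifies the real detection script, and replacing the property read with `undefined` is just one naive way to forge the result.

```python
import re

# Hypothetical: a substring that identifies the detection script's URL.
TARGET_SUBSTRING = "detect.js"

# Matches reads of navigator.webdriver, with or without a leading "window.".
_WEBDRIVER_RE = re.compile(r"(?:window\.)?navigator\.webdriver\b")


def patch_js(body):
    """Replace every read of navigator.webdriver with the literal `undefined`."""
    return _WEBDRIVER_RE.sub("undefined", body)


def response(flow):
    """mitmproxy hook, called for each server response that passes through the proxy."""
    if TARGET_SUBSTRING in flow.request.pretty_url:
        flow.response.text = patch_js(flow.response.text)
```

Saved as a script, this would be loaded with `mitmdump -s` and the browser pointed at the proxy. Real detection scripts are usually minified or obfuscated, so a plain text substitution like this may well need adapting.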

Reference: Using mitmproxy + Python as an intercepting proxy

To be continued …

In fact, webdriver is not the only giveaway. After Selenium opens a browser, these telltale identifiers can also appear:

webdriver

__driver_evaluate

__webdriver_evaluate

__selenium_evaluate

__fxdriver_evaluate

__driver_unwrapped

__webdriver_unwrapped

__selenium_unwrapped

__fxdriver_unwrapped

_Selenium_IDE_Recorder

_selenium

calledSelenium

_WEBDRIVER_ELEM_CACHE

ChromeDriverw

driver-evaluate

webdriver-evaluate

selenium-evaluate

webdriverCommand

webdriver-evaluate-response

__webdriverFunc

__webdriver_script_fn

__$webdriverAsyncExecutor

__lastWatirAlert

__lastWatirConfirm

__lastWatirPrompt

...

If you don't believe it, try an experiment: open https://bot.sannysoft.com/ in a normal browser, in Selenium + Chrome, and in Selenium + headless Chrome, and compare the results.
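The identifiers listed above are also handy for spotting which of a site's scripts perform the detection. The sketch below simply reports which markers a piece of JavaScript source mentions. `MARKERS` is a subset of that list, and `find_markers` is a hypothetical helper name.

```python
# A subset of the identifiers listed above; extend as needed.
MARKERS = [
    "__driver_evaluate",
    "__webdriver_evaluate",
    "__selenium_evaluate",
    "_Selenium_IDE_Recorder",
    "calledSelenium",
    "__webdriver_script_fn",
]


def find_markers(js_source, markers=MARKERS):
    """Return the markers (in list order) that occur anywhere in `js_source`."""
    return [marker for marker in markers if marker in js_source]
```

A script for which `find_markers` returns a non-empty list is a likely candidate for closer inspection, although obfuscated code can hide these strings.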

Of course, these examples are not meant to undermine your confidence. I only hope you won't become complacent after learning a few techniques: stay humble, and keep moving forward with a passion for technology. The war without gunsmoke between crawlers and anti-crawlers is still going on …

Copyright notice

This article was written by [zzzzls~]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204220547251831.html

边栏推荐

- SQLyog的基本使用

- How does PostgreSQL parse URLs

- 云计算与云原生 — OpenShift 的架构设计

- Good simple recursive problem, string recursive training

- Data management of basic operation of mairadb database

- Redis persistence

- Implementation of resnet-34 CNN with kears

- The introduction of lean management needs to achieve these nine points in advance

- Musk and twitter storm drama

- Blender程序化地形制作

猜你喜欢

The introduction of lean management needs to achieve these nine points in advance

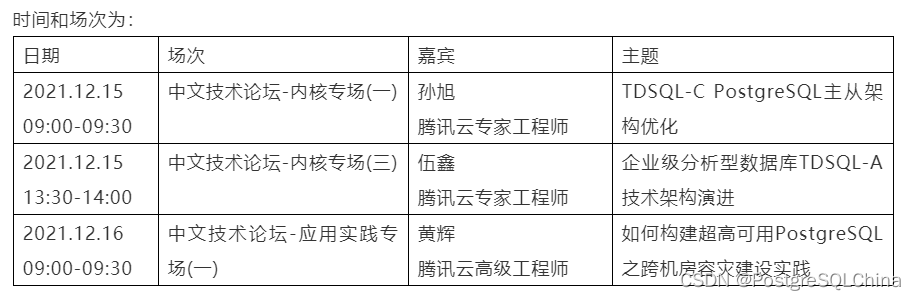

At pgconf Asia Chinese technology forum, listen to Tencent cloud experts' in-depth understanding of database technology

Restful toolkit of idea plug-in

JSP-----JSP简介

Good simple recursive problem, string recursive training

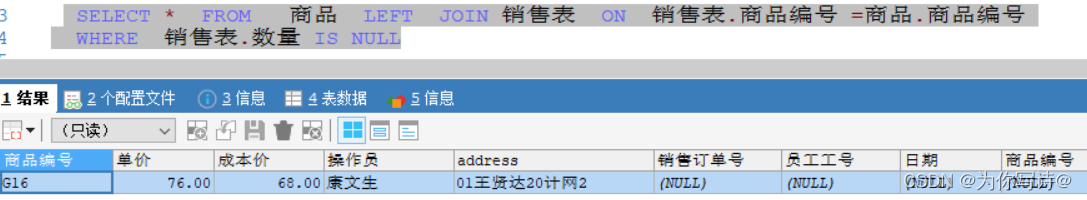

Where, on when MySQL external connection is used

引入精益管理方式,需要提前做到这九点

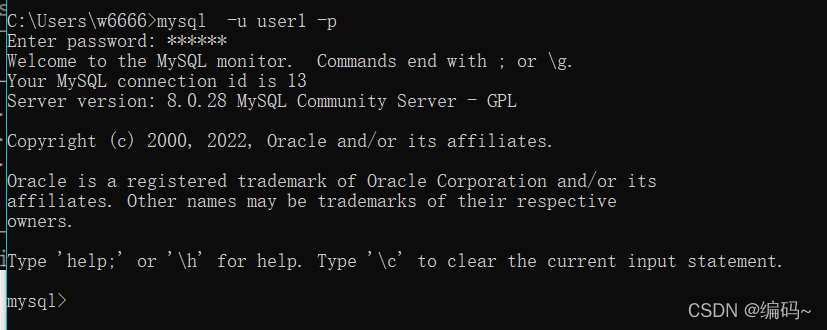

MySQL basics 3

Discussion on flow restriction

无线网怎么用手机验证码登录解决方案

随机推荐

The concept of meta universe is popular. Is virtual real estate worth investing

Deep learning notes - object detection and dataset + anchor box

Get the number of days between dates, get the Chinese date, get the date of the next Monday of the date, get the working day, get the rest day

了解 DevOps,必读这十本书!

[untitled] kimpei kdboxpro's cool running lantern coexists with beauty and strength

Detailed explanation of concurrent topics

Day. JS common methods

Golang memory escape

Chapter I overall project management of information system project manager summary

Power consumption parameters of Jinbei household mute box series

MySQL circularly adds sequence numbers according to the values of a column

Acid of MySQL transaction

Top 25 Devops tools in 2021 (Part 2)

Backup MySQL database with Navicat

低代码和无代码的注意事项

项目经理值得一试的思维方式:项目成功方程式

What role do tools play in digital transformation?

My old programmer's perception of the dangers and opportunities of the times?

The difference between static pipeline and dynamic pipeline

Publish your own wheel - pypi packaging upload practice