Flink SQL: unifying stream and batch processing

2022-04-23 09:02:00 【Big data Institute】

1 Flink's unified view of stream and batch

1.1 Bounded and unbounded streams

In Flink, batch processing is a special case of stream processing: a batch is simply a bounded stream.
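A minimal sketch of that idea in plain Python (not Flink code; `running_total` and the sample inputs are made up for illustration). The same operator consumes a bounded input and an unbounded one. Only the bounded input ever yields a final answer.

```python
import itertools

def running_total(values):
    """Consume values one at a time and yield the total seen so far."""
    total = 0
    for v in values:
        total += v
        yield total

# Bounded stream (a batch): the input ends, so the last emitted value is final.
batch_totals = list(running_total([3, 1, 4]))
batch_answer = batch_totals[-1]

# Unbounded stream: same operator, but we can only ever look at a prefix.
first_five = list(itertools.islice(running_total(itertools.count(1)), 5))
```

The operator itself does not know whether its input is bounded, which is the sense in which a batch job is just a stream job whose input happens to end.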

1.2 Flink's old architecture and its problems

1. From the perspective of Flink users (enterprise developers)

(1) Flink SQL support was weak, so developers had to choose between two low-level APIs (DataStream and DataSet) and sometimes even maintain two code bases.

(2) The two APIs differed in semantics, connector support, error recovery strategies, and so on.

(3) The Table API was likewise affected by the differing underlying APIs, connectors, and so on.

2. From the perspective of Flink developers (the Flink community)

(1) Different translation processes, different operator implementations, and different task execution.

(2) Code was hard to reuse.

(3) Two independent technology stacks needed more people, so feature development slowed down, performance improvements became harder, and bugs multiplied.

1.3 Flink's new unified stream-batch architecture

2 Flink's layered APIs

1. API hierarchy

(1) Before Flink 1.12, the Table API and SQL were still under active development and did not implement every feature needed for stream-batch unification, so they had to be used with care.

(2) Since Flink 1.12, the Table API and SQL have been mature enough to use in production with confidence.

2. Execution process of the Flink Table API/SQL

3 Flink stream-batch unification

3.1 Relational algebra and stream processing

The Table API and SQL, the top layer of Flink's APIs, unify stream and batch: whether the input is processed as a stream (event by event) or as a batch (a bounded stream), a Table API or SQL query has the same semantics.

SQL was designed for the relational model and batch processing, so SQL queries on streams are harder to implement and to reason about. We start with a few concepts specific to stream processing to explain how Flink executes SQL on a stream.

Relational algebra (here, mainly tables in a relational database) and SQL were designed for batch processing, which leaves a natural gap between them and stream processing.

3.2 Understanding dynamic tables and continuous queries

To make relational algebra (Table API/SQL) usable in stream processing, Flink introduces the concept of dynamic tables (Dynamic Tables).

Stream processing works on unbounded data streams, which are quite different from the tables we know from relational databases. One idea is therefore to convert the stream into a Table and run SQL on it. The result of that SQL is not fixed, however: it is updated continuously as new data arrives.

A table whose earlier results keep being updated as new data arrives is what the Flink Table API calls a "dynamic table" (Dynamic Table).

Dynamic tables are the core concept behind the Table API's and SQL's support for streaming data. Unlike static tables, which represent batch data, dynamic tables change over time. A dynamic table can be queried just like a static batch table, and querying it yields a continuous query (Continuous Query). A continuous query never terminates and produces another dynamic table as its result. The query keeps updating its dynamic result table to reflect changes to its dynamic input table.

Running a relational query on a data stream involves the following main conversions between streams and dynamic tables.

(1) Convert the data stream to a dynamic table.

(2) Run a continuous query on the dynamic table, producing a new dynamic table.

(3) Convert the resulting dynamic table back into a data stream.

3.3 Dynamic tables in detail

1. Define the dynamic table (click event stream)

CREATE TABLE clicks (
  `user` VARCHAR,      -- user name (user is a reserved keyword in Flink SQL, so it is quoted)
  url    VARCHAR,      -- URL the user visited
  cTime  TIMESTAMP(3)  -- access time
) WITH (...);

2. Convert the data stream to a dynamic table

To run a relational query, the data stream must first be converted into a dynamic table. In the figure for this step, the click stream is on the left and the dynamic table on the right: every new event on the stream corresponds to an INSERT into the dynamic table (there are modes other than insert, covered later).

3. Continuous query

Next, we run a continuous query on the dynamic table to produce a new dynamic table (the result table). A continuous query never stops: as new rows arrive in the input table, it keeps computing and updating the result table (in one of several modes, covered later). Here we run a GROUP BY ... COUNT aggregation on the clicks dynamic table; over time, the dynamic result table on the right changes with every row added to the input table on the left.
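To make the behavior concrete, here is a small plain-Python simulation of that aggregation. It is only a sketch of the semantics, not how Flink implements it, and the user names and URLs are invented sample data.

```python
def continuous_count(clicks):
    """Yield a snapshot of the result table after every input row."""
    result = {}  # user -> cnt: the dynamic result table
    for user, _url in clicks:
        result[user] = result.get(user, 0) + 1
        yield dict(result)  # copy, so earlier snapshots stay unchanged

# Hypothetical click events arriving in this order.
clicks = [
    ("Mary", "./home"),
    ("Bob", "./cart"),
    ("Mary", "./prod?id=1"),
    ("Liz", "./home"),
]
snapshots = list(continuous_count(clicks))
```

The first and second clicks each insert a new row into the result table, while Mary's second click updates her existing row in place. That mix of inserts and updates is why the result table cannot always be emitted as a plain append-only stream.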

We can also run the GROUP BY ... COUNT aggregation on the clicks dynamic table with a tumbling window added, counting each user's visits per one-hour tumbling window. Over time the dynamic result table on the right still changes with the data in the input table on the left, but each window's result is independent and is computed only when that window ends.
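The windowed variant can be sketched the same way. The code below is a plain-Python illustration with invented timestamps. It assigns each click to the one-hour window that contains it and counts per user and window. Flink would emit a window's rows once the window has ended, whereas this sketch simply groups a finished list.

```python
from collections import Counter
from datetime import datetime, timedelta

WINDOW = timedelta(hours=1)

def window_end(ts):
    """End of the one-hour tumbling window [start, start + 1h) containing ts."""
    start = ts.replace(minute=0, second=0, microsecond=0)
    return start + WINDOW

def tumbling_counts(clicks):
    """Count clicks per (user, window end)."""
    counts = Counter()
    for user, ts in clicks:
        counts[(user, window_end(ts))] += 1
    return counts

# Hypothetical click times spread over two windows.
clicks = [
    ("Mary", datetime(2022, 4, 23, 12, 0, 0)),
    ("Bob", datetime(2022, 4, 23, 12, 0, 0)),
    ("Mary", datetime(2022, 4, 23, 12, 2, 0)),
    ("Liz", datetime(2022, 4, 23, 12, 55, 0)),
    ("Bob", datetime(2022, 4, 23, 13, 1, 0)),
    ("Mary", datetime(2022, 4, 23, 13, 30, 0)),
]
result = tumbling_counts(clicks)
```

Because a finished window never receives new rows, its counts are final, so rows are only ever added to the result table and never updated.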

3.4 Converting dynamic tables to data streams

Like a regular database table, a dynamic table is continuously modified by INSERT, UPDATE, and DELETE changes. When a dynamic table is converted to a stream or written to an external system, these changes must be encoded. Flink's Table API and SQL support three ways of encoding the changes of a dynamic table.

1. Append-only stream (that is, insert-only)

A dynamic table that is modified only by INSERT changes can be converted directly into an append-only stream. The stream emits every row newly inserted into the dynamic table.

2. Retract stream

A dynamic table that receives inserts, updates, and deletes is converted to a retract stream.

A retract stream contains two types of messages: add messages and retract messages.

A dynamic table is converted to a retract stream by encoding an INSERT as an add message, a DELETE as a retract message, and an UPDATE as a retract message for the changed (old) row followed by an add message for the updated (new) row.
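As an illustration, the sketch below encodes the result of a per-user click count as a retract stream. It is plain Python rather than Flink code, and the message labels "add" and "retract" as well as the sample clicks are assumptions made for the example.

```python
def to_retract_stream(clicks):
    """Encode the changes of a per-user count table as add/retract messages."""
    counts = {}
    for user, _url in clicks:
        old = counts.get(user)
        if old is not None:
            # UPDATE: first retract the old row ...
            yield ("retract", (user, old))
        counts[user] = (old or 0) + 1
        # ... then add the new row (an INSERT is just an add).
        yield ("add", (user, counts[user]))

clicks = [("Mary", "./home"), ("Bob", "./cart"), ("Mary", "./prod?id=1")]
messages = list(to_retract_stream(clicks))
```

Mary's second click produces two messages, a retract for (Mary, 1) and an add for (Mary, 2), so a consumer can rebuild the table without needing a key.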

3. Upsert stream

An upsert stream contains two types of messages: upsert messages and delete messages. A dynamic table that is converted to an upsert stream must have a unique key. A dynamic table with a unique key is converted to a stream by encoding INSERT and UPDATE changes as upsert messages and DELETE changes as delete messages.
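A comparable sketch for the upsert encoding, again in plain Python with invented names: `to_upsert_stream` encodes a list of table changes, and `apply_upserts` shows how a consumer could rebuild the table from the messages using the unique key.

```python
def to_upsert_stream(changes):
    """Encode (op, key, value) changes as upsert/delete messages."""
    for op, key, value in changes:
        if op in ("INSERT", "UPDATE"):
            yield ("upsert", key, value)
        elif op == "DELETE":
            yield ("delete", key, None)
        else:
            raise ValueError(f"unknown change type: {op}")

def apply_upserts(messages):
    """Rebuild the keyed table on the consumer side."""
    table = {}
    for kind, key, value in messages:
        if kind == "upsert":
            table[key] = value      # insert or overwrite by key
        else:
            table.pop(key, None)    # delete by key
    return table

# Hypothetical changes to a table keyed by user name.
changes = [
    ("INSERT", "Mary", 1),
    ("INSERT", "Bob", 1),
    ("UPDATE", "Mary", 2),
    ("DELETE", "Bob", None),
]
messages = list(to_upsert_stream(changes))
final_table = apply_upserts(messages)
```

An update here costs one message instead of the two a retract stream needs, at the price of requiring the consumer to know the unique key.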

4. Query restrictions

Continuous queries on unbounded data streams are subject to some restrictions, mainly in the following two respects:

(1) State size

A continuous query on an unbounded stream is often expected to run for weeks, months, or longer, so the total amount of data it processes can be very large. The earlier query that counts visits per user, for example, must keep a count in state for every user. If only registered users are counted the state stays modest, but if every unregistered visitor is assigned a unique user name the state becomes very large and, over time, may cause the query to fail.

SELECT `user`, COUNT(url)
FROM clicks
GROUP BY `user`;
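The growth of that state can be illustrated with a short plain-Python sketch. The query has to keep one counter per distinct user, so the number of distinct keys is a rough proxy for state size. The user names and the figure of 1000 clicks are made up.

```python
def distinct_keys_seen(users):
    """Yield the number of distinct keys held in state after each event."""
    seen = set()
    for user in users:
        seen.add(user)
        yield len(seen)

# 1000 clicks from two registered users: the state stays at two entries.
registered = ["Mary", "Bob"] * 500
registered_state = list(distinct_keys_seen(registered))[-1]

# 1000 clicks where every visitor gets a fresh name: one entry per click.
anonymous = [f"guest-{i}" for i in range(1000)]
anonymous_state = list(distinct_keys_seen(anonymous))[-1]
```

With the same number of input rows, the second workload holds 500 times as many entries, and on an unbounded stream it keeps growing.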

(2) Cost of computing updates

For some queries, adding or updating a single input record forces a large part of the already emitted result rows to be recomputed and updated. Such queries are poorly suited to running as continuous queries. The following query, for example, computes a RANK for each user based on the time of that user's last click. As soon as the clicks table receives a new row, the user's lastAction is updated and a new rank has to be computed. Because two rows cannot have the same rank, all lower-ranked rows have to be updated as well.

SELECT `user`, RANK() OVER (ORDER BY lastAction)
FROM (
  SELECT `user`, MAX(cTime) AS lastAction FROM clicks GROUP BY `user`
);
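The cost can be made visible with a small plain-Python simulation that recomputes the ranking after every click and counts how many result rows changed. This is a sketch only: it assumes distinct integer timestamps, so ranks equal positions, and the users u1 to u4 are invented.

```python
def ranks(last_action):
    """Rank users by ascending lastAction (1 = least recently active)."""
    ordered = sorted(last_action, key=last_action.get)
    return {user: position + 1 for position, user in enumerate(ordered)}

def changed_rows_per_click(clicks):
    """For each click, count result rows that are new or got a new rank."""
    last_action, previous, changed = {}, {}, []
    for user, ts in clicks:
        last_action[user] = max(ts, last_action.get(user, ts))
        current = ranks(last_action)
        changed.append(sum(1 for u, r in current.items() if previous.get(u) != r))
        previous = current
    return changed

# Four users click once each, then the oldest one (u1) clicks again.
clicks = [("u1", 1), ("u2", 2), ("u3", 3), ("u4", 4), ("u1", 5)]
changes = changed_rows_per_click(clicks)
```

The first four clicks each touch one result row, but the fifth click moves u1 from first to last place and shifts every other user up by one, so a single input row rewrites the whole result table.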

Copyright notice

This article was written by [Big data Institute]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204230723184774.html

边栏推荐

- Talent Plan 学习营初体验:交流+坚持 开源协作课程学习的不二路径

- Chris LATTNER, father of llvm: the golden age of compilers

- Concave hull acquisition method based on convex hull of point cloud

- Notes on xctf questions

- 求简单类型的矩阵和

- Valgrind et kcachegrind utilisent l'analyse d'exécution

- 是否完全二叉搜索树 (30 分)

- Trc20 fund collection solution based on thinkphp5 version

- Solidity 问题汇总

- dataBinding中使用include

猜你喜欢

L2-022 rearrange linked list (25 points) (map + structure simulation)

2021 Li Hongyi's adaptive learning rate of machine learning

Multi view depth estimation by fusing single view depth probability with multi view geometry

Four pictures to understand some basic usage of Matplotlib

Consensus Token:web3. 0 super entrance of ecological flow

Share the office and improve the settled experience

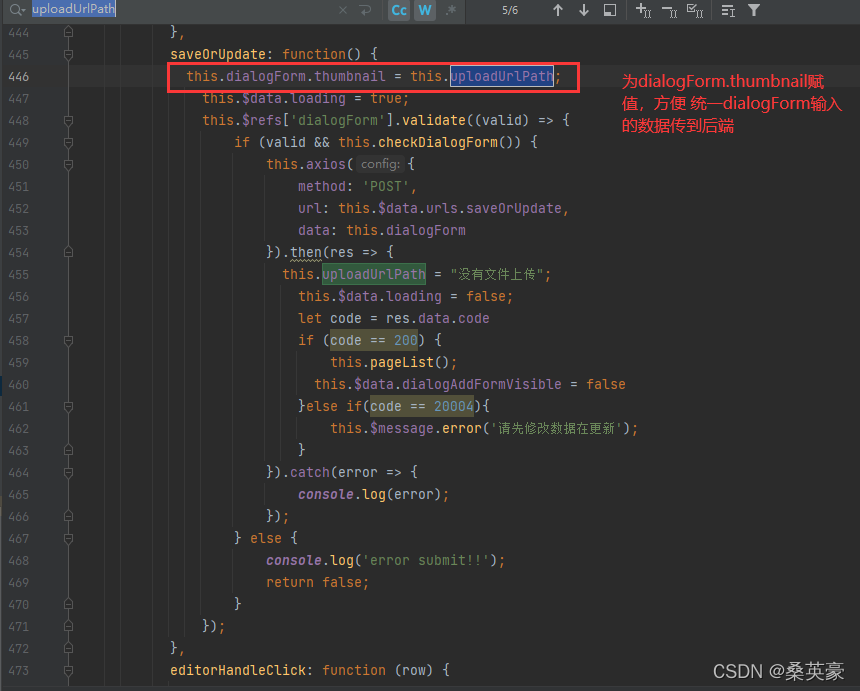

Project upload part

搞不懂时间、时间戳、时区,快来看这篇

MySQL small exercise (only suitable for beginners, non beginners are not allowed to enter)

bashdb下载安装

随机推荐

Mini - exercice MySQL (seulement pour les débutants, pas pour les non - débutants)

On array replication

使用flask和h5搭建网站/应用的简要步骤

Search tree judgment (25 points)

Harbor enterprise image management system

npm ERR! network

bashdb下载安装

Find the sum of simple types of matrices

MySQL小练习(仅适合初学者,非初学者勿进)

Common errors of VMware building es8

MySQL小練習(僅適合初學者,非初學者勿進)

Notes d'apprentissage oneflow: de functor à opexprinterpreter

Wechat: get the owner of a single tag

Illegal character in scheme name at index 0:

论文阅读《Multi-View Depth Estimation by Fusing Single-View Depth Probability with Multi-View Geometry》

idea打包 jar文件

是否同一棵二叉搜索树 (25 分)

Flink reads MySQL and PgSQL at the same time, and the program will get stuck without logs

ONEFLOW learning notes: from functor to opexprinter

Idea is configured to connect to the remote database mysql, or Navicat fails to connect to the remote database (solved)