[Paper reading] [3D object detection] Voxel Transformer for 3D Object Detection

2022-04-23 04:37:00 【Lukas88664】

Paper title: Voxel Transformer for 3D Object Detection

ICCV 2021

Most current approaches apply transformers to the point cloud itself: for example, the points are first grouped, and a transformer is then run within each group. This paper proposes a voxel-based transformer that can be plugged into voxel-based detectors and makes it easy to extract global features from 3D voxels.

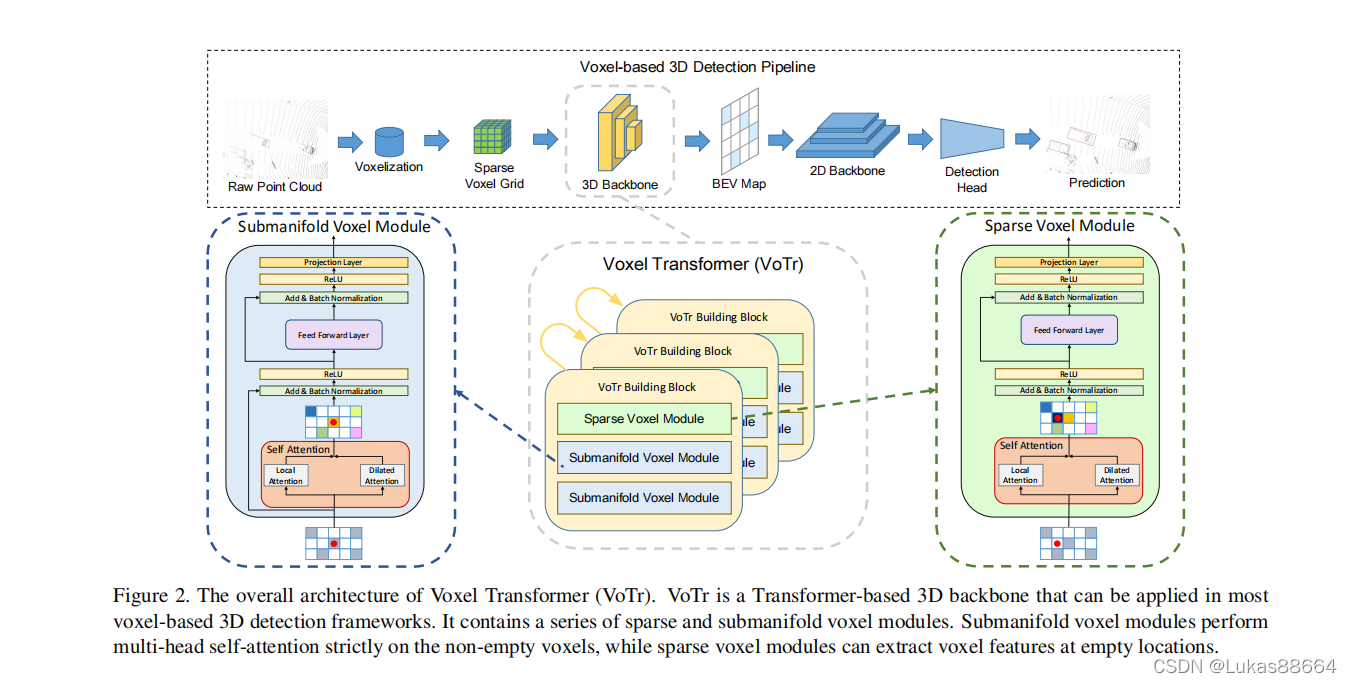

As usual, the figure comes first!

The main innovation of the paper is the 3D backbone, which means this module can be applied to any voxel-based single-stage or two-stage detector.

3D convolution on point-cloud voxels mainly falls into two categories: sparse and submanifold.

The two operations are essentially the same; they differ only in which voxels they attend to. For both kinds of 3D operation, see the SECOND 3D object detector.

Put simply, sparse convolution is used for downsampling, while submanifold convolution is a 3D convolution that preserves sparsity.
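
The difference between the two convolution types comes down to which output sites become active. Below is a minimal sketch of that idea, assuming integer voxel coordinates, a 3x3x3 kernel, stride 2 and no padding; the function names are hypothetical and this is not the SECOND implementation.

```python
from itertools import product

def submanifold_output_sites(active):
    """Submanifold convolution: outputs exist only where inputs exist."""
    return set(active)

def sparse_output_sites(active, kernel=3, stride=2):
    """Strided sparse convolution: an output site becomes active if any
    active input falls inside its kernel window (no padding, for simplicity)."""
    out = set()
    for voxel in active:
        ranges = []
        for c in voxel:
            # Output index o covers inputs o*stride .. o*stride + kernel - 1.
            lo = max(0, -(-(c - kernel + 1) // stride))  # ceiling division
            hi = c // stride
            ranges.append(range(lo, hi + 1))
        out.update(product(*ranges))
    return out

active = {(0, 0, 0), (4, 4, 4)}
print(sorted(submanifold_output_sites(active)))
print(len(sparse_output_sites(active)))
```

The submanifold variant keeps the active set unchanged, while the strided sparse variant produces a coarser grid whose active set can grow around each input voxel.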

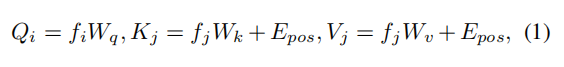

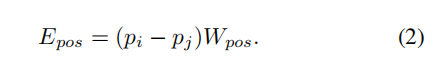

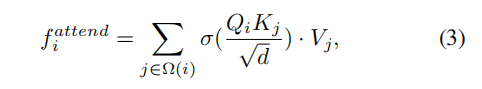

For each non-empty voxel, a transformer operation is run over its attending voxels (what an attending voxel is will be defined below). For the positional encoding, a relative positional encoding is chosen. Readers with a transformer background will understand it from the formula.
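
The attention step described above can be sketched in plain Python. This is a minimal sketch, assuming standard scaled dot-product attention with the relative positional encoding added to the keys; the weight matrices `W_q`, `W_k`, `W_v`, `W_pos` and the function names are hypothetical, and the exact form used in the paper may differ.

```python
import math

def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def voxel_attention(f_q, p_q, attending, W_q, W_k, W_v, W_pos):
    """Attend from one query voxel to its attending voxels.

    f_q, p_q  : feature and integer position of the query voxel
    attending : list of (feature, position) pairs
    """
    q = matvec(W_q, f_q)
    d = len(q)
    keys, values = [], []
    for f_j, p_j in attending:
        # Relative positional encoding from the offset p_q - p_j.
        rel = [a - b for a, b in zip(p_q, p_j)]
        e_pos = matvec(W_pos, rel)
        keys.append([a + b for a, b in zip(matvec(W_k, f_j), e_pos)])
        values.append(matvec(W_v, f_j))
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
    weights = softmax(scores)
    return [sum(w * v[c] for w, v in zip(weights, values))
            for c in range(len(values[0]))]

I2 = [[1.0, 0.0], [0.0, 1.0]]
no_pos = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
out = voxel_attention([1.0, 0.0], (0, 0, 0),
                      [([2.0, 3.0], (1, 0, 0))], I2, I2, I2, no_pos)
print(out)
```

With a single attending voxel the softmax weight is 1, so the output is just that voxel's value vector.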

For the submanifold layer

Its querying voxels are all the non-empty voxels. First, the two kinds of attention are applied, the output is added to the input (a residual connection), and batch norm follows. The result is then fed into the feed-forward layer, in submanifold fashion, followed by another residual connection and a batch norm layer, then a ReLU activation, and finally a projection. Note that batch norm is used here and dropout is removed; the authors believe this helps the learning process. (The two kinds of attention mentioned in the paper are explained below.)
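
The ordering of the sub-layers described above can be written down as a small structural sketch. It assumes each sub-layer is passed in as a callable acting on one feature vector; `submanifold_block` and its arguments are hypothetical names, and the normalisation callables only stand in for batch norm.

```python
def add(a, b):
    """Element-wise sum, used for the residual connections."""
    return [x + y for x, y in zip(a, b)]

def relu(v):
    return [max(0.0, x) for x in v]

def submanifold_block(x, attention, feed_forward, norm1, norm2, proj):
    """Attention -> residual -> norm -> feed-forward -> residual -> norm
    -> ReLU -> projection. No dropout is applied anywhere."""
    h = norm1(add(x, attention(x)))
    h = norm2(add(h, feed_forward(h)))
    return proj(relu(h))

# Toy usage with trivial sub-layers, only to show the data flow.
zero = lambda v: [0.0] * len(v)
identity = lambda v: v
result = submanifold_block([1.0, -2.0], zero, zero, identity, identity, identity)
print(result)
```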

For the sparse layer

It has to run queries at some empty voxels, and these voxels have no features. An estimation function is used to obtain them: the paper says this could be, for example, interpolation over the attending voxels, and the network simply uses max pooling. Because the output of the self-attention layer differs in structure from its input, the network drops the first residual connection.

Next, the two attention modules.

The two attention modules differ mainly in which attending voxels they use.
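
The max-pooling estimate for an empty query voxel can be sketched as a channel-wise maximum over the features of its attending voxels. This is a minimal sketch under that assumption; `estimate_query_feature` is a hypothetical name.

```python
def estimate_query_feature(attending_features):
    """Channel-wise max pooling over the attending voxels' feature vectors."""
    if not attending_features:
        raise ValueError("an empty query voxel needs at least one attending voxel")
    return [max(channel) for channel in zip(*attending_features)]

pooled = estimate_query_feature([[1.0, 5.0], [3.0, 2.0]])
print(pooled)
```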

local attention

The voxels that take part in this module are those near the current query voxel, roughly all the non-empty voxels within a convolution-kernel-sized window.

A transformer operation is applied to them. For the current query voxel, the resulting feature is a fusion of all voxels in the current receptive field, and compared with convolution the transformer takes in features from nearby voxels more readily.
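
Selecting the attending voxels for local attention can be sketched as a window search around the query. This sketch assumes integer voxel coordinates stored in a Python set and a window of `radius` voxels along each axis; the names are hypothetical.

```python
from itertools import product

def local_attending(query, non_empty, radius=1):
    """Non-empty voxels within `radius` of the query along every axis.
    The query itself is included when it is non-empty."""
    found = []
    for offset in product(range(-radius, radius + 1), repeat=3):
        candidate = tuple(q + o for q, o in zip(query, offset))
        if candidate in non_empty:
            found.append(candidate)
    return found

non_empty = {(0, 0, 0), (1, 0, 0), (5, 5, 5)}
neighbours = local_attending((0, 0, 0), non_empty)
print(sorted(neighbours))
```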

dilated attention

This part is analogous to dilated convolution, and the name is similar too. Its main purpose is to enlarge the receptive field:

The paper says that with a reasonable choice of attending voxels, a single sparse attention can reach a query range of up to 15 m.
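
One way to picture dilated attention is as a set of strided shells around the query: each range samples offsets out to some radius with a given stride and drops the inner part already covered. The sketch below assumes that form; the `(r_start, r_end, stride)` triples and function names are hypothetical illustration values, not the paper's settings.

```python
from itertools import product

def strided_window(radius, stride):
    """All 3D offsets on a grid of the given stride within [-radius, radius]."""
    steps = [k * stride for k in range(-(radius // stride), radius // stride + 1)]
    return set(product(steps, repeat=3))

def dilated_offsets(ranges):
    """Union of shells: window(r_end) minus window(r_start) for each range."""
    offsets = set()
    for r_start, r_end, stride in ranges:
        offsets |= strided_window(r_end, stride) - strided_window(r_start, stride)
    return offsets

shell = dilated_offsets([(2, 4, 2)])
print(len(shell), max(max(abs(c) for c in off) for off in shell))
```

Only the offsets that land on non-empty voxels would actually be attended to, so the effective number of attending voxels is much smaller than the size of the shell.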

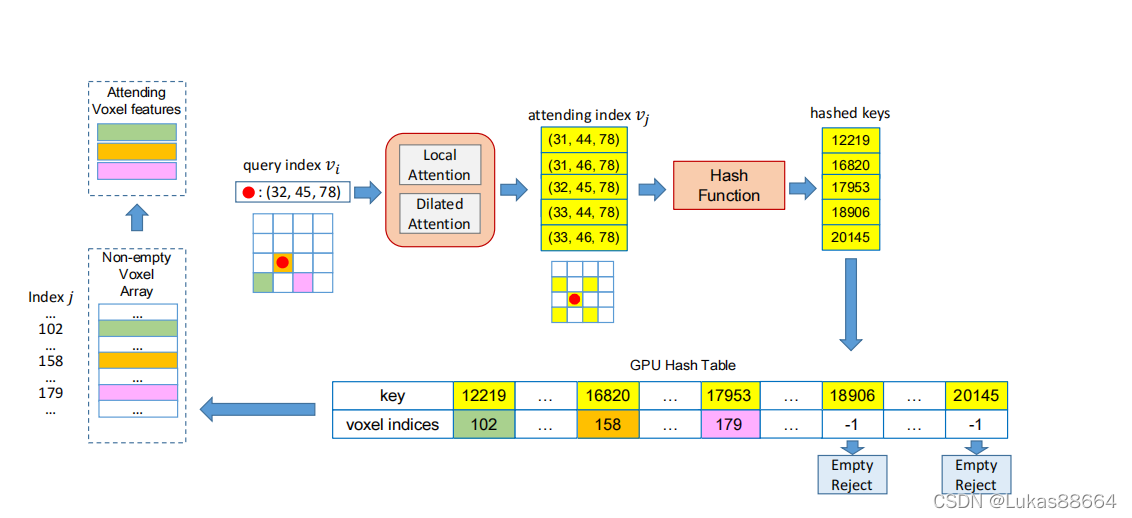

Finally, the operations above can be understood together with the figure from the paper:

After these two operations, local features are fused with features from a wider receptive field.

The authors then propose a fast voxel query method for retrieving non-empty voxels. The main idea is to build a code (an index) for the non-empty voxels. Later, when attention is run for a given voxel, its attending voxels are fetched directly by looking up their codes, which significantly reduces the model's complexity:
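
The lookup idea can be sketched with an ordinary hash table. This is a minimal sketch, assuming a Python dict stands in for the table and that each non-empty voxel maps to its row in a feature array; `build_voxel_table` and `query_attending` are hypothetical names.

```python
def build_voxel_table(coords):
    """Map each non-empty voxel coordinate to its row index in the feature array."""
    return {tuple(c): i for i, c in enumerate(coords)}

def query_attending(table, query, offsets):
    """Return row indices of the non-empty voxels found at query + offset.
    Each lookup is a constant-time hash query instead of a scan over all voxels."""
    hits = []
    for off in offsets:
        idx = table.get(tuple(q + o for q, o in zip(query, off)))
        if idx is not None:
            hits.append(idx)
    return hits

coords = [(0, 0, 0), (1, 0, 0), (0, 2, 0)]
table = build_voxel_table(coords)
rows = query_attending(table, (0, 0, 0), [(1, 0, 0), (0, 1, 0), (0, 2, 0)])
print(rows)
```

The cost per query then depends on the number of candidate offsets rather than on the total number of non-empty voxels.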

The results are very good :

The ablation experiments compare the necessity of the different attention operations:

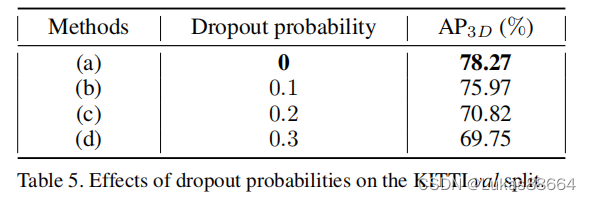

Necessity of the dropout layer:

Number of attending voxels:

Finally, inference speed and model size are compared against conventional models.

This is the first time I have seen a transformer applied to voxels, which is a fairly new idea.

Copyright notice

This article was written by [Lukas88664]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204230407539009.html
