
Common processing of point cloud dataset

2022-04-23 19:15:00 【Incense Dad】


Dataset augmentation

Affine transformation

Translation transformation

import numpy as np

import random

# file name

old_file=r"rabbit.txt"

new_file=r"rabbit_change.txt"

# Translation parameters

x_offset=random.uniform(-10, 10)

y_offset=random.uniform(-10, 10)

z_offset=random.uniform(-10, 10)

# Transformation matrix

transformation_matrix=np.array([

[1,0,0,x_offset],

[0,1,0,y_offset],

[0,0,1,z_offset],

[0,0,0,1]

])

# Load the file

old_array=np.loadtxt(old_file)

old_xyz=old_array[:,:3]

# Append a column of ones to form homogeneous coordinates

ones_data=np.ones(old_xyz.shape[0])

old_xyz=np.insert(old_xyz,3,values=ones_data,axis=1)

# Transform data

new_xyz = np.dot(transformation_matrix,old_xyz.T)

new_array=np.concatenate((new_xyz.T[:,:3],old_array[:,3:]),axis=1)

np.savetxt(new_file,new_array,fmt='%.06f')

Rotation transformation

import numpy as np

import random

# file name

old_file=r"rabbit.txt"

new_file=r"rabbit_change.txt"

# Rotation Angle

roate_x=random.uniform(-np.pi/10, np.pi/10)

roate_y=random.uniform(-np.pi/10, np.pi/10)

roate_z=random.uniform(-np.pi/10, np.pi/10)

roate_x_matrix=np.array([

[1,0,0,0],

[0,np.cos(roate_x),-np.sin(roate_x),0],

[0,np.sin(roate_x),np.cos(roate_x),0],

[0,0,0,1]

])

roate_y_matrix=np.array([

[np.cos(roate_y),0,np.sin(roate_y),0],

[0,1,0,0],

[-np.sin(roate_y),0,np.cos(roate_y),0],

[0,0,0,1]

])

roate_z_matrix=np.array([

[np.cos(roate_z),-np.sin(roate_z),0,0],

[np.sin(roate_z),np.cos(roate_z),0,0],

[0,0,1,0],

[0,0,0,1]

])

# Transformation matrix

transformation_matrix=roate_z_matrix.dot(roate_y_matrix).dot(roate_x_matrix)

# Load the file

old_array=np.loadtxt(old_file)

old_xyz=old_array[:,:3]

# Append a column of ones to form homogeneous coordinates

ones_data=np.ones(old_xyz.shape[0])

old_xyz=np.insert(old_xyz,3,values=ones_data,axis=1)

# Transform data

new_xyz = np.dot(transformation_matrix,old_xyz.T)

new_array=np.concatenate((new_xyz.T[:,:3],old_array[:,3:]),axis=1)

np.savetxt(new_file,new_array,fmt='%.06f')
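
As a quick sanity check on the rotation matrices above, the sketch below rebuilds the same Z·Y·X composition and verifies that the result is a proper rotation (orthonormal, determinant 1) and that it preserves each point's distance from the origin. The helper name `rotation_matrix_zyx` and the random test points are illustrative assumptions, not part of the original script.

```python
import numpy as np

def rotation_matrix_zyx(rx, ry, rz):
    """Hypothetical helper: 4x4 homogeneous rotation Rz @ Ry @ Rx (angles in radians)."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    rot_x = np.array([[1, 0, 0, 0], [0, cx, -sx, 0], [0, sx, cx, 0], [0, 0, 0, 1]])
    rot_y = np.array([[cy, 0, sy, 0], [0, 1, 0, 0], [-sy, 0, cy, 0], [0, 0, 0, 1]])
    rot_z = np.array([[cz, -sz, 0, 0], [sz, cz, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]])
    return rot_z @ rot_y @ rot_x

rng = np.random.default_rng(0)
angles = rng.uniform(-np.pi / 10, np.pi / 10, size=3)
R = rotation_matrix_zyx(*angles)

points = rng.normal(size=(100, 3))      # made-up points, not the rabbit data
rotated = points @ R[:3, :3].T          # rotate every row vector

is_orthonormal = np.allclose(R[:3, :3] @ R[:3, :3].T, np.eye(3))
has_unit_det = np.isclose(np.linalg.det(R[:3, :3]), 1.0)
preserves_norms = np.allclose(
    np.linalg.norm(points, axis=1), np.linalg.norm(rotated, axis=1)
)
print(is_orthonormal, has_unit_det, preserves_norms)
```

If any of the three checks fails, a sign or an index in one of the axis matrices is most likely wrong.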

Scale transformation

import numpy as np

import random

# file name

old_file=r"rabbit.txt"

new_file=r"rabbit_change.txt"

# Scaling parameters

scale=0.1

# Transformation matrix

transformation_matrix=np.array([

[scale,0,0,0],

[0,scale,0,0],

[0,0,scale,0],

[0,0,0,1]

])

# Load the file

old_array=np.loadtxt(old_file)

old_xyz=old_array[:,:3]

# Append a column of ones to form homogeneous coordinates

ones_data=np.ones(old_xyz.shape[0])

old_xyz=np.insert(old_xyz,3,values=ones_data,axis=1)

# Transform data

new_xyz = np.dot(transformation_matrix,old_xyz.T)

new_array=np.concatenate((new_xyz.T[:,:3],old_array[:,3:]),axis=1)

np.savetxt(new_file,new_array,fmt='%.06f')

Combined affine transformation

The three transformations above can be combined into a single affine transformation:

import numpy as np

import random

# file name

old_file=r"rabbit.txt"

new_file=r"rabbit_change.txt"

# Translation parameters

x_offset=random.uniform(-10, 10)

y_offset=random.uniform(-10, 10)

z_offset=random.uniform(-10, 10)

# Scaling parameters

scale=0.1

# Rotation Angle

roate_x=random.uniform(-np.pi/10, np.pi/10)

roate_y=random.uniform(-np.pi/10, np.pi/10)

roate_z=random.uniform(-np.pi/10, np.pi/10)

roate_x_matrix=np.array([

[1,0,0,0],

[0,np.cos(roate_x),-np.sin(roate_x),0],

[0,np.sin(roate_x),np.cos(roate_x),0],

[0,0,0,1]

])

roate_y_matrix=np.array([

[np.cos(roate_y),0,np.sin(roate_y),0],

[0,1,0,0],

[-np.sin(roate_y),0,np.cos(roate_y),0],

[0,0,0,1]

])

roate_z_matrix=np.array([

[np.cos(roate_z),-np.sin(roate_z),0,0],

[np.sin(roate_z),np.cos(roate_z),0,0],

[0,0,1,0],

[0,0,0,1]

])

# Transformation matrix: points are rotated first, then scaled, then translated

transformation_matrix=np.array([

[scale,0,0,x_offset],

[0,scale,0,y_offset],

[0,0,scale,z_offset],

[0,0,0,1]

]).dot(roate_z_matrix).dot(roate_y_matrix).dot(roate_x_matrix)

# Load the file

old_array=np.loadtxt(old_file)

old_xyz=old_array[:,:3]

# Append a column of ones to form homogeneous coordinates

ones_data=np.ones(old_xyz.shape[0])

old_xyz=np.insert(old_xyz,3,values=ones_data,axis=1)

# Transform data

new_xyz = np.dot(transformation_matrix,old_xyz.T)

new_array=np.concatenate((new_xyz.T[:,:3],old_array[:,3:]),axis=1)

np.savetxt(new_file,new_array,fmt='%.06f')
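
The order of the matrix product matters: with column vectors, the rightmost matrix is applied first, so the combined matrix above rotates each point, then scales it, then translates it. The sketch below checks this on a couple of made-up points, using a single rotation about z for brevity. The function name `apply_affine` and all sample values are assumptions for illustration.

```python
import numpy as np

def apply_affine(xyz, matrix):
    """Apply a 4x4 homogeneous transform to an (N, 3) array of points."""
    homogeneous = np.hstack([xyz, np.ones((xyz.shape[0], 1))])
    return (matrix @ homogeneous.T).T[:, :3]

scale = 0.1
offset = np.array([1.0, -2.0, 3.0])   # illustrative translation
angle = np.pi / 12                    # illustrative rotation about z

rot_z = np.array([
    [np.cos(angle), -np.sin(angle), 0, 0],
    [np.sin(angle),  np.cos(angle), 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
])
scale_translate = np.array([
    [scale, 0, 0, offset[0]],
    [0, scale, 0, offset[1]],
    [0, 0, scale, offset[2]],
    [0, 0, 0, 1],
])
combined = scale_translate @ rot_z

points = np.array([[1.0, 0.0, 0.0], [0.0, 2.0, 5.0]])
via_matrix = apply_affine(points, combined)
# Same operations written out one at a time: rotate, scale, translate
step_by_step = scale * (points @ rot_z[:3, :3].T) + offset
print(np.allclose(via_matrix, step_by_step))
```

Swapping the factors (rotation on the left) would instead rotate the already translated points, which moves the cloud along an arc around the origin.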

Related reading on affine transformations: "PCL affine transformation: translating and rotating a point cloud".

Add noise

import numpy as np

# file name

old_file=r"rabbit.txt"

new_file=r"rabbit_change.txt"

def add_noise(point, sigma=0.1, clip=0.1):

    # Add Gaussian noise (standard deviation sigma), clipped to [-clip, clip], to every coordinate

    point = point.reshape(-1,3)

    Row, Col = point.shape

    noisy_point = np.clip(sigma * np.random.randn(Row, Col), -1*clip, clip)

    noisy_point += point

    return noisy_point

# Load the file

old_array=np.loadtxt(old_file)

old_xyz=old_array[:,:3]

new_xyz=add_noise(old_xyz)

new_array=np.concatenate((new_xyz,old_array[:,3:]),axis=1)

np.savetxt(new_file,new_array,fmt='%.06f')

Downsampling

import numpy as np

# file name

old_file=r"rabbit.txt"

new_file=r"rabbit_change.txt"

# Voxel filtering

def voxel_filter(point_cloud, leaf_size):

    filtered_points = []

    # Compute the bounding box

    x_min, y_min, z_min = np.amin(point_cloud, axis=0) # Minimum along x, y, z

    x_max, y_max, z_max = np.amax(point_cloud, axis=0) # Maximum along x, y, z

    # Compute the voxel grid dimensions

    Dx = (x_max - x_min) // leaf_size + 1

    Dy = (y_max - y_min) // leaf_size + 1

    Dz = (z_max - z_min) // leaf_size + 1

    # Compute the voxel index of every point

    h = list() # h holds one flattened voxel index per point

    for i in range(len(point_cloud)):

        hx = (point_cloud[i][0] - x_min) // leaf_size

        hy = (point_cloud[i][1] - y_min) // leaf_size

        hz = (point_cloud[i][2] - z_min) // leaf_size

        h.append(hx + hy * Dx + hz * Dx * Dy)

    h = np.array(h)

    # Group the points by voxel

    h_indice = np.argsort(h) # Indices that sort h in ascending order

    h_sorted = h[h_indice]

    begin = 0

    for i in range(len(h_sorted) - 1):

        if h_sorted[i] != h_sorted[i + 1]:

            point_idx = h_indice[begin: i + 1]

            filtered_points.append(np.mean(point_cloud[point_idx], axis=0))

            begin = i+1

    # The loop above never closes the last voxel, so add its centroid here

    if len(h_sorted) > 0:

        filtered_points.append(np.mean(point_cloud[h_indice[begin:]], axis=0))

    # Convert the list of centroids to an array and return it

    filtered_points = np.array(filtered_points, dtype=np.float64)

    return filtered_points

# Load the file

old_array=np.loadtxt(old_file)

old_xyz=old_array[:,:3]

# Downsample with a leaf size of 1 and save the file

new_xyz=voxel_filter(old_xyz, 1)

np.savetxt(new_file,new_xyz,fmt='%.06f')
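
The per-point Python loop above can become slow on large clouds. A vectorized variant, sketched below, groups points by voxel with `np.unique` and averages each group with `np.bincount`. This swaps the sort-and-scan step for a different grouping technique; the name `voxel_filter_vectorized` and the five sample points are hypothetical.

```python
import numpy as np

def voxel_filter_vectorized(point_cloud, leaf_size):
    """Return the centroid of the points that fall in each occupied voxel."""
    mins = point_cloud.min(axis=0)
    # Integer voxel coordinates of every point along x, y, z
    voxel_ijk = np.floor((point_cloud - mins) / leaf_size).astype(np.int64)
    # One group id per distinct occupied voxel
    _, inverse = np.unique(voxel_ijk, axis=0, return_inverse=True)
    inverse = inverse.ravel()  # shape differs between NumPy versions
    counts = np.bincount(inverse)
    # Sum each coordinate per group, then divide by the group size
    centroids = np.stack(
        [np.bincount(inverse, weights=point_cloud[:, d]) / counts for d in range(3)],
        axis=1,
    )
    return centroids

cloud = np.array([
    [0.1, 0.1, 0.1],
    [0.3, 0.2, 0.4],   # same voxel as the first point when leaf_size is 1
    [2.5, 0.0, 0.0],
    [2.6, 0.2, 0.1],   # same voxel as the third point
    [0.0, 3.0, 3.0],
])
downsampled = voxel_filter_vectorized(cloud, leaf_size=1.0)
print(downsampled.shape)
```

Grouping on the integer (i, j, k) triple also avoids building the flattened index `hx + hy * Dx + hz * Dx * Dy`.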

Data normalization

Centering

centroid = np.mean(pc, axis=0)

pc = pc - centroid

Scale normalization

m = np.max(np.sqrt(np.sum(pc**2, axis=1)))

pc = pc / m
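
The two snippets above assume `pc` is an (N, 3) array of point coordinates. Put together as one function, a minimal sketch might look like this; the name `normalize_point_cloud`, the zero-radius guard, and the random input are additions for illustration.

```python
import numpy as np

def normalize_point_cloud(pc):
    """Center an (N, 3) cloud at the origin and scale it into the unit sphere."""
    pc = np.asarray(pc, dtype=np.float64)
    centroid = np.mean(pc, axis=0)
    pc = pc - centroid
    # Largest distance from the centroid to any point
    m = np.max(np.sqrt(np.sum(pc ** 2, axis=1)))
    if m > 0:  # guard against a degenerate cloud where all points coincide
        pc = pc / m
    return pc

rng = np.random.default_rng(42)
raw = rng.uniform(-50, 50, size=(1000, 3))  # made-up data
normalized = normalize_point_cloud(raw)
print(np.linalg.norm(normalized, axis=1).max())
```

After normalization the centroid sits at the origin and the farthest point lies on the unit sphere, which keeps clouds of different sizes comparable.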

Copyright notice

This article was written by [Incense Dad]. Please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/04/202204231913486893.html

边栏推荐

- Installation, use and problem summary of binlog2sql tool

- MySQL学习第五弹——事务及其操作特性详解

- I just want to leave a note for myself

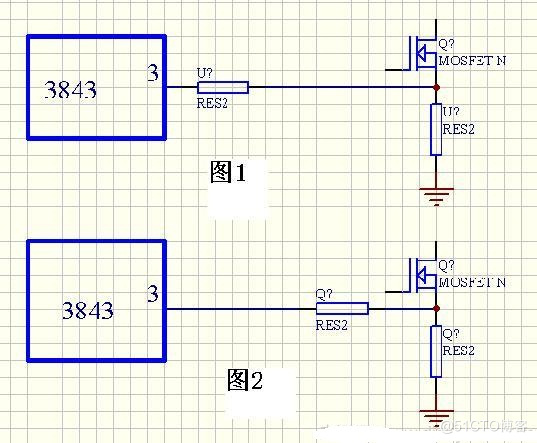

- Switching power supply design sharing and power supply design skills diagram

- Introduction to micro build low code zero Foundation (lesson 3)

- JS calculation time difference

- Raspberry pie 18b20 temperature

- openlayers 5.0 加载arcgis server 切片服务

- Zlib realizes streaming decompression

- mysql通过binlog恢复或回滚数据

猜你喜欢

Wechat video extraction and receiving file path

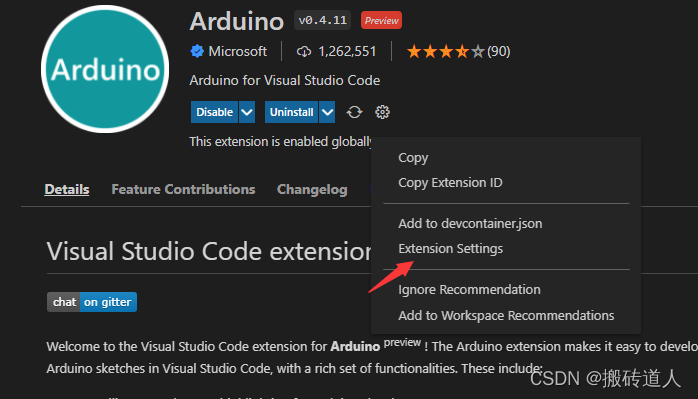

Using Visual Studio code to develop Arduino

![[记录]TypeError: this.getOptions is not a function](/img/c9/0d92891b6beec3d6085bd3da516f00.png)

[记录]TypeError: this.getOptions is not a function

Switching power supply design sharing and power supply design skills diagram

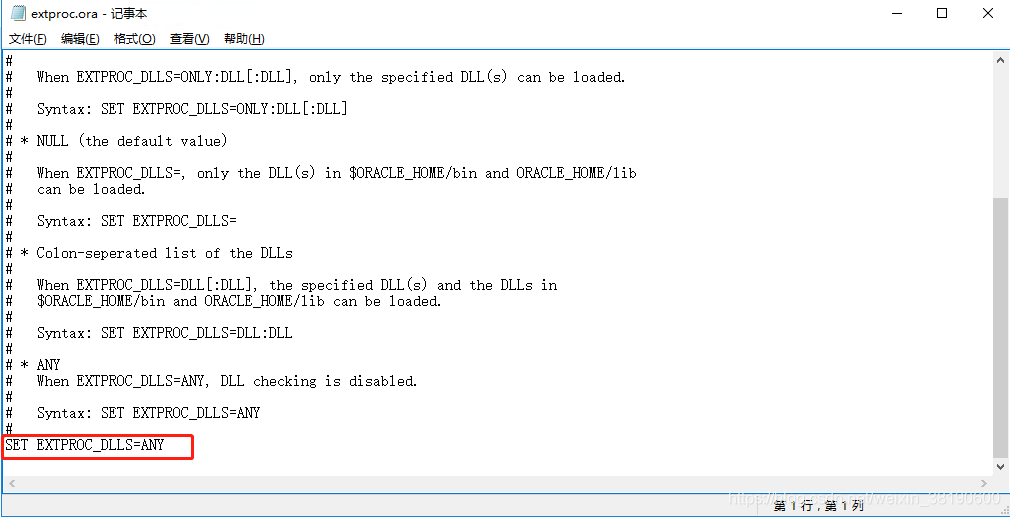

Oracle configuration st_ geometry

Matlab 2019 installation of deep learning toolbox model for googlenet network

简化路径(力扣71)

Introduction to micro build low code zero Foundation (lesson 3)

![[report] Microsoft: application of deep learning methods in speech enhancement](/img/29/2d2addd826359fdb0920e06ebedd29.png)

[report] Microsoft: application of deep learning methods in speech enhancement

【C语言进阶11——字符和字符串函数及其模拟实现(2))】

随机推荐

腾讯云GPU最佳实践-使用jupyter pycharm远程开发训练

Partage de la conception de l'alimentation électrique de commutation et illustration des compétences en conception de l'alimentation électrique

ArcGIS JS API dojoconfig configuration

为何PostgreSQL即将超越SQL Server?

SSDB基础

開關電源設計分享及電源設計技巧圖解

js上传文件时控制文件类型和大小

An idea of rendering pipeline based on FBO

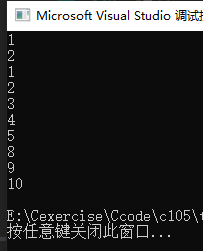

Quick start to static class variables

Transaction processing of SQL Server database

Codeforces Round #784 (Div. 4)

微搭低代码零基础入门课(第三课)

c#:泛型反射

Using Visual Studio code to develop Arduino

Application of DCT transform

Using oes texture + glsurfaceview + JNI to realize player picture processing based on OpenGL es

Tencent cloud GPU best practices - remote development training using jupyter pycharm

简化路径(力扣71)

[today in history] April 23: the first video uploaded on YouTube; Netease cloud music officially launched; The inventor of digital audio player was born

2022.04.23(LC_763_划分字母区间)