How to determine neural network parameters and calculate the number of parameters

2022-08-11 09:34:00 【Yangyang 2013 haha】

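How are neural network weights determined?

The weights of a neural network are obtained by training the network. If you use MATLAB, you do not need to set them yourself: they are assigned automatically after calling newff. They can also be set manually: {}=;{}=. In general, if the inputs are normalized, it is enough to draw w and b as random numbers between 0 and 1.

The purpose of choosing the weights is to let the network learn useful information during training, which means the gradients of the parameters should not be 0.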
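To make the zero-gradient concern concrete, here is a minimal Python sketch. The function names are hypothetical and chosen only for illustration. It shows two ways a gradient can collapse: the sigmoid derivative shrinks toward zero when the pre-activation is large, and the gradient of a weight is zero when the input it multiplies is zero.

```python
import math

def sigmoid(z):
    """Logistic sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    """Derivative of the sigmoid with respect to its pre-activation z."""
    s = sigmoid(z)
    return s * (1.0 - s)

def weight_grad(upstream_grad, input_activation):
    """Gradient of the loss w.r.t. one weight: upstream gradient times the input it multiplies."""
    return upstream_grad * input_activation

# The derivative is largest at z = 0 and shrinks quickly as |z| grows (saturation).
for z in (0.0, 2.0, 10.0):
    print(f"z={z:4.1f}  sigmoid'(z)={sigmoid_grad(z):.6f}")

# A zero input activation gives a zero weight gradient, whatever the upstream gradient is.
print(weight_grad(0.3, 0.0))
```

This is only an illustration on a single unit; in a real network the same effect compounds across layers.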

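Parameter initialization must satisfy two necessary conditions:

1. No activation layer should saturate. For the sigmoid activation function, for example, the initial values must not be so large or so small that the function falls into its saturation region.

2. No activation value should be 0. If an activation layer outputs zero, the input to the next convolutional layer is zero, so that layer's partial derivatives with respect to its weights are zero, and the gradient becomes 0.

Further reading: the relationship between neural networks and weights.

When training an agent to perform a task, one typically chooses a standard neural network architecture and trusts that it has the potential to encode a specific policy for the task. Note that this is only potential: the weight parameters still have to be learned before the potential becomes ability.

Inspired by precocial behavior and innate abilities in nature, the researchers in this work built neural networks that can perform a given task naturally. That is, they look for an innately capable network architecture that can perform the task with only randomly initialized weights.

The researchers report that such architectures, which need no parameter learning, perform well in both reinforcement learning and supervised learning. If we picture a network architecture as defining a circle, then conventional weight learning amounts to finding an optimal point (the optimal parameter solution) inside it.

A network that does not learn its weights, by contrast, introduces an inductive bias so strong that the whole architecture is biased toward solving one particular problem directly. Put differently, it amounts to specializing the architecture again and again, or to reducing model variance.

In this way, once the architecture becomes small enough to contain only the optimal solution, randomized weights can solve the actual problem. Searching from small architectures to large ones, as the researchers do, is also feasible, as long as the architecture just encloses the optimal solution. Source: Baidu Baike, "Neural network".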

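How are the parameters of a fully connected convolutional neural network determined?

Determining the parameters of a fully connected convolutional neural network: a convolutional neural network differs from traditional face detection methods in that it acts directly on the input samples, uses those samples to train the network, and ultimately carries out the detection task.

It is a nonparametric face detection method, which avoids the series of complex steps found in traditional methods, such as modeling, parameter estimation, parameter testing, and model reconstruction. This article targets faces of arbitrary size, position, pose, orientation, skin color, facial expression, and lighting conditions in images.

Input layer: the input layer of a convolutional neural network can handle multidimensional data. Typically, the input layer of a one-dimensional CNN receives a one- or two-dimensional array, where the one-dimensional array is usually a time or spectrum sample and the two-dimensional array may contain multiple channels. The input layer of a two-dimensional CNN receives a two- or three-dimensional array, and the input layer of a three-dimensional CNN receives a four-dimensional array.

Because convolutional neural networks are widely used in computer vision, many studies assume three-dimensional input data when describing the structure, that is, the two-dimensional pixel grid of the image plane plus the RGB channels.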
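The array ranks mentioned above can be illustrated with plain nested lists. This is a small sketch only: the helper `shape` and the example sizes (128 samples, a 24 by 32 image) are assumptions made up for illustration, and real frameworks use their own tensor types and axis orders.

```python
def shape(array):
    """Return the dimensions of a rectangular nested list, outermost first."""
    dims = []
    while isinstance(array, list) and array:
        dims.append(len(array))
        array = array[0]
    return tuple(dims)

# 1-D CNN input: a 1-D array of time samples, or a 2-D array with channels.
signal = [0.0] * 128
multi_channel_signal = [[0.0, 0.0] for _ in range(128)]   # 128 samples x 2 channels

# 2-D CNN input: a 3-D array, here rows x columns x RGB channels.
rgb_image = [[[0, 0, 0] for _ in range(32)] for _ in range(24)]

print(shape(signal))                # one dimension
print(shape(multi_channel_signal))  # two dimensions
print(shape(rgb_image))             # three dimensions
```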

How to choose neural network hyperparameters

1. Algorithms for selecting the hidden layer

1.1 Construction method. First apply three methods for determining the hidden-layer size to obtain three candidate values, and find their minimum and maximum. Then, starting from the minimum, check the model's prediction error for each value in turn until the maximum is reached. Finally, take the value with the smallest model error. This method is suitable for networks with two hidden layers.

1.2 Deletion method. A single-hidden-layer network has weak nonlinear mapping ability, so for the same problem it needs somewhat more hidden nodes to realize the mapping and to increase the number of adjustable parameters. Such networks are therefore suited to the deletion method.

1.3 Golden section method. The main idea of the algorithm: first search the interval [a,b] for an ideal number of hidden-layer nodes, which fully guarantees the network's approximation and generalization ability. To meet a high-precision approximation requirement, expand the search interval according to the golden section principle to obtain the interval [b,c] (where b=0.619*(c-a)+a; the golden ratio itself is approximately 0.618), and search [b,c] for the optimum. This yields a number of hidden-layer nodes with stronger approximation ability. In practice, choose one of the two results according to the requirements.
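The interval expansion in 1.3 can be sketched as follows. Everything here is an illustrative assumption: `toy_error` stands in for a real train-and-validate run, the starting interval [2, 10] is arbitrary, and each interval is searched exhaustively for simplicity rather than by a full golden-section search. The ratio 0.618 is used; the 0.619 quoted in the text gives the same rounded result for these numbers.

```python
def expand_interval(a, b, ratio=0.618):
    """Solve b = ratio * (c - a) + a for c, the right end of the expanded interval."""
    return a + (b - a) / ratio

def best_in_range(lo, hi, error_fn):
    """Return the integer node count in [lo, hi] with the smallest error."""
    return min(range(lo, hi + 1), key=error_fn)

def toy_error(n_hidden):
    """Hypothetical validation error; a real version would train a network with n_hidden nodes."""
    return (n_hidden - 14) ** 2 / 100.0 + 0.05

a, b = 2, 10
c = round(expand_interval(a, b))            # right end of the expanded interval [b, c]

first_choice = best_in_range(a, b, toy_error)    # best count found in [a, b]
second_choice = best_in_range(b, c, toy_error)   # best count found in [b, c]
print(c, first_choice, second_choice)
```

With a real error function each evaluation is a full training run, so the number of candidates tried matters far more than it does in this toy example.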

Choosing the parameters of a BP neural network model

There is no need for so many sample variables, because a neural network's information storage capacity is limited, and too many samples can cause some useful information to be discarded. If the number of samples is very large, increase the number of hidden-layer nodes or the number of hidden layers to strengthen the network's learning ability.

I. Number of hidden layers. It is generally held that adding hidden layers can reduce network error (though some literature argues that it does not necessarily do so effectively) and improve accuracy, but it also makes the network more complex, which increases training time and the tendency to overfit.

In general, the design should give priority to a 3-layer network (that is, one with 1 hidden layer). As a rule, lowering the error by adding hidden-layer nodes is easier to achieve in training than lowering it by adding hidden layers.

A neural network model with no hidden layer is in fact a linear or nonlinear regression model (depending on whether the output layer uses a linear or nonlinear transfer function).

It is therefore generally considered that network models without hidden layers belong to regression analysis, where the techniques are already mature, and there is no need to discuss them within neural network theory.

II. Number of hidden-layer nodes. In a BP network, the choice of the number of hidden-layer nodes is very important. It not only has a large effect on the performance of the resulting model but is also a direct cause of overfitting during training. Yet to date there is no scientific, general method for determining it.

Most formulas proposed in the literature for the number of hidden-layer nodes assume an arbitrarily large number of training samples, and most address the most unfavorable case. This is hard to satisfy in ordinary engineering practice, so these formulas should not be used. In fact, the node counts given by different formulas sometimes differ by a factor of several, or even a hundred.

To avoid overfitting during training as far as possible and to ensure sufficiently high network performance and generalization ability, the most basic principle for choosing the number of hidden-layer nodes is: use the most compact structure that still meets the accuracy requirement, that is, as few hidden-layer nodes as possible.

Research shows that the number of hidden-layer nodes depends not only on the number of input and output nodes but even more on the complexity of the problem, the type of transfer function, and the characteristics of the sample data.

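In neural network algorithms, what methods can be used to set or tune the parameters?

If this helps you, please give it a like. Once the structure of a neural network has been built (for example, 2 inputs, 3 hidden nodes, 1 output), the next task is generally to solve for the weights and thresholds in the network.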
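For a fully connected network, the number of weights and thresholds (biases) to solve for follows directly from the layer sizes. The sketch below assumes every layer is fully connected to the next and every non-input node has one threshold; `count_parameters` is a hypothetical helper name.

```python
def count_parameters(layer_sizes):
    """Count weights and biases of a fully connected network, e.g. [2, 3, 1]."""
    # One weight per connection between consecutive layers.
    weights = sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))
    # One bias (threshold) per node in every layer except the input layer.
    biases = sum(layer_sizes[1:])
    return weights, biases

n_weights, n_biases = count_parameters([2, 3, 1])
print(n_weights, n_biases, n_weights + n_biases)
```

For the 2-3-1 example this gives 2*3 + 3*1 = 9 weights and 3 + 1 = 4 thresholds, 13 parameters in total.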

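Today the weights and thresholds are generally solved with search algorithms of the gradient descent family (gradient descent, Newton's method, the Levenberg-Marquardt method, the dogleg method, and so on). These algorithms first initialize a solution and then, starting from it, determine a search direction and a step length. The algorithms differ in how they determine the direction and the step, which is why they suit different problems. Moving the current solution along this direction by this step should reduce the output of the objective function (in a neural network, the prediction error).

The result becomes the new solution, and the algorithm then looks for the next direction and step. As the iteration continues, the objective function (the prediction error) keeps decreasing, and eventually a solution is found for which the objective function is reasonably small.

During this search, a step that is too large makes the search coarse and may jump over good solutions, while a step that is too small makes the search too slow. Setting the step length appropriately is therefore very important.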
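The effect of the step length can be seen on a one-variable toy problem. The sketch below minimizes f(x) = x**2 with plain gradient descent, where the step is a fixed factor times the gradient. The function, the starting point, and the three factors are assumptions chosen for illustration.

```python
def gradient_descent(lr, x0=1.0, steps=50):
    """Minimize f(x) = x**2 with a fixed step factor lr; return the final x."""
    x = x0
    for _ in range(steps):
        grad = 2.0 * x        # derivative of x**2
        x = x - lr * grad     # move against the gradient, scaled by lr
    return x

for lr in (0.001, 0.1, 1.1):
    print(f"lr={lr}: x after 50 steps = {gradient_descent(lr):.4g}")
```

With the smallest factor the solution has barely moved after 50 steps, with 0.1 it is close to the minimum at 0, and with 1.1 each step overshoots so far that the iterates grow instead of shrinking.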

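The learning rate scales the raw step (in gradient descent, the length of the gradient). If the learning rate is lr=0.1, each gradient descent update moves by 0.1*gradient. In the MATLAB neural network toolbox, however, lr denotes the initial learning rate.

This is because the toolbox uses a variable learning rate in order to choose a suitable step more intelligently at different stages of the search. It adjusts the learning rate according to the effect that the previous update had on the objective function, and then determines the step from the learning rate.

The mechanism is as follows:

    if newE2/E2 > maxE_inc   % if the error rises by more than the threshold
        lr = lr * lr_dec;    % then decrease the learning rate
    elseif newE2 ...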
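Because the snippet above is incomplete, the following Python sketch shows one common form of such a rule; it is not the toolbox's code. The names `lr_inc`, `lr_dec`, and `max_inc`, their values, and the choice to raise the learning rate whenever the error falls are all assumptions made for illustration.

```python
def adaptive_gradient_descent(loss_fn, grad_fn, x, lr=1.5,
                              lr_inc=1.05, lr_dec=0.7, max_inc=1.04, steps=50):
    """Gradient descent whose learning rate reacts to the change in error."""
    loss = loss_fn(x)
    for _ in range(steps):
        candidate = x - lr * grad_fn(x)
        new_loss = loss_fn(candidate)
        if new_loss > max_inc * loss:
            # Error rose by more than the allowed ratio: reject the step, shrink lr.
            lr *= lr_dec
        else:
            if new_loss < loss:
                # Error fell: accept the step and try a slightly larger lr next time.
                lr *= lr_inc
            x, loss = candidate, new_loss
    return x, lr, loss

final_x, final_lr, final_loss = adaptive_gradient_descent(
    loss_fn=lambda x: x * x,      # toy objective f(x) = x**2
    grad_fn=lambda x: 2.0 * x,    # its derivative
    x=3.0,
)
print(final_x, final_lr, final_loss)
```

The initial learning rate of 1.5 is deliberately too large for this objective; the rule rejects the first steps and lowers the rate until the error starts to fall.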

Happy studying.

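How is the number of neurons in a neural network determined?

For an RBF neural network there are only 3 layers: the input layer, the hidden layer, and the output layer. Methods for determining the number of neurons include K-means, ROLS, and other algorithms.

At present there is no mature theorem that determines how many neurons each layer should have or how many layers the network should contain, so most choices still rely on experience. A 3-layer network, however, can approximate any nonlinear mapping, and the more neurons it has, the better the approximation.

The term "neural network" can refer to two things: biological neural networks and artificial neural networks. A biological neural network generally means the network formed by the neurons, cells, and synapses of a living brain, which gives rise to consciousness and helps the organism think and act.

An artificial neural network (Artificial Neural Networks, abbreviated ANNs), also called simply a neural network (NNs) or a connection model (Connection Model), is a mathematical algorithmic model that imitates the behavioral characteristics of animal neural networks to perform distributed, parallel information processing.

Such a network relies on the complexity of the system and processes information by adjusting the interconnections among a large number of internal nodes. In other words, an artificial neural network is a mathematical model that processes information with a structure resembling the synaptic connections of the brain.

In engineering and academia it is also often referred to simply as a "neural network" or a neural-like network.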

How do you determine the number of output neurons of a neural network?

The number of output neurons is determined by what you need. For example, if you need to model the function y=1/x, your input vector is x and the output is y=1/x, so there is one output. Likewise, if you need to model the COD and BOD parameter values of water, you have two outputs.

In your case, for example, the purpose of your symbol recognition is to divide symbols into normal and abnormal ones, so you have 2 outputs. The number is set by the actual need.

How should the weight parameters of a neural network be initialized?

Not necessarily; they can also be set within [-1,1]. In fact, some weights must be negative, otherwise neurons would only ever be excited and never inhibited. As for why [-1,1] is sufficient, there are two reasons: the inputs are normalized, and the output range of the sigmoid function is limited.

When programming, one typically sets up a bounds matrix: bounds=ones(S,1)*[-1,1]; % upper and lower bounds of the weights. In MATLAB, you can initialize directly with net=init(net);.
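Outside MATLAB, the same idea of drawing every weight and bias uniformly from [-1, 1] can be sketched in a few lines of Python. The helper `init_uniform` and its parameters are hypothetical names used only for this illustration.

```python
import random

def init_uniform(n_out, n_in, low=-1.0, high=1.0, seed=None):
    """Draw an n_out x n_in weight matrix and n_out biases uniformly from [low, high]."""
    rng = random.Random(seed)
    weights = [[rng.uniform(low, high) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(low, high) for _ in range(n_out)]
    return weights, biases

# Example: a layer with 2 inputs and 3 neurons.
weights, biases = init_uniform(n_out=3, n_in=2, seed=0)
for row, bias in zip(weights, biases):
    print(row, bias)
```

Passing a seed makes the draw reproducible, which helps when comparing training runs that differ only in some other setting.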

A given network can be initialized by setting the network parameters net.initFcn and net.layers{i}.initFcn. net.initFcn determines the initialization function for the whole network.

For feedforward networks its default value is initlay, which lets each layer use its own initialization function. Once net.initFcn is set, the parameter net.layers{i}.initFcn must also be set to determine the initialization function of each layer.

For feedforward networks, two different initialization methods are commonly used: initwb and initnw.

The initwb function initializes the weight matrices and biases according to each layer's own initialization parameter (net.inputWeights{i,j}.initFcn). For feedforward networks the weight initialization is usually set to rands, which gives each weight a random value between -1 and 1.

This approach is often used when the transfer function is linear. initnw is usually used when the transfer function is a curve.

Following Nguyen and Widrow [NgWi90], it creates initial weights and bias values for a layer so that the active regions of the layer's neurons are distributed roughly evenly over the input space.
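A simplified, textbook-style version of the Nguyen-Widrow idea can be sketched as below. This is not MATLAB's initnw implementation: the scale factor 0.7 * H^(1/n), the initial draw from [-0.5, 0.5], and the helper name `nguyen_widrow` are assumptions taken from the commonly quoted simplified form of the method.

```python
import math
import random

def nguyen_widrow(n_hidden, n_in, seed=None):
    """Simplified Nguyen-Widrow initialization for one hidden layer."""
    rng = random.Random(seed)
    beta = 0.7 * n_hidden ** (1.0 / n_in)   # scale factor for H neurons and n inputs
    weights = []
    for _ in range(n_hidden):
        row = [rng.uniform(-0.5, 0.5) for _ in range(n_in)]
        norm = math.sqrt(sum(w * w for w in row)) or 1.0   # guard against a zero row
        # Rescale the neuron's weight vector so that its length equals beta.
        weights.append([beta * w / norm for w in row])
    # Biases spread over [-beta, beta] so the neurons cover different input regions.
    biases = [rng.uniform(-beta, beta) for _ in range(n_hidden)]
    return weights, biases, beta

weights, biases, beta = nguyen_widrow(n_hidden=4, n_in=2, seed=1)
print(beta)
for row, bias in zip(weights, biases):
    print(row, bias)
```

The sketch assumes the inputs have already been normalized to a range such as [-1, 1].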

Which quantities show how well a neural network fits a function? And how is the number of hidden nodes determined when fitting with a neural network?

Mainly look at the mean squared error (MSE) and its percentage (the accuracy). If the targets are yi and the fitted values are ui, compute the mean of (yi-ui)^2, then take the ratio of this mean to the mean of yi (the MSE percentage). Subtracting this percentage from 1 gives the accuracy.
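The calculation described above can be written out as follows. The helper name `fit_metrics` and the sample numbers are illustrative assumptions, and the "accuracy" is the quantity as defined in this answer, which only makes sense when the mean of the targets is positive.

```python
def fit_metrics(targets, predictions):
    """Return (MSE, MSE as a fraction of the mean target, 1 minus that fraction)."""
    n = len(targets)
    mse = sum((y - u) ** 2 for y, u in zip(targets, predictions)) / n
    mean_target = sum(targets) / n
    mse_fraction = mse / mean_target     # the "MSE percentage" of the answer
    return mse, mse_fraction, 1.0 - mse_fraction

y = [2.0, 4.0, 6.0]   # targets yi
u = [2.5, 3.5, 6.0]   # fitted values ui
mse, mse_fraction, accuracy = fit_metrics(y, u)
print(f"MSE={mse:.4f}  fraction={mse_fraction:.4f}  accuracy={accuracy:.4f}")
```

Here the squared errors are 0.25, 0.25, and 0, so the MSE is 0.5/3, and dividing by the mean target of 4 gives a fraction of about 0.042.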

It is also common to draw a plot that shows yi and ui in different colors or symbols for a direct visual comparison. There is no general formula for the number of hidden-layer nodes needed for fitting, so it can only be found through experience and trial and error.

In general, the more complex the problem (its degree of nonlinearity and its dimensionality), the more hidden-layer nodes are needed. Here is a rule of thumb: first make predictions with a modest number of nodes. If adding nodes improves both test-set accuracy and training-set accuracy, keep adding.

If adding nodes no longer improves test-set accuracy noticeably while training-set accuracy still improves, stop adding. The current number is about right, and adding more nodes will only be counterproductive.
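This rule of thumb can be sketched as a simple loop. The accuracy table below is invented for illustration, `evaluate` stands in for an actual train-and-test run, and the threshold `min_gain` for a "noticeable" improvement is an assumption.

```python
def pick_hidden_nodes(candidates, evaluate, min_gain=0.005):
    """Grow the node count while test accuracy still improves noticeably."""
    best_n = candidates[0]
    _, best_test = evaluate(best_n)
    for n in candidates[1:]:
        _train_acc, test_acc = evaluate(n)
        if test_acc - best_test < min_gain:
            break                      # test accuracy has stopped improving
        best_n, best_test = n, test_acc
    return best_n

# Hypothetical (training accuracy, test accuracy) for each node count.
results = {
    4: (0.80, 0.78),
    8: (0.88, 0.85),
    16: (0.93, 0.88),
    32: (0.97, 0.881),   # training accuracy still rises, test accuracy barely moves
    64: (0.99, 0.86),
}

chosen = pick_hidden_nodes(sorted(results), results.get)
print(chosen)
```

In this made-up table, going from 16 to 32 nodes still raises training accuracy but leaves test accuracy almost unchanged, so the loop stops at 16.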

Questions about setting the parameters of an SPSS neural network model

边栏推荐

猜你喜欢

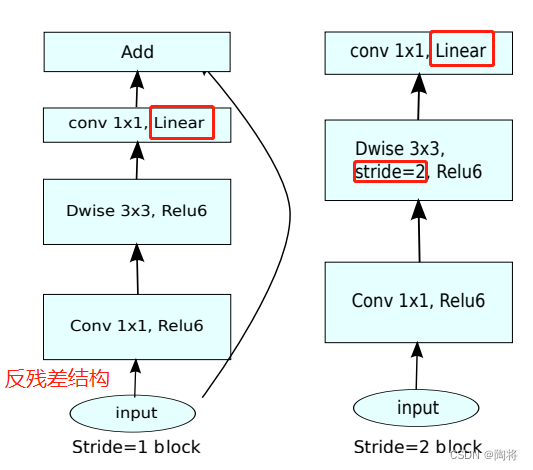

Lightweight network (1): MobileNet V1, V2, V3 series

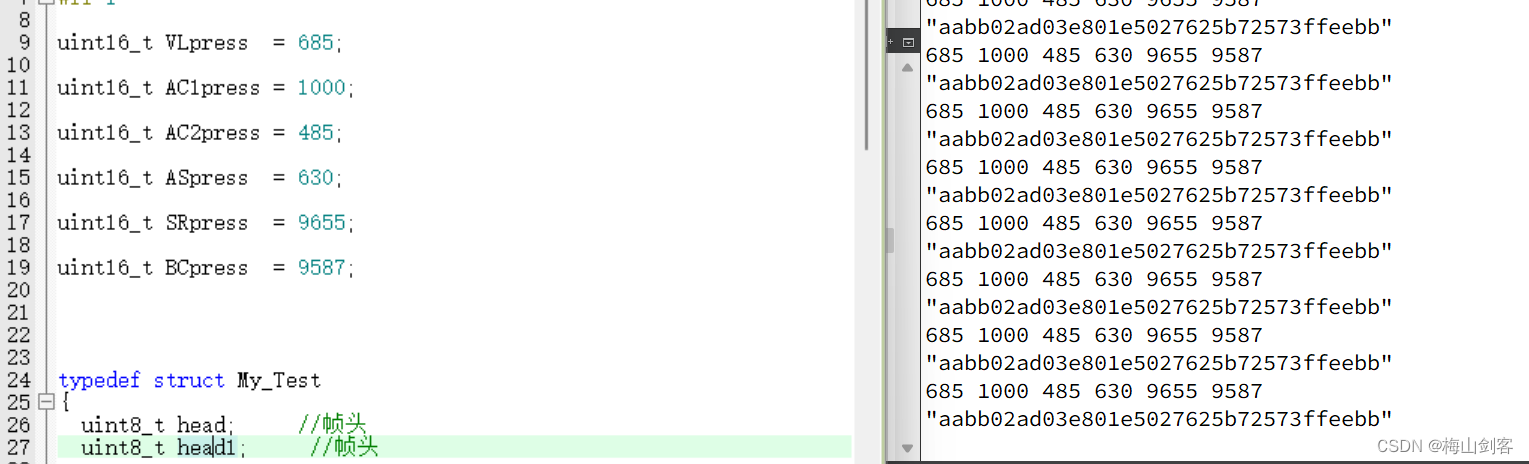

STM32之串口传输结构体

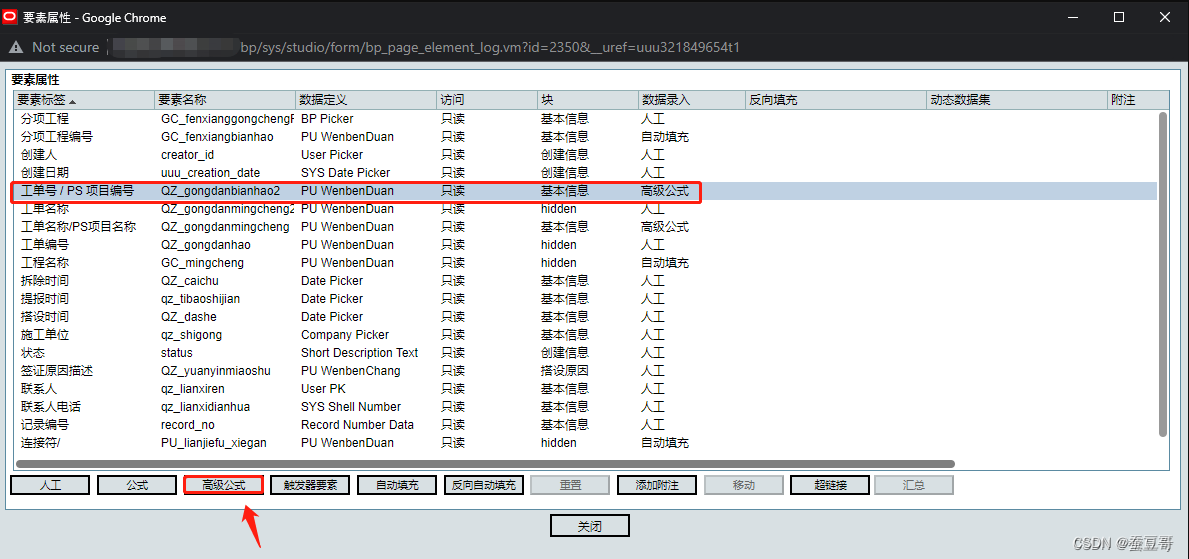

Primavera Unifier 高级公式使用分享

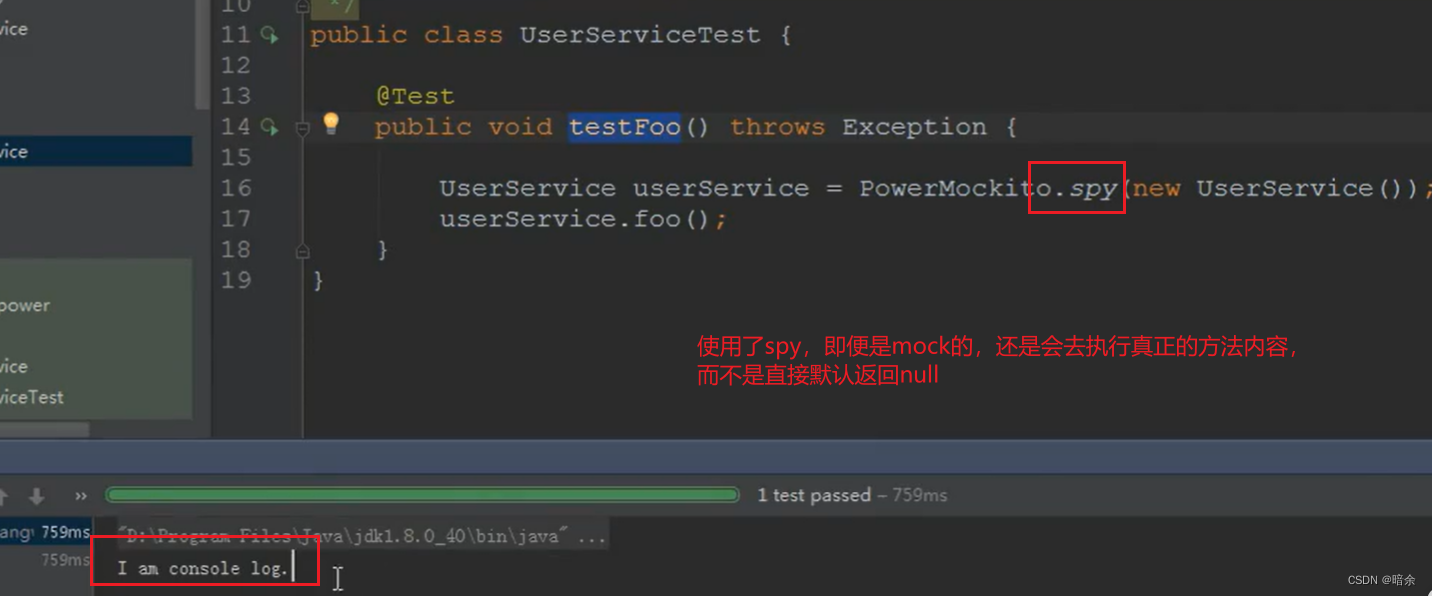

PowerMock for Systematic Explanation of Unit Testing

保证金监控中心保证期货开户和交易记录

OAK-FFC系列产品上手指南

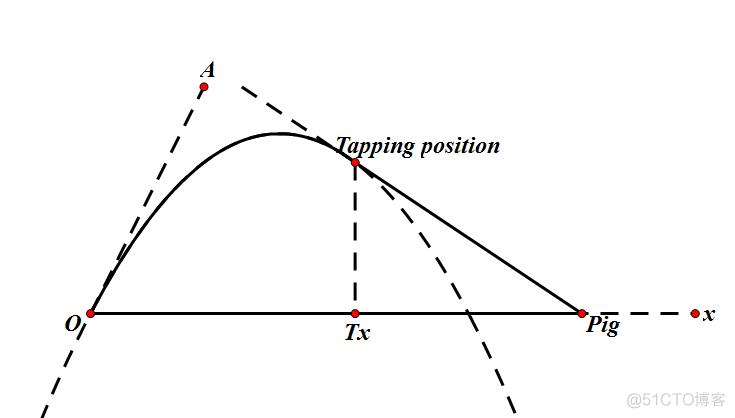

SDUT 2877: angry_birds_again_and_again

Typescript基本类型---上篇

IPQ4019/IPQ4029 support WiFi6 MiniPCIe Module 2T2R 2×2.4GHz 2x5GHz MT7915 MT7975

无代码平台助力中山医院搭建“智慧化管理体系”,实现智慧医疗

随机推荐

Primavera Unifier 自定义报表制作及打印分享

HStreamDB v0.9 发布:分区模型扩展,支持与外部系统集成

神经痛分类图片大全,神经病理性疼痛分类

OAK-FFC Series Product Getting Started Guide

Typescrip编译选项

SDUT 2877: angry_birds_again_and_again

数据中台方案分析和发展方向

深度学习100例 —— 卷积神经网络(CNN)识别眼睛状态

工业检测深度学习方法综述

ES6:数值的扩展

Primavera Unifier -AEM 表单设计器要点

Network model (U - net, U - net++, U - net++ +)

Huawei WLAN Technology: AC/AP Experiment

canvas图像阴影处理

canvas文字绘制(大小、粗体、倾斜、对齐、基线)

HDRP Custom Pass Shader 获取世界坐标和近裁剪平面坐标

MySql事务

Go 语言的诞生

清除微信小程序button的默认样式

【wxGlade学习】wxGlade环境配置