Logstash/Filebeat: ingesting only data from the most recent period

2022-08-11 10:07:00 【Zz Robert】

The project's overall architecture is filebeat + logstash + elasticsearch. The Logstash version is 7.17.3 and the Filebeat version is 7.16.3.

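Requirement

The project uses Logstash to create one Elasticsearch index per day, but the server holds a large backlog of old logs. Starting the pipeline as-is would index every one of them, producing too many indexes and too much data. For now I only want the logs from the last 7 days pushed to ES through Logstash. How can I do that?

A scheduled Linux job that deletes old logs would also work, but I don't want to go that way; I only want to do the filtering in Logstash.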

Approach

Use a Logstash filter with a ruby statement (the ruby statement must sit inside the filter block).

Filtering events:

filter {
  ruby {
    # Regex match: if the message field starts with "info", cancel the event so it goes no further down the pipeline.
    code => "event.cancel if event.get('message') =~ /^info/"
  }
}

Keeping only data from the last 7 days:

filter {
  ruby {
    # Cancel events whose @timestamp is more than 7 days old.
    code => "event.cancel if (Time.now.to_f - event.get('@timestamp').to_f) > (60 * 60 * 24 * 7)"
  }
}

# Note: event['@timestamp'] does not work and raises an error; it must be written as event.get('@timestamp').
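The age check inside the ruby filter can be tried outside Logstash. The following is a minimal sketch in plain Ruby; `too_old?` and `MAX_AGE_SECONDS` are illustrative names, not part of the Logstash API. It also assumes that `@timestamp` carries the time written in the log line (for example, set by a date filter). If `@timestamp` instead holds the time the line was read, every event will look recent and nothing will be dropped.

```ruby
# Standalone sketch of the 7-day age check from the ruby filter.
# `too_old?` and MAX_AGE_SECONDS are hypothetical names for illustration.
MAX_AGE_SECONDS = 60 * 60 * 24 * 7 # 7 days, in seconds

# True when event_time lies more than max_age seconds before now.
def too_old?(event_time, now = Time.now, max_age = MAX_AGE_SECONDS)
  (now.to_f - event_time.to_f) > max_age
end

now = Time.utc(2022, 8, 11, 10, 7, 0)
puts too_old?(Time.utc(2022, 8, 1), now)  # => true  (about 10 days old)
puts too_old?(Time.utc(2022, 8, 10), now) # => false (about 1.4 days old)
```

In the filter itself, the same comparison decides whether `event.cancel` is called.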

The Filebeat approach

The Filebeat configuration file has a parameter for this: ignore_older

If this option is enabled, Filebeat ignores files that were last modified before the specified time span. Configuring ignore_older is especially useful when log files are kept for a long time. For example, if you want to start Filebeat but only send the newest files and the files from the last week, you can configure this option.

You can use time strings such as 2h (2 hours) and 5m (5 minutes). The default is 0, which disables the setting.

You must set ignore_older to a value greater than close_inactive.
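The rule that ignore_older must exceed close_inactive is easy to check before deploying a configuration. The sketch below is an assumption-laden illustration: `duration_seconds` and `ignore_older_valid?` are hypothetical helpers, and the parser only understands single-unit strings in seconds, minutes, or hours, which is narrower than what Filebeat itself accepts.

```ruby
# Simplified parser for single-unit time strings such as "5m" or "168h".
# Hypothetical helper: only s, m and h are handled, plus a bare "0".
UNIT_SECONDS = { "s" => 1, "m" => 60, "h" => 3600 }.freeze

def duration_seconds(text)
  return 0 if text == "0"
  match = /\A(\d+)([smh])\z/.match(text)
  raise ArgumentError, "unsupported duration: #{text}" unless match
  match[1].to_i * UNIT_SECONDS[match[2]]
end

# ignore_older of 0 means the setting is disabled, so there is nothing to compare.
def ignore_older_valid?(ignore_older, close_inactive)
  older = duration_seconds(ignore_older)
  older.zero? || older > duration_seconds(close_inactive)
end

puts ignore_older_valid?("168h", "24h") # => true
puts ignore_older_valid?("2m", "5m")    # => false
```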

close_inactive

When this option is enabled, Filebeat closes the file handle if the file has not been harvested within the specified duration. The countdown starts when the harvester reads the last line of the log; it is not based on the file's modification time. If a closed file changes again, a new harvester is started, and the latest changes are picked up after scan_frequency has elapsed.

It is recommended to set close_inactive to a value larger than the interval at which your log files are least frequently updated. For example, if your log files are updated every few seconds, you can safely set close_inactive to 1m. If different log files are updated at very different rates, you can use multiple configurations with different values.

Setting close_inactive to a lower value means file handles are closed sooner. The side effect is that, once the harvester has been closed, new log lines are not sent in near real time.

The timestamp used for closing a file does not depend on the file's modification time. Instead, Filebeat uses an internal timestamp that records when the file was last read. For example, if close_inactive is set to 5 minutes, the 5-minute countdown starts after the harvester reads the last line of the file.

You can use time strings such as 2h (2 hours) and 5m (5 minutes). The default is 5m.

scan_frequency

How often Filebeat checks for new files in the specified paths (that is, the scan frequency). For example, if the path you specify is /var/log/*, the files in that directory are scanned periodically at the interval given by scan_frequency. Specifying 1s scans the directory as often as is reasonable without scanning too frequently. Setting it below 1 second is not recommended.

If you need to send log lines in near real time, do not set scan_frequency to a very low value. Instead, adjust close_inactive so that the file handle stays open and the file is polled continuously.

The default is 10 seconds.

Aside:

Let's look once more at these three options: ignore_older, close_inactive, and scan_frequency.

- ignore_older: sets a time span; files that have not been updated within that span are ignored.

- scan_frequency: sets how often files are scanned to see whether they have been updated.

- close_inactive: closes the file handle when a file has not been updated for a long time. It works as a countdown: if the file does not change during the countdown, the handle is closed when the countdown ends. Setting it below 1 second is not recommended.

If a file is updated again after its handle has been closed, the next scan cycle detects the change and the file is reopened to read the new log lines. As mentioned earlier, the position where each file was last read (the offset) is recorded in the registry file.
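How the three options interact can be pictured with a toy model of a single scan pass. This is only a sketch of the behaviour described above, not Filebeat's actual implementation: `FileState`, `next_action`, and the returned symbols are all hypothetical, and durations are plain seconds.

```ruby
# Toy model of what one scan pass decides for one file.
# FileState and next_action are hypothetical names, not Filebeat internals.
FileState = Struct.new(:modified_at, :last_read_at, :handle_open)

def next_action(file, now, ignore_older:, close_inactive:)
  if file.handle_open
    # close_inactive counts from the last read, not from the modification time.
    idle = now - file.last_read_at
    return idle >= close_inactive ? :close_handle : :keep_reading
  end
  # ignore_older (0 = disabled) is judged on the file's modification time.
  return :ignore if ignore_older > 0 && (now - file.modified_at) > ignore_older
  # Closed or never-read file: harvest only if there is something new.
  changed = file.last_read_at.nil? || file.modified_at > file.last_read_at
  changed ? :start_harvester : :skip
end

DAY = 24 * 60 * 60
now = Time.utc(2022, 8, 11, 10, 0, 0)
opts = { ignore_older: 7 * DAY, close_inactive: DAY }

puts next_action(FileState.new(now - 10 * DAY, nil, false), opts.merge({})[:ignore_older] ? now : now, **opts) # => ignore
puts next_action(FileState.new(now - 60, now - 3600, false), now, **opts)                                     # => start_harvester
```

With a scan running every scan_frequency, a change to a closed file is noticed at most one scan interval later, which is why keeping the handle open (a larger close_inactive) matters more for latency than a very small scan_frequency.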

The resulting configuration:

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /home/logs/monitor/*.log
  ignore_older: 168h
  close_inactive: 24h
  fields:
    type: beats-monitor-store
- type: log
  enabled: true
  paths:
    - /home/logs/gateway/*.log
  ignore_older: 168h
  close_inactive: 24h
  fields:
    type: beats-gateway-store

output.logstash:
  hosts: ["xxxx:5044"]

参考文献:

边栏推荐

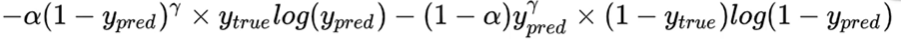

- Segmentation Learning (loss and Evaluation)

- Convolutional Neural Network System,Convolutional Neural Network Graduation Thesis

- 大家有遇到这种错吗?flink-sql 写入 clickhouse

- SQL statement

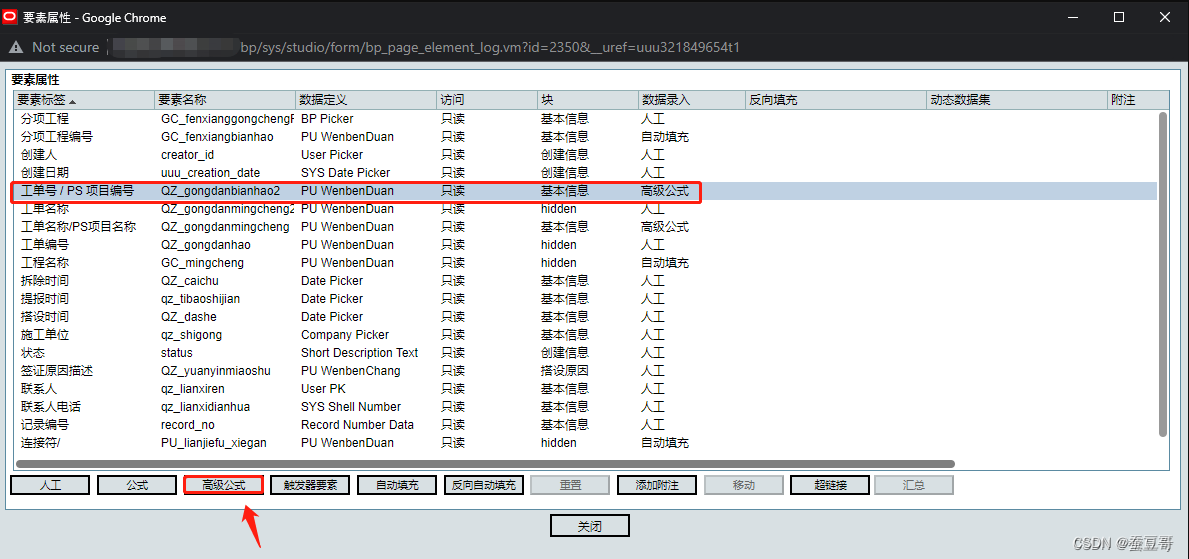

- Primavera Unifier advanced formula usage sharing

- pycharm 取消msyql表达式高亮

- 收集awr

- WordpressCMS主题开发01-首页制作

- 深度神经网络与人脑神经网络哪些区域有一定联系?

- 力扣打卡----打家劫舍

猜你喜欢

Adobe LiveCycle Designer report designer

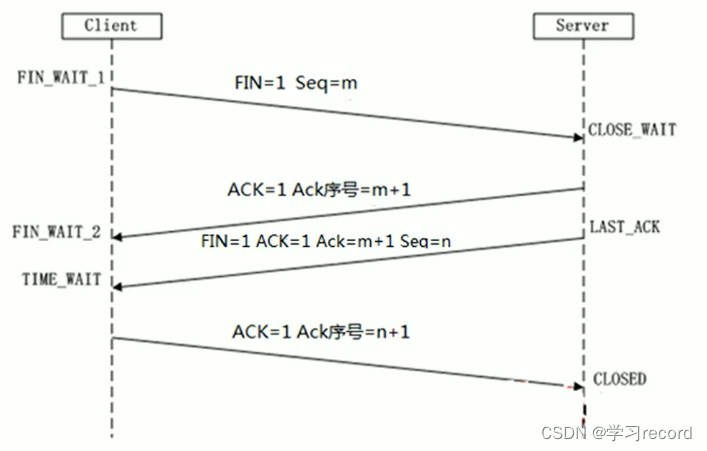

三次握手与四次挥手

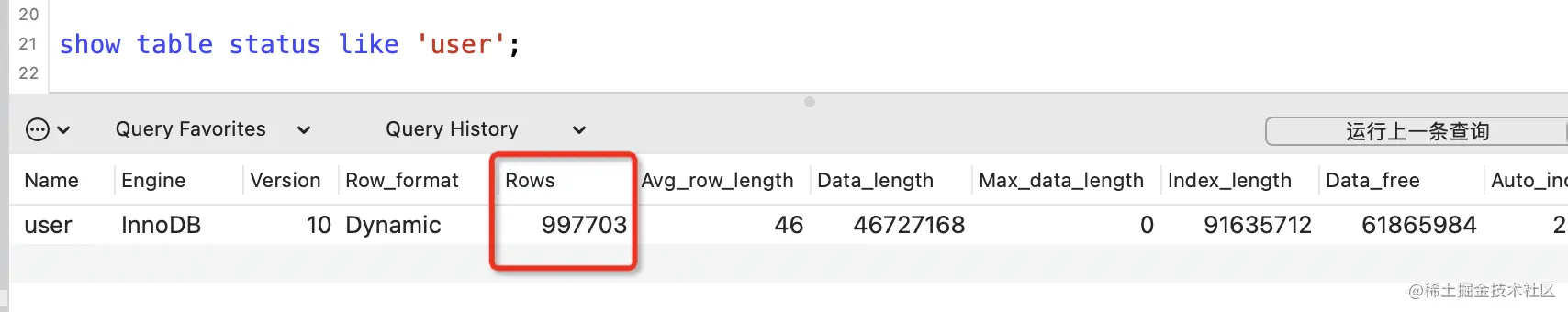

MySQL select count(*) count is very slow, is there any optimization solution?

How to improve the efficiency of telecommuting during the current epidemic, sharing telecommuting tools

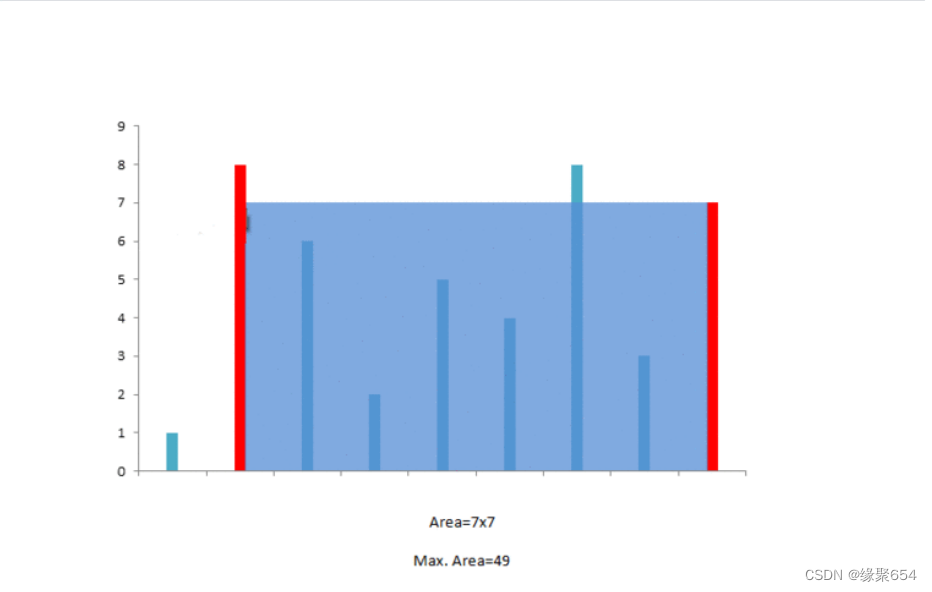

力扣题解8/10

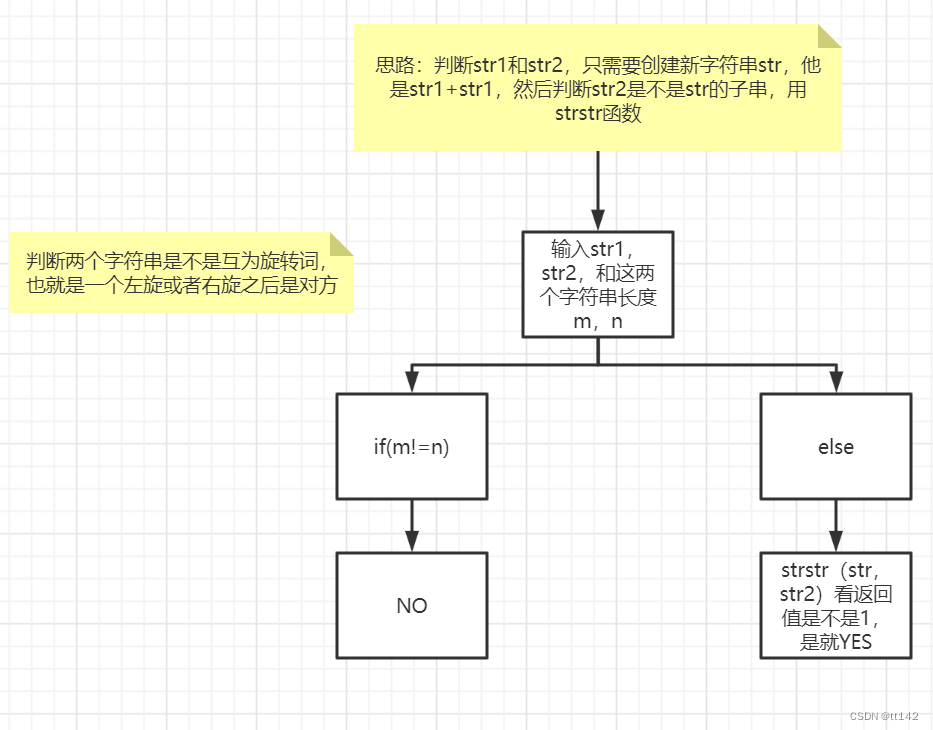

【剑指offer】左旋字符串,替换空格,还有类题!!!

Primavera Unifier 高级公式使用分享

Segmentation Learning (loss and Evaluation)

Six functions of enterprise exhibition hall production

Software custom development - the advantages of enterprise custom development of app software

随机推荐

canvas图片操作

计算数组某个元素的和

idea plugin autofill setter

PowerMock for Systematic Explanation of Unit Testing

爆料!前华为微服务专家纯手打500页落地架构实战笔记,已开源

QTableWidget 使用方法

卷积神经网络梯度消失,神经网络中梯度的概念

【luogu CF1427F】Boring Card Game(贪心)(性质)

SQL statement

mindspore 执行模型转换为310的mindir文件显示无LRN算子

【教程】区块链是数据库?那么区块链的数据存储在哪里?如何查看数据?FISCO-BCOS如何更换区块链的数据存储,由RocksDB更换为MySQL、MariaDB,联盟链区块链数据库,区块链数据库应用

使用stream实现两个list集合的合并(对象属性的合并)

VideoScribe卡死解决方案

Database Basics

HDRP shader 获取像素深度值和法线信息

A few days ago, Xiaohui went to Guizhou

训练一个神经网络要多久,神经网络训练时间过长

Halcon算子解释

腾讯电子签开发说明

[UE] 入坑