当前位置:网站首页>Clickhouse table engine

Clickhouse table engine

2022-04-23 17:07:00 【Magic Flute love】

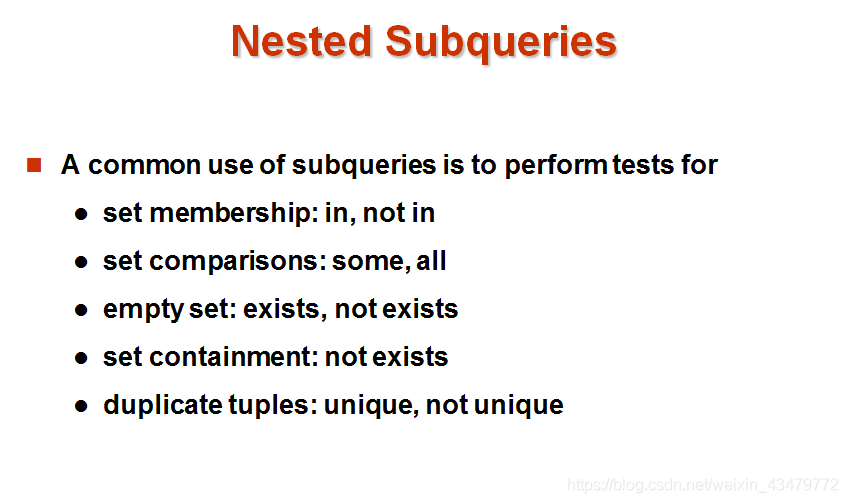

Watch engine

Use of table engine

The watch engine is ClickHouse A major feature of . so to speak , The table engine determines how to store table data . Include :

- How and where data is stored , Where to write and where to read data , Can integrate external databases , for example Mysql、Kafka、HDFS etc. .

- What queries are supported and how to support .

- Concurrent data access .

- Use of index ( If there is ).

- Can multithreaded requests be executed .

- Copy data .

The use of the table engine is that the engine used by the table must be explicitly defined when the table is created , And the relevant parameters used by the engine .

Particular attention : The name of the engine is case sensitive

If you need it, you can check it on the official website https://clickhouse.com/docs/zh/interfaces/formats/

TinyLog

Save on disk in the form of column file , Index not supported , There is no concurrency control . A small table that usually holds a small amount of data , Limited role in production environment . Sure For daily practice and test .

Such as :

create table t_tinylog (id String, name String) engine=TinyLog;

Memory

Memory engine , The data is stored directly in memory in uncompressed original form , When the server restarts, the data disappears . Read and write operations do not block each other , Index not supported . Very, very high performance under simple queries ( exceed 10G/s).

There are not many places to use it , Except to test , It's when you need very high performance , At the same time, the amount of data is not too large ( The upper limit is about 1 Billion rows ) Scene .

MergeTree

ClickHouse in The most powerful table engine is MergeTree( Merge tree ) engine And the series (*MergeTree) Other engines in , Supports indexing and partitioning , Status can be equivalent to innodb To Mysql. And based on MergeTree, In addition to many younger brothers , It is also a very distinctive engine .

/* Create table statement */

create table t_order_mt(

id UInt32,

sku_id String,

total_amount Decimal(16,2),

create_time Datetime

) engine =MergeTree

partition by toYYYYMMDD(create_time)

primary key (id)

order by (id,sku_id);

/* insert data */

insert into t_order_mt values

(101,'sku_001',1000.00,'2020-06-01 12:00:00') ,

(102,'sku_002',2000.00,'2020-06-01 11:00:00'),

(102,'sku_004',2500.00,'2020-06-01 12:00:00'),

(102,'sku_002',2000.00,'2020-06-01 13:00:00'),

(102,'sku_002',12000.00,'2020-06-01 13:00:00'),

(102,'sku_002',600.00,'2020-06-02 12:00:00');

/* Query results */

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 102 │ sku_002 │ 600.00 │ 2020-06-02 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 101 │ sku_001 │ 1000.00 │ 2020-06-01 12:00:00 │

│ 102 │ sku_002 │ 2000.00 │ 2020-06-01 11:00:00 │

│ 102 │ sku_002 │ 2000.00 │ 2020-06-01 13:00:00 │

│ 102 │ sku_002 │ 12000.00 │ 2020-06-01 13:00:00 │

│ 102 │ sku_004 │ 2500.00 │ 2020-06-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

MergeTree In fact, there are many parameters ( Most use default values ), But three parameters are more important , It also involves about MergeTree A lot of concepts .

Sorting is a sort within a partition .

partition by Partition ( Optional )

1) effect

Did you learn hive Should be no stranger , The purpose of partitioning is mainly to reduce the scanning range , Optimize query speed

2) If you don't fill in

Only one partition will be used .

3) Partition Directory

MergeTree Is a column file + Index file + Table definition file composition , But if the partition is set, these files will be saved to different partition directories .

The partition directory is named : Partition id_ Minimum partition block number _ Maximum partition block number _ The level of consolidation

Partition id(partitionId) The generation rules of :

Data partitioning rules are defined by partitioning id decision , Partition id Yes partition by Partition key to determine , According to the partition key field type id Generation rules are divided into :

- Partition key not defined : No definition partition by, By default, a directory named all Data partition for , All the data is in all Next ;

- Integer partition key : The partition key is an integer , Use the integer value of the string directly id;

- Date type partition key : Partition key is date type , Or it can be converted to a date type ;

- Other types of partition keys :String、Float Type, etc , adopt 128 Bit Hash The algorithm takes its Hash Value as partition id

Minimum partition block number (minBlockNum): Self increasing type , from 1 Start increasing up , The directory partition is incremented upward without generating a new directory partition 1;

Maximum partition fast label (maxBlockNum): Newly created partition minBlockNum And so on MaxBlockNum;

The level of consolidation (Level): The number of times it was merged , The more times you merge , The higher the level value is

4) parallel

After partition , In the face of query statistics involving cross partitions ,ClickHouse Will process in parallel on a partition by partition basis . It is recommended to partition according to days .

5) Data write and partition merge

Any batch of data writing will generate a temporary partition , Will not include any existing partitions . Some time after writing ( Probably 10-15 Minutes later ),ClickHouse The merge operation will be performed automatically ( Can't wait to manually pass optimize perform ), Put the data of temporary partition , Merge into existing partition .

optimize table t_order_mt final;

/* Specify the partition to merge */

optimize table t_order_mt partition '20200601' final;

For example, merge manually 20200601_1_1_0 and 20200601_3_3_0 after , Will become 20200601_1_3_1, and 20200601_1_1_0 and 20200601_3_3_0 The documents will not be cleared immediately , But it will be deleted when it is actually merged

6) for example

Perform the above insertion operation again

insert into t_order_mt values

(101,'sku_001',1000.00,'2020-06-01 12:00:00') ,

(102,'sku_002',2000.00,'2020-06-01 11:00:00'),

(102,'sku_004',2500.00,'2020-06-01 12:00:00'),

(102,'sku_002',2000.00,'2020-06-01 13:00:00'),

(102,'sku_002',12000.00,'2020-06-01 13:00:00'),

(102,'sku_002',600.00,'2020-06-02 12:00:00');

The view data is not included in any partitions

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 102 │ sku_002 │ 600.00 │ 2020-06-02 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 102 │ sku_002 │ 600.00 │ 2020-06-02 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 101 │ sku_001 │ 1000.00 │ 2020-06-01 12:00:00 │

│ 102 │ sku_002 │ 2000.00 │ 2020-06-01 11:00:00 │

│ 102 │ sku_002 │ 2000.00 │ 2020-06-01 13:00:00 │

│ 102 │ sku_002 │ 12000.00 │ 2020-06-01 13:00:00 │

│ 102 │ sku_004 │ 2500.00 │ 2020-06-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 101 │ sku_001 │ 1000.00 │ 2020-06-01 12:00:00 │

│ 102 │ sku_002 │ 2000.00 │ 2020-06-01 11:00:00 │

│ 102 │ sku_002 │ 2000.00 │ 2020-06-01 13:00:00 │

│ 102 │ sku_002 │ 12000.00 │ 2020-06-01 13:00:00 │

│ 102 │ sku_004 │ 2500.00 │ 2020-06-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

Manual optimize after

optimize table t_order_mt final;

Query again

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 102 │ sku_002 │ 600.00 │ 2020-06-02 12:00:00 │

│ 102 │ sku_002 │ 600.00 │ 2020-06-02 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 101 │ sku_001 │ 1000.00 │ 2020-06-01 12:00:00 │

│ 101 │ sku_001 │ 1000.00 │ 2020-06-01 12:00:00 │

│ 102 │ sku_002 │ 2000.00 │ 2020-06-01 11:00:00 │

│ 102 │ sku_002 │ 2000.00 │ 2020-06-01 13:00:00 │

│ 102 │ sku_002 │ 12000.00 │ 2020-06-01 13:00:00 │

│ 102 │ sku_002 │ 2000.00 │ 2020-06-01 11:00:00 │

│ 102 │ sku_002 │ 2000.00 │ 2020-06-01 13:00:00 │

│ 102 │ sku_002 │ 12000.00 │ 2020-06-01 13:00:00 │

│ 102 │ sku_004 │ 2500.00 │ 2020-06-01 12:00:00 │

│ 102 │ sku_004 │ 2500.00 │ 2020-06-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

primary key Primary key ( Optional )

ClickHouse The primary key , Different from other databases , It only provides a primary index of the data , But it's not the only constraint . This means that there can be the same primary key Data. .

The setting of the primary key is mainly based on the... In the query statement where Conditions .

Perform some form of binary search on the primary key according to the conditions , Can locate the corresponding index granularity, Avoid full table scans .

index granularity: Direct translation is index granularity , Referring to Sparse index The interval of data corresponding to two adjacent indexes in the .ClickHouse Medium MergeTree The default is 8192. Officials do not recommend changing this value , Unless there are a large number of duplicate values in this column , For example, only tens of thousands of rows in a partition have different data .

Sparse index :

The advantage of sparse index is that it can use very little index data , Locate more data , The cost is to locate only the first row of the index granularity , Then do a little more scanning .

order by( Mandatory )

order by Set the Within the Division According to which fields are the data stored in order .

order by yes MergeTree The only required item in , Even better than primary key Also important , Because when the user does not set the primary key , A lot of processing will follow order by To process the fields ( For example, we will talk about de duplication and summary later ).

requirement : Primary key must be order by The prefix field of the field , There is a primary key , The primary key must be placed first

such as order by The fields are (id,sku_id) Then the primary key must be id perhaps (id,sku_id)

Secondary indexes ( Hop index )

Currently in ClickHouse The function of secondary index on the official website of v20.1.2.4 It was marked as experimental , After this version, it is enabled by default .

1、 Before using the secondary index in the old version, you need to add settings

Whether experimental secondary indexes are allowed **(v20.1.2.4 Start , This parameter has been deleted , Default on )**

set allow_experimental_data_skipping_indices=1;

2、 Create test table

create table t_order_mt2(

id UInt32,

sku_id String,

total_amount Decimal(16,2),

create_time Datetime,

INDEX a total_amount TYPE minmax GRANULARITY 5

) engine =MergeTree

partition by toYYYYMMDD(create_time)

primary key (id)

order by (id, sku_id);

among GRANULARITY N Is to set the granularity of the secondary index to the primary index , The index divides the data into several pieces , This N In the secondary index is to N Blocks are combined into an interval .

3、 insert data

insert into t_order_mt2 values

(101,'sku_001',1000.00,'2020-06-01 12:00:00') ,

(102,'sku_002',2000.00,'2020-06-01 11:00:00'),

(102,'sku_004',2500.00,'2020-06-01 12:00:00'),

(102,'sku_002',2000.00,'2020-06-01 13:00:00'),

(102,'sku_002',12000.00,'2020-06-01 13:00:00'),

(102,'sku_002',600.00,'2020-06-02 12:00:00');

4、 Contrast effect

Then use the following statement to test , It can be seen that the secondary index can play a role in the query of non primary key fields .

## --send_logs_level=trace Indicates that the client directly logs , Or get the log file to see

[bd@hadoop113 lib]$ clickhouse-client --send_logs_level=trace <<< 'select * from t_order_mt2 where total_amount > toDecimal32(900., 2)';

data TTL

TTL namely Time To Live,MergeTree Provides a way to manage data tables or columns Life cycle The function of , Data expiration time

Column level TTL

/* Create test table , Expired fields cannot make primary keys */

create table t_order_mt3(

id UInt32,

sku_id String,

total_amount Decimal(16,2) TTL create_time+interval 10 SECOND,

create_time Datetime

) engine =MergeTree

partition by toYYYYMMDD(create_time)

primary key (id)

order by (id, sku_id);

/* insert data ( Be careful : Change according to the actual time ) */

insert into t_order_mt3 values

(106,'sku_001',1000.00,'2021-12-07 14:15:50'),

(107,'sku_002',2000.00,'2021-12-07 14:15:50'),

(110,'sku_003',600.00,'2021-12-07 14:15:50');

/* Merge manually , After the effect expires , The specified field data belongs to 0 */

optimize table t_order_mt3 final;

/* stay 2021-12-07 14:15:50 When viewing data before , Normal data , After the merger, it is also normal stay 2021-12-07 14:16:00 View data after and before merging , It's normal , After merging, you get the following data */

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 106 │ sku_001 │ 0.00 │ 2021-12-07 14:15:50 │

│ 107 │ sku_002 │ 0.00 │ 2021-12-07 14:15:50 │

│ 110 │ sku_003 │ 0.00 │ 2021-12-07 14:15:50 │

└─────┴─────────┴──────────────┴─────────────────────┘

Table level TTL

The following statement is that the data will be in create_time after 10 Seconds lost

alter table t_order_mt3 MODIFY TTL create_time + INTERVAL 10 SECOND;

The field involving judgment must be Date perhaps Datetime type , It is recommended to use the date field of the partition .

Time period that can be used :

- SECOND

- MINUTE

- HOUR

- DAY

- WEEK

- MONTH

- QUARTER

- YEAR

about TTL Overdue data , The default operation is to delete , Or you can move and other operations , You can check the corresponding description on the official website .

ReplacingMergeTree

ReplacingMergeTree yes MergeTree A variation of , It fully inherits the storage feature MergeTree, Just one more duplicate removal The function of . Even though MergeTree You can set the primary key , however primary key In fact, there is no unique constraint function . If you want to get rid of duplicate data , You can use this ReplacingMergeTree.

De duplication is not based on the primary key , But according to order by Field de duplication .

1) De duplication of time

Data De duplication only occurs during the merge process . The merge will take place in the background at an unknown time , So you can't plan ahead . Some of the data may not have been processed .

2) Weight removal range

If the table is partitioned , De duplication will only be performed within the partition , Cannot perform cross partition de duplication .

therefore ReplacingMergeTree Limited ability , ReplacingMergeTree It is suitable for removing duplicate data in the background to save space , But it is There is no guarantee that there will be no duplicate data .

3) Case presentation

Create table

create table t_order_rmt(

id UInt32,

sku_id String,

total_amount Decimal(16,2) ,

create_time Datetime

) engine = ReplacingMergeTree(create_time)

partition by toYYYYMMDD(create_time)

primary key (id)

order by (id, sku_id);

ReplacingMergeTree() The filled parameter is the version field , Maximum value of duplicate data retention version field . If you don't fill in the version field , By default, the last one is retained in the insertion order . If the identification fields are the same, the insertion order is also compared

Insert the following data twice , When inserting, a de duplication operation will be performed first

insert into t_order_rmt values

(101,'sku_001',1000.00,'2020-06-01 12:00:00') ,

(102,'sku_002',2000.00,'2020-06-01 11:00:00'),

(102,'sku_004',2500.00,'2020-06-01 12:00:00'),

(102,'sku_002',2000.00,'2020-06-01 13:00:00'),

(102,'sku_002',12000.00,'2020-06-01 13:00:00'),

(102,'sku_002',600.00,'2020-06-02 12:00:00');

Perform the first query

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 102 │ sku_002 │ 600.00 │ 2020-06-02 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 101 │ sku_001 │ 1000.00 │ 2020-06-01 12:00:00 │

│ 102 │ sku_002 │ 12000.00 │ 2020-06-01 13:00:00 │

│ 102 │ sku_004 │ 2500.00 │ 2020-06-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 102 │ sku_002 │ 600.00 │ 2020-06-02 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 101 │ sku_001 │ 1000.00 │ 2020-06-01 12:00:00 │

│ 102 │ sku_002 │ 12000.00 │ 2020-06-01 13:00:00 │

│ 102 │ sku_004 │ 2500.00 │ 2020-06-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

Execute the query after merging

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 101 │ sku_001 │ 1000.00 │ 2020-06-01 12:00:00 │

│ 102 │ sku_002 │ 12000.00 │ 2020-06-01 13:00:00 │

│ 102 │ sku_004 │ 2500.00 │ 2020-06-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 102 │ sku_002 │ 600.00 │ 2020-06-02 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

4) Through the test, we get the conclusion

* It's actually using order by Field as the unique key

* De duplication cannot cross partitions

* Only Insert in the same batch ( The new version ) Or merge partitions , It's the de duplication in the partition

* Identify duplicate data retention , The version field with the largest value

* If the version fields are the same, keep the last one in the insertion order

SummingMergeTree

For non query details , We only care about the scenario of summarizing and aggregating results by dimension . If you only use ordinary MergeTree Words , Whether it's the overhead of storage space , Or the overhead of temporary aggregation during query is relatively large .

ClickHouse For this scenario , Provides a way to “ Prepolymerization ” The engine of SummingMergeTree

Partition aggregation and fragment merging

1) Case presentation

Create table

create table t_order_smt(

id UInt32,

sku_id String,

total_amount Decimal(16,2) ,

create_time Datetime

) engine = SummingMergeTree(total_amount)

partition by toYYYYMMDD(create_time)

primary key (id)

order by (id,sku_id );

insert data

insert into t_order_smt values

(101,'sku_001',1000.00,'2020-06-01 12:00:00'),

(102,'sku_002',2000.00,'2020-06-01 11:00:00'),

(102,'sku_004',2500.00,'2020-06-01 12:00:00'),

(102,'sku_002',2000.00,'2020-06-01 13:00:00'),

(102,'sku_002',12000.00,'2020-06-01 13:00:00'),

(102,'sku_002',600.00,'2020-06-02 12:00:00');

The results of the first query are as follows , When the same batch is inserted , There will be an aggregation first

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 102 │ sku_002 │ 600.00 │ 2020-06-02 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 101 │ sku_001 │ 1000.00 │ 2020-06-01 12:00:00 │

│ 102 │ sku_002 │ 16000.00 │ 2020-06-01 11:00:00 │

│ 102 │ sku_004 │ 2500.00 │ 2020-06-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

Then insert the above data , The query results are as follows

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 102 │ sku_002 │ 600.00 │ 2020-06-02 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 101 │ sku_001 │ 1000.00 │ 2020-06-01 12:00:00 │

│ 102 │ sku_002 │ 16000.00 │ 2020-06-01 11:00:00 │

│ 102 │ sku_004 │ 2500.00 │ 2020-06-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 102 │ sku_002 │ 600.00 │ 2020-06-02 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 101 │ sku_001 │ 1000.00 │ 2020-06-01 12:00:00 │

│ 102 │ sku_002 │ 16000.00 │ 2020-06-01 11:00:00 │

│ 102 │ sku_004 │ 2500.00 │ 2020-06-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

Merge manually

optimize table t_order_mt3 final;

give the result as follows

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 102 │ sku_002 │ 1200.00 │ 2020-06-02 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

┌──id─┬─sku_id──┬─total_amount─┬─────────create_time─┐

│ 101 │ sku_001 │ 2000.00 │ 2020-06-01 12:00:00 │

│ 102 │ sku_002 │ 32000.00 │ 2020-06-01 11:00:00 │

│ 102 │ sku_004 │ 5000.00 │ 2020-06-01 12:00:00 │

└─────┴─────────┴──────────────┴─────────────────────┘

2) The following conclusions can be drawn from the results

* With SummingMergeTree() The columns specified in are used as summary data columns

* You can fill in more than one column, which must be numeric , If you don't fill in , With all A field that is not a dimension column and is a numeric column For summary data columns

* With order by Shall prevail , As a dimension column

* The other columns keep the first row in the insertion order

* Data not in a partition will not be aggregated , Intra partition aggregation

* Insert only in the same batch ( The new version ) Or fragment merging

3) Development Suggestions

Designing aggregation tables , Unique key value 、 The serial number can be removed , All fields are dimensions 、 Measurement or timestamp .

4) problem

Can you directly execute the following SQL Get the summary value

select total_amount from XXX where province_name=’’ and create_date=’xxx’

no way , It may contain some temporary details that have not yet been aggregated

If you get the aggregate value , Still need to use sum Aggregate , In this way, the efficiency will be improved , But in itself ClickHouse Is stored in columns , Limited efficiency improvement , Not particularly obvious .

select sum(total_amount) from province_name=’’ and create_date=‘xxx’

版权声明

本文为[Magic Flute love]所创,转载请带上原文链接,感谢

https://yzsam.com/2022/04/202204231704450676.html

边栏推荐

- EF core in ASP Generate core priority database based on net entity model

- Path environment variable

- 1-5 nodejs commonjs specification

- PHP efficiently reads large files and processes data

- ◰ GL shader handler encapsulation

- 【WPF绑定3】 ListView基础绑定和数据模板绑定

- 线性代数感悟之1

- Redis docker installation

- Shortcut keys (multiline)

- Multithreaded @ async thread pool

猜你喜欢

SQL database

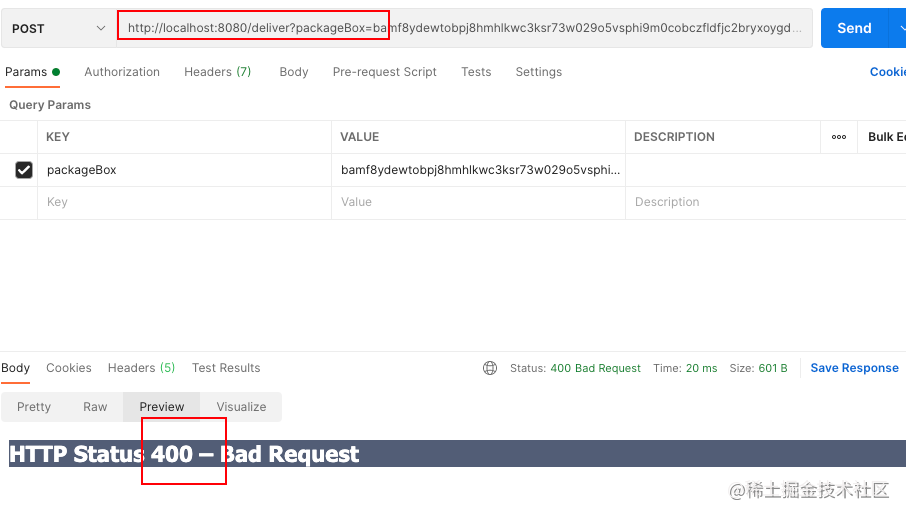

Feign report 400 processing

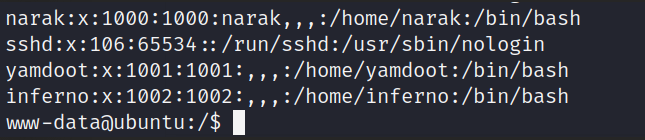

Detailed explanation of the penetration of network security in the shooting range

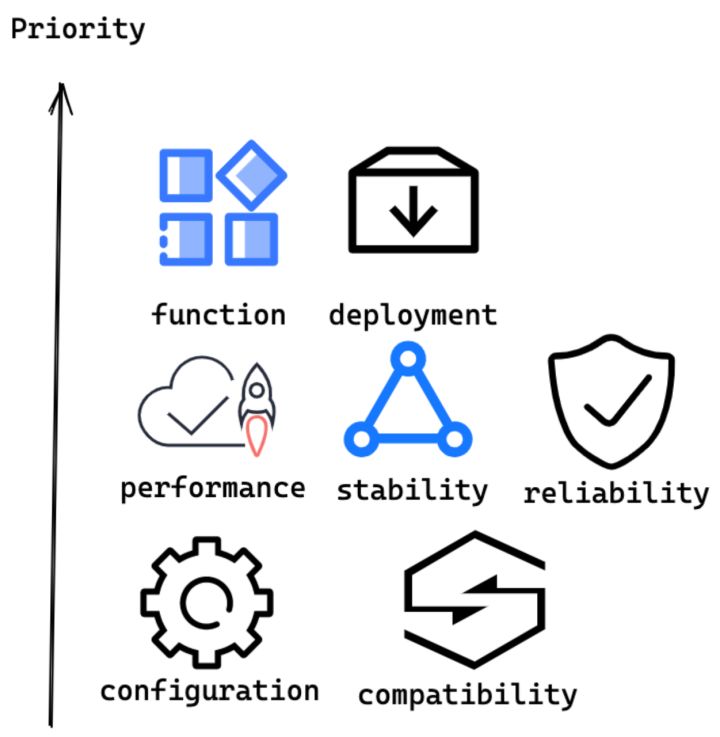

Milvus 2.0 质量保障系统详解

![[pimf] openharmony paper Club - what is the experience of wandering in ACM survey](/img/b6/3df53baafb9aad3024d10cf9b56230.png)

[pimf] openharmony paper Club - what is the experience of wandering in ACM survey

Bottom processing of stack memory in browser

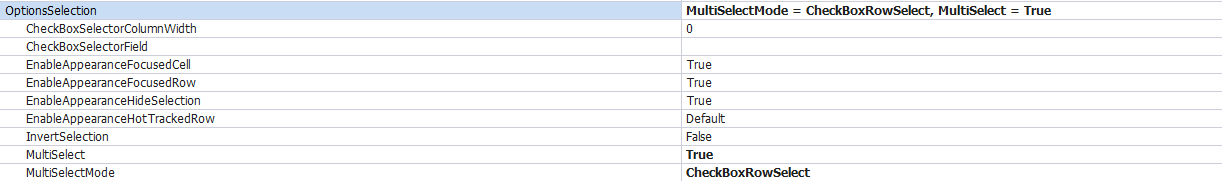

Devexpress GridView add select all columns

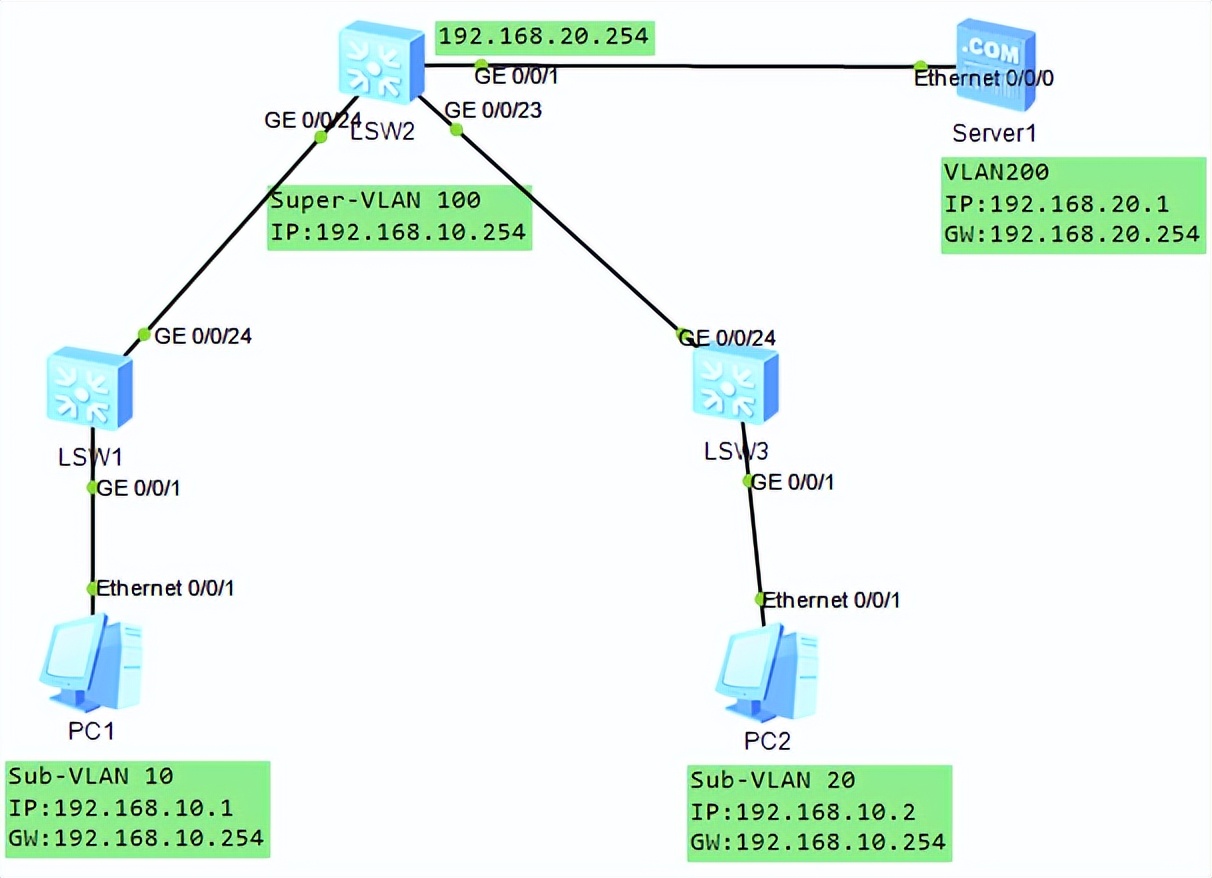

VLAN高级技术,VLAN聚合,超级Super VLAN ,Sub VLAN

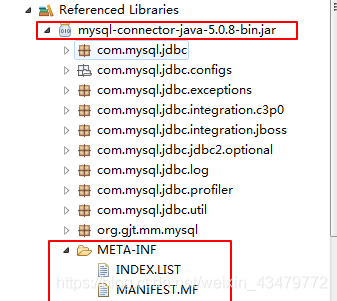

Getting started with JDBC

Nacos + aspnetcore + Ocelot actual combat code

随机推荐

Shell script -- shell programming specification and variables

MySQL restores data through binlog file

feign报400处理

freeCodeCamp----shape_ Calculator exercise

1-2 characteristics of nodejs

Get the column name list of the table quickly in Oracle

RTKLIB 2.4.3源码笔记

ASP. Net core configuration options (Part 2)

AIOT产业技术全景结构-数字化架构设计(8)

Aiot industrial technology panoramic structure - Digital Architecture Design (8)

MySQL modify master database

Promise (III)

Multithreaded @ async thread pool

ACL 2022 | dialogved: a pre trained implicit variable encoding decoding model for dialogue reply generation

Path environment variable

Conversion between hexadecimal numbers

Node access to Alipay open platform sandbox to achieve payment function

Calculate pie chart percentage

groutine

Nifi fast installation and file synchronization