
Spatial Pyramid Pooling (SPP), including source code

2022-08-11 07:13:00 【KPer_Yang】

Table of Contents

1. Problems solved by Spatial Pyramid Pooling

2. Principle of Spatial Pyramid Pooling

3. Code implementation of Spatial Pyramid Pooling

Reference:

"Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition"

1. Problems solved by Spatial Pyramid Pooling

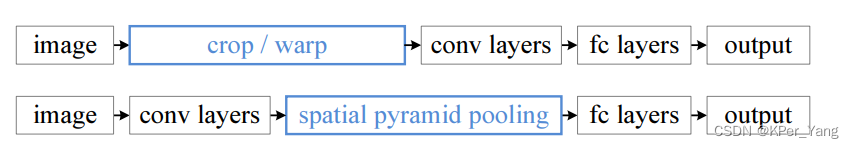

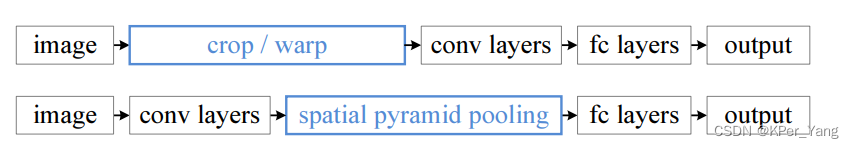

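Spatial pyramid pooling mainly solves the problem of input images having different resolutions. The convolutional layers of a CNN accept inputs of any size, but the fully connected layers that follow need a fixed-length input vector. Previously, images were therefore cropped or scaled (warped) to a fixed size, which easily loses image information: cropping may cut off part of the object, and scaling distorts its geometry. SPP instead pools feature maps of any size into a fixed-length vector. The difference between these approaches is shown in Figure 1.1: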

Figure 1.1 The difference between cropping, scaling and Spatial Pyramid Pooling
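To make the fixed-length property concrete, the small sketch below (helper names are hypothetical) compares the length of a directly flattened C×H×W feature map with the length of an SPP output. It assumes 256 channels and a pyramid of 4×4, 2×2 and 1×1 bins, as in the figure of the SPP-net paper; the feature-map sizes are arbitrary examples.

```python
def flatten_length(channels: int, height: int, width: int) -> int:
    """Length of a C x H x W feature map flattened directly: depends on H and W."""
    return channels * height * width


def spp_length(channels: int, levels: tuple) -> int:
    """Length of an SPP vector: one pooled value per bin per channel."""
    return channels * sum(n * n for n in levels)


for h, w in [(13, 13), (10, 17)]:
    # The flattened length changes with the input size; the SPP length does not.
    print((h, w), flatten_length(256, h, w), spp_length(256, (4, 2, 1)))
# (13, 13) 43264 5376
# (10, 17) 43520 5376
```

Because the SPP length depends only on the channel count and the pyramid levels, the first fully connected layer can keep a fixed weight shape for any input resolution.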

2. Principle of Spatial Pyramid Pooling

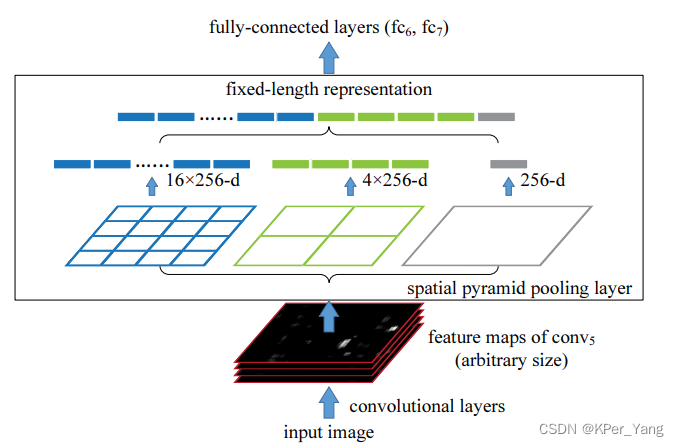

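As shown in Figure 2.1, SPP-Net pools the feature map at several pyramid levels, each of which divides the map into a fixed grid of bins, and then flattens and concatenates the pooled outputs into one vector. The figure uses three levels with 4×4, 2×2 and 1×1 bins (16, 4 and 1 bins per channel, i.e. 16×256-d, 4×256-d and 256-d vectors for 256 channels). When applying SPP to your own task, you can change the levels according to factors such as the size of the feature map. Unlike an ordinary pooling layer, whose kernel size is fixed, each level derives its window size from the feature-map size so that it always produces the same number of bins: for an a×a map and n×n bins, the paper uses a window of ⌈a/n⌉ and a stride of ⌊a/n⌋. When a side of the feature map is not divisible by n (height and width are handled separately when they differ), padding is needed so that the windows divide the map into exactly n bins per side.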

Figure 2.1 The principle diagram of Spatial Pyramid Pooling implementation
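The pooling described above can be sketched without any framework. This pure-Python version works on nested lists (one list of rows per channel), and the names `adaptive_bins` and `spp_pure_python` are hypothetical. For the bin boundaries it assumes the adaptive-pooling rule (bin i spans rows ⌊i·a/n⌋ to ⌈(i+1)·a/n⌉), which always yields exactly n bins per axis; this is a swapped-in choice rather than a fixed window and stride, so treat it as an illustration of the idea, not the paper's exact procedure.

```python
from typing import List, Sequence, Tuple

Grid = List[List[float]]  # one channel of a feature map: a list of rows


def adaptive_bins(size: int, n: int) -> List[Tuple[int, int]]:
    """Split [0, size) into n bins: start = floor(i*size/n), end = ceil((i+1)*size/n)."""
    return [((i * size) // n, -((-(i + 1) * size) // n)) for i in range(n)]


def spp_pure_python(channels: Sequence[Grid], levels: Sequence[int]) -> List[float]:
    """Max-pool every channel into n x n bins per level and concatenate the results.

    The output length is len(channels) * sum(n * n for n in levels),
    independent of the height and width of the input.
    """
    height, width = len(channels[0]), len(channels[0][0])
    out: List[float] = []
    for n in levels:  # one pyramid level at a time
        row_bins = adaptive_bins(height, n)
        col_bins = adaptive_bins(width, n)
        for grid in channels:  # channel-major order within a level
            for r0, r1 in row_bins:
                for c0, c1 in col_bins:
                    out.append(max(grid[r][c] for r in range(r0, r1) for c in range(c0, c1)))
    return out


print(spp_pure_python([[[1, 2], [3, 4]]], (2, 1)))  # [1, 2, 3, 4, 4]

small = [[float(r * 7 + c) for c in range(7)] for r in range(5)]
large = [[float(r * 13 + c) for c in range(13)] for r in range(13)]
print(len(spp_pure_python([small, small], (4, 2, 1))))  # 42
print(len(spp_pure_python([large, large], (4, 2, 1))))  # 42
```

The 5×7 and 13×13 inputs both produce 2 × (16 + 4 + 1) = 42 values, which is the property the fully connected layer relies on.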

3. Code implementation of Spatial Pyramid Pooling

```python
import math

import torch
import torch.nn as nn


def spatial_pyramid_pool(previous_conv, num_sample, previous_conv_size, out_pool_size):
    '''
    previous_conv: output tensor of the previous convolution layer, shape [num_sample, C, H, W]
    num_sample: an int, the number of images in the batch
    previous_conv_size: an int vector [height, width] of the feature map of the previous convolution layer
    out_pool_size: an int vector of the expected output size (bins per side) of each max pooling level

    returns: a tensor of shape [num_sample x n], the concatenation of all pooling levels
    '''
    for i in range(len(out_pool_size)):
        # Window size (used as both kernel and stride) so that out_pool_size[i]
        # windows cover the feature map along each axis
        h_wid = int(math.ceil(previous_conv_size[0] / out_pool_size[i]))
        w_wid = int(math.ceil(previous_conv_size[1] / out_pool_size[i]))
        # Padding must be an int: use floor division ("/" yields a float in Python 3)
        h_pad = (h_wid * out_pool_size[i] - previous_conv_size[0] + 1) // 2
        w_pad = (w_wid * out_pool_size[i] - previous_conv_size[1] + 1) // 2
        # nn.MaxPool2d requires padding <= kernel_size / 2, so this raises an error when the
        # feature map is small relative to out_pool_size[i] (e.g. height 5 with 4 bins)
        maxpool = nn.MaxPool2d((h_wid, w_wid), stride=(h_wid, w_wid), padding=(h_pad, w_pad))
        x = maxpool(previous_conv)
        # Flatten each level to [num_sample, C * n * n] and concatenate along dim 1
        if i == 0:
            spp = x.view(num_sample, -1)
        else:
            spp = torch.cat((spp, x.view(num_sample, -1)), 1)
    return spp
```
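The window and padding arithmetic in `spatial_pyramid_pool` can be checked on its own, without PyTorch. The sketch below (hypothetical helper names) reproduces the per-axis kernel and padding computation, applies the standard floor-mode pooling output formula, and reflects the constraint that PyTorch's max pooling places on padding (at most half the kernel size). Whenever that constraint holds, each level produces exactly the requested number of bins.

```python
import math


def window_and_padding(size: int, bins: int) -> tuple:
    """Kernel/stride size and padding that spatial_pyramid_pool uses along one axis."""
    wid = math.ceil(size / bins)
    pad = (wid * bins - size + 1) // 2
    return wid, pad


def pooled_length(size: int, kernel: int, stride: int, pad: int) -> int:
    """Output length of max pooling along one axis (floor mode, no dilation)."""
    return (size + 2 * pad - kernel) // stride + 1


def padding_allowed(kernel: int, pad: int) -> bool:
    """Padding larger than half the kernel size is rejected by PyTorch's pooling."""
    return pad <= kernel // 2


for size, bins in [(13, 4), (10, 4), (6, 4), (5, 4)]:
    wid, pad = window_and_padding(size, bins)
    if padding_allowed(wid, pad):
        print(size, bins, wid, pad, pooled_length(size, wid, wid, pad))
    else:
        print(size, bins, wid, pad, "padding too large")
# 13 4 4 2 4
# 10 4 3 1 4
# 6 4 2 1 4
# 5 4 2 2 padding too large
```

The last case shows the limitation noted in the code comment: with a 5-pixel side and 4 bins the required padding exceeds half the window, so this scheme needs feature maps that are not too small relative to the largest pyramid level.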

猜你喜欢

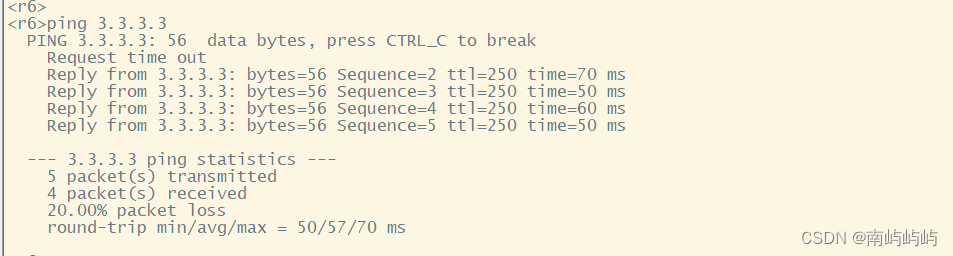

HCIP OSPF动态路由协议

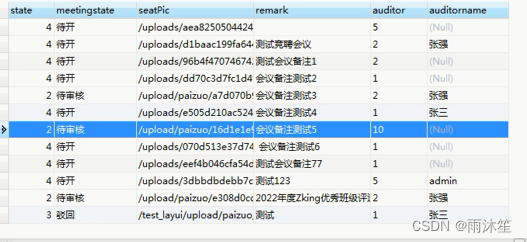

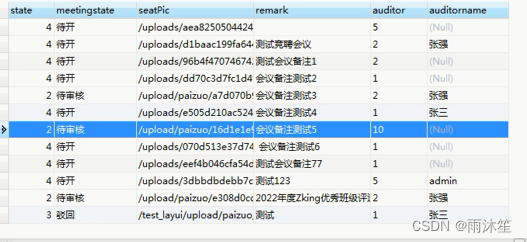

Conference OA Project My Conference

亚马逊API接口大全

My approval of OA project (inquiry & meeting signature)

HCIP WPN实验

OA项目之我的审批(查询&会议签字)

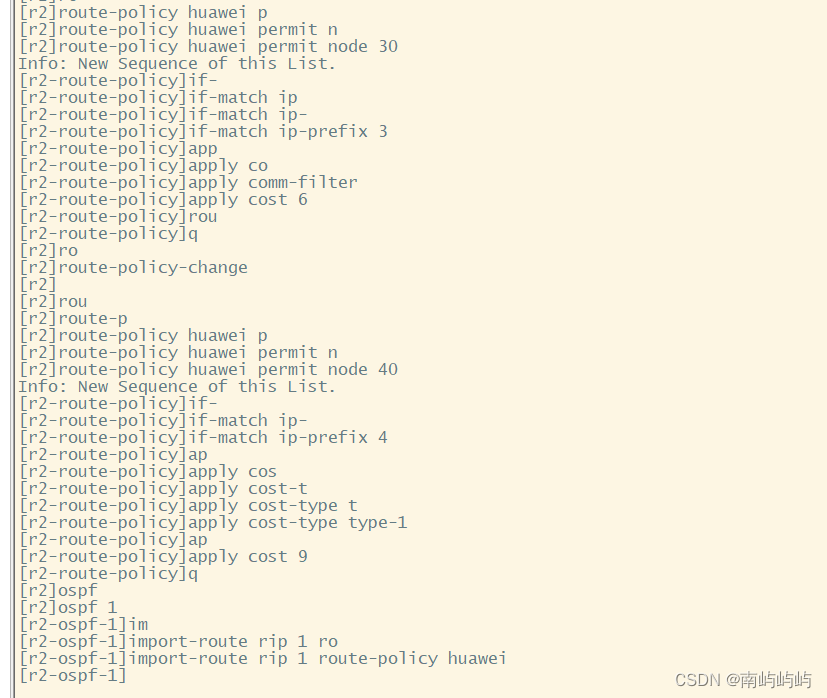

HCIP Republish/Routing Policy Experiment

HCIP-生成树(802.1D ,标准生成树/802.1W : RSTP 快速生成树/802.1S : MST 多生成树)

拼多多API接口(附上我的可用API)

空间金字塔池化 -Spatial Pyramid Pooling(含源码)

随机推荐

arcgis填坑_3

1688商品详情接口

MySQL之CRUD

HCIP Republish/Routing Policy Experiment

cloudreve使用体验

每日sql-统计各个专业人数(包括专业人数为0的)

iptables 流量统计

HCIA knowledge review

防火墙-0-管理地址

HCIP MPLS/BGP综合实验

空间点模式方法_一阶效应和二阶效应

华为防火墙会话 session table

京东商品详情API调用实例讲解

利用opencv读取图片,重命名。

OA项目之待开会议&历史会议&所有会议

TOP2两数相加

HCIP-Spanning Tree (802.1D, Standard Spanning Tree/802.1W: RSTP Rapid Spanning Tree/802.1S: MST Multiple Spanning Tree)

抖音API接口

Record a Makefile just written

淘宝API接口参考