Sharing guests : Da Yue Gao @ US group review ,Apache Kylin PMC member ,Druid Commiter

Edit and organize :Druid Chinese user group 6th MeetUp

Production platform :DataFunTalk

--

Reading guide : For a long time , Yes SQL And permission support has always been Druid The weakness of . Although the community has been in 0.9 and 0.12 The version adds the right SQL and Security Support for , But according to our understanding , Considering the maturity and stability of the function , The real SQL and Security The number of users is relatively small . This sharing will introduce the community SQL and Security The principle of the scheme , And the problems met in the process of implementing the two functions of meituan reviews 、 Improvements made 、 And the final results . Let's start today's sharing :

My share today consists of four parts . First , Let me introduce meituan to you Druid The status quo of the use of , And what we're building Druid Challenges encountered in the process of platform development . The second part , Introduce Druid SQL The basic principle and usage of , And what we're using Druid SQL Problems encountered in the process of and some improvements made . The third part , Introduce Druid Support for data security , And we combine our own business needs in Druid Security Practical experience in the field . Last , Make a summary of today's sharing .

--

01 Druid The current situation and challenges in meituan

1.Druid Application status

Meituan comes from 16 Put into use Druid, The cluster version is from 0.8 To the present 0.12 edition . There are two on the line Druid colony , There are about 70 Multiple data nodes .

Data scale , There are 500 Multiple tables ,100TB The storage , The biggest watch starts from Kafka The amount of news ingested is at the level of 10 billion . In terms of inquiry , The number of queries per day is 1700 More than ten thousand times , Here are some regular queries initiated by the program , For example, multidimensional queries triggered regularly in risk control scenarios . Performance aspect , Different application scenarios have different requirements , But on the whole TP99 A table with a response time of one second takes up 80%, It's not the same with us Druid The positioning of —— Second level real time OLAP The engine is consistent .

2.Druid Platform challenges

hold Druid In the process of providing a service for business use , We mainly encountered ease of use 、 Security 、 Three challenges of stability .

Ease of use : Business will care about Druid How high is the cost of learning and using , Whether you can access... Soon . As we all know ,Druid It only provides data writing and query based on JSON Of API Interface , You need to learn how to use the interface , Understand the meaning of various fields , The use cost is very high . This is the problem we hope to solve through platform .

Security : Data is one of the core assets of many businesses , Business is very concerned about Druid Whether the service can guarantee their data security .Druid Earlier versions have weak support for security , Therefore, this is also the key part of our construction last year .

stability : On the one hand, we need to solve various stability problems during the implementation of open source systems , On the other hand , How to when the query logic is uncontrollable , Locate and solve problems in a multi tenant environment , It is also a great challenge .

--

02 Druid SQL Application and improvement of

stay Druid SQL Before appearance ,Druid The query is based on JSON Of DSL To express ( The figure below ). This query language costs a lot to learn first , Users need to know Druid What are provided queryType, Each of these queryType Which parameters need to be passed , How to choose the right one queryType etc. . Secondly, the use cost is high , The application needs to implement JSON Request generation logic and response JSON The analytic logic of .

adopt Druid SQL, You can make the above complex JSON Write the following standard SQL.SQL The convenience is obvious , On the one hand, there is no additional learning cost for programmers and data analysts , On the other hand, you can use something like JDBC Standard interface for , Greatly reduced the threshold .

1.Druid SQL brief introduction

Let me briefly introduce Druid SQL.

First ,Druid SQL yes 0.10 A new core module in version , from Druid The community provides continuous support and optimization , So whether it's stability or perfection , Will give more than others Druid add to SQL Dialect projects are better .

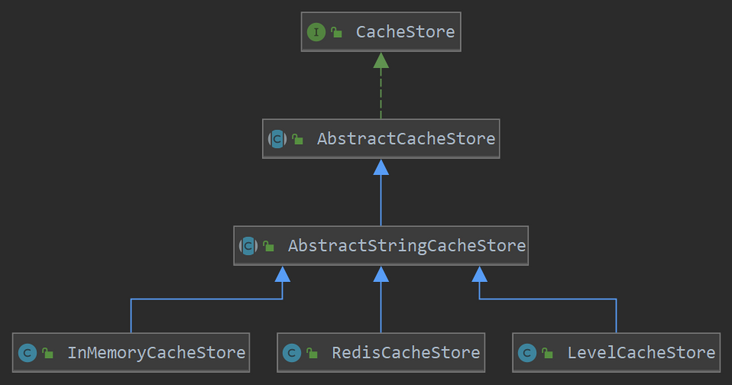

In principle ,Druid SQL Mainly realized from SQL To native JSON Translation layer of query language . Because only a layer of language translation is done , The advantage is Druid SQL It will not have a great impact on the stability and performance of the cluster , The disadvantage is limited to native JSON The ability to query ,Druid SQL only SQL A subset of features .

Call mode ,Druid SQL Provides HTTP and JDBC Two ways to meet the needs of different applications . Finally, expressiveness ,Druid SQL It can express almost everything JSON The logic that queries can implement , And it can automatically help you choose the most suitable queryType.

Here are three Druid SQL Example .

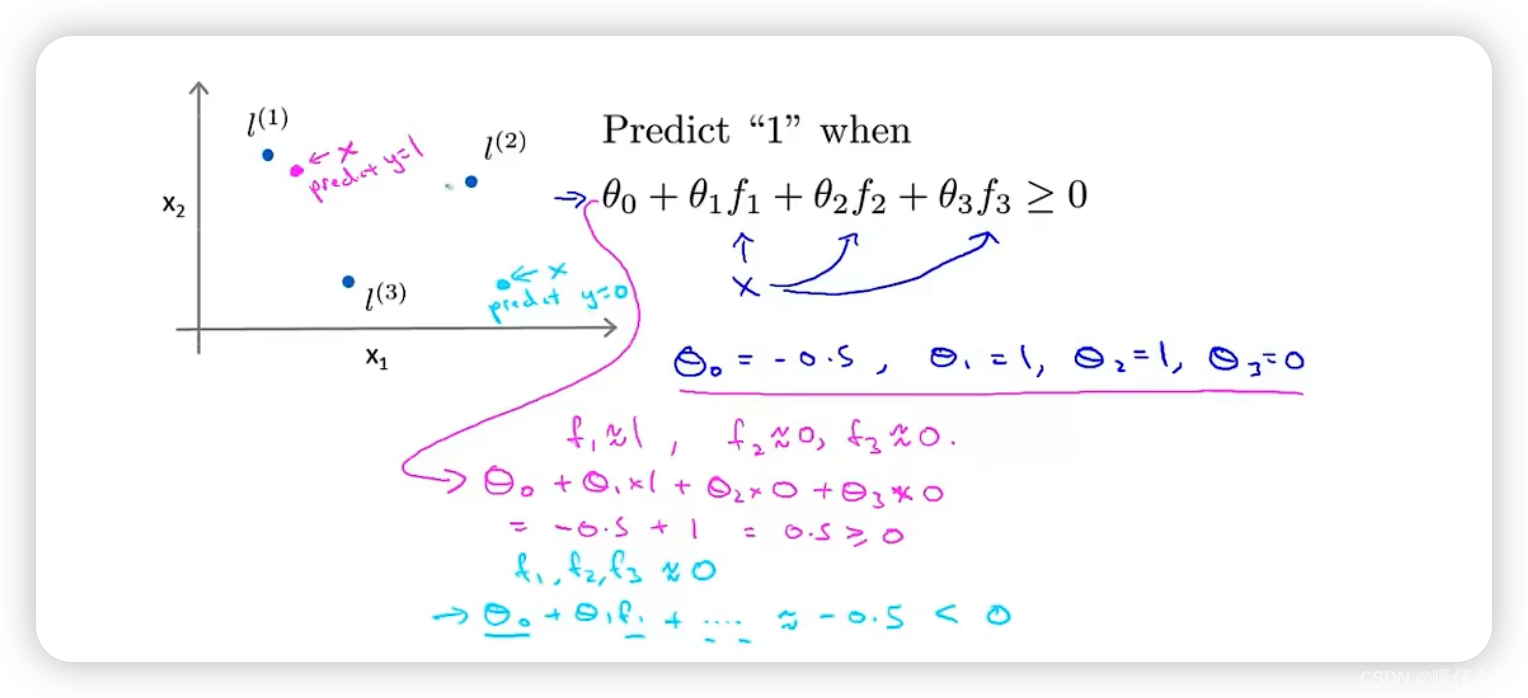

The first example is the approximation TopN Inquire about . For analyzing a single dimension based on an indicator TopN The need for value , Native JSON Queries provide an approximation TopN Implementation of algorithm .Druid SQL Can recognize this pattern , Generate the corresponding approximation TopN Inquire about .

The second example is semi connected . We know Druid Flexibility is not supported JOIN Of , But businesses often have such needs , The result of the first query is used as the filter condition of the second query , use SQL The expression is in subquery, Or semi connected .Druid SQL Special support is provided for this scenario , Users do not need to initiate multiple queries in the application layer , It's written as in subquery Just the form of .Druid SQL The subquery is executed first , Materialize the results into outer query filter conditions , Then execute the outer query .

Finally, there is a nested GroupBy Example .Druid SQL Can recognize this kind of multi-layer GroupBy structure , Generate the corresponding native nested GroupBy JSON .

2.Druid SQL framework

Let's introduce Druid SQL The overall structure of .

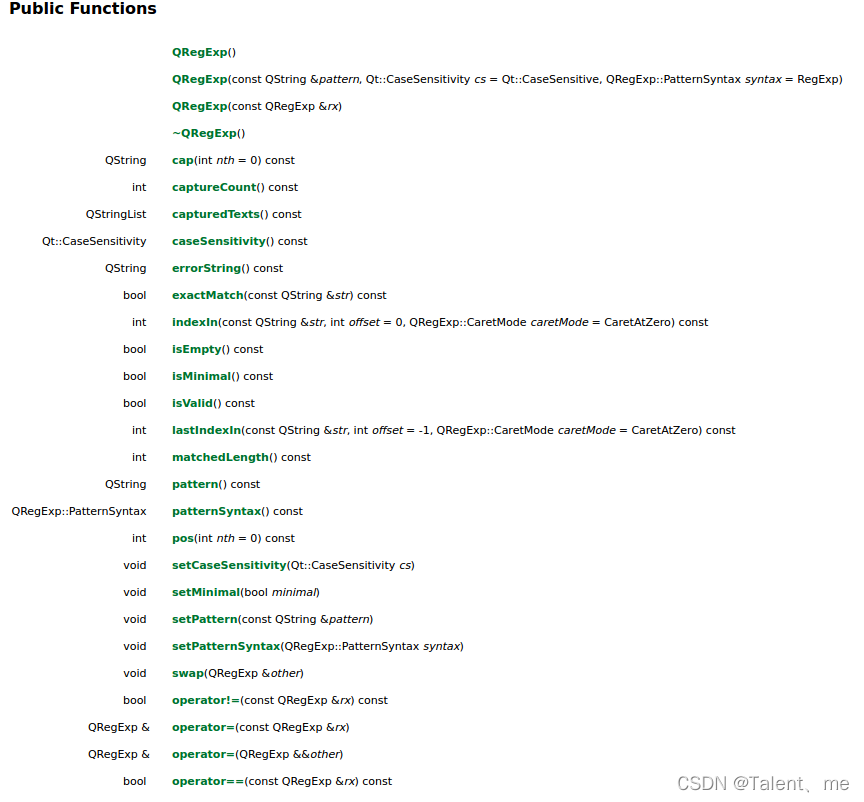

Druid SQL Is to query the proxy node Broker Functions implemented in , It mainly includes Server and SQL Layer Two modules .

Server The module is responsible for receiving and parsing requests , Include HTTP and JDBC Two types of . For ordinary HTTP request , Add corresponding REST Endpoint that will do . about JDBC,Druid Reuse the Avatica Project JDBC Driver and RPC Definition , So you just need to implement Avatica Of SPI That's it . because Avatica Of RPC Is based on HTTP Of , Therefore, both can use the same Jetty Server.

SQL Layer Responsible for SQL Translate into native JSON Inquire about , Is based on Calcite Project implementation .Calcite It's a universal SQL Optimizer framework , Be able to put the standard SQL analysis 、 analysis 、 Optimize into a specific implementation plan , It has been widely used in the field of big data . The light green component in the figure is Calcite Provided , The light blue component is Druid Realized , It mainly includes three .

First ,DruidSchema Components for Calcite Provide metadata required for query parsing and validation , For example, which tables are included in the cluster , The name and type of each field in each table .RulesSet Component defines the transformation rules used by the optimizer . because Druid SQL Only do language translation , So here are some logic optimization rules ( For example, projection elimination 、 Constant folding, etc ), Does not include physical optimization . adopt RulesSet,Calcite Will turn the logical plan into DruidRel node ,DruidRel Contains all the information of the query . Last ,QueryMaker The component will try to DruidRel Turn into one or more native JSON Inquire about , these JSON The query is finally submitted to Druid Of QueryExecution Module execution .

3.API choice : HTTP or JDBC

Druid SQL Provides HTTP and JDBC Two interfaces , Which one should I use ? Our experience is ,HTTP Applicable to all programming languages ,Broker No state , Operation and maintenance is relatively simple ; The disadvantage is that the client processing logic is relatively more .JDBC about Java Application friendly , But it leads to Broker Become a stateful node , This requires special attention when making complex equilibrium . in addition JDBC There are still some unresolved BUG, If you use JDBC Interface , Need extra attention .

4. improvement

Let's introduce our understanding of Druid SQL Some improvements made .

The first improvement is about Schema Derived performance optimization . We know Druid It's a schema-less System , It doesn't require all the data schema identical , How to define Druid Tabular schema Well ? The realization of community is : Through the first SegmentMetadataQuery Calculate each segment Of schema, Then merge segment schema Get the... Of the table segment, Last in segment Recalculate the entire table when changes occur schema.

The implementation of the community has encountered three problems in our scenario . The first is the Broker Too long startup time . We have a cluster with 60 m segment, The test found that light calculates these segment Of schema It will take half an hour . This can lead to Broker After starting , It takes half an hour to provide service . The second question is Broker You need to cache all... In memory Segment Metadata , Resulting in an increase in resident memory , in addition schema Refresh will bring great GC pressure . The third question is , The metadata query level submitted by the community scheme is the same as Broker and Segment Directly proportional to the product of the number , Therefore, the scalability is not good .

In response to this question , After analyzing the business requirements, we found that : First schema Change is a relatively low-frequency operation , That is, most segment Of schema It's the same , There is no need to double calculate . in addition , In most cases, businesses only need to use the latest schema To query . therefore , Our solution is , Use only the most recent period of time , Not everything segment To derive schema. After transformation ,broker Calculation schema The time of the was reduced from half an hour to 20 second ,GC The pressure is also significantly reduced .

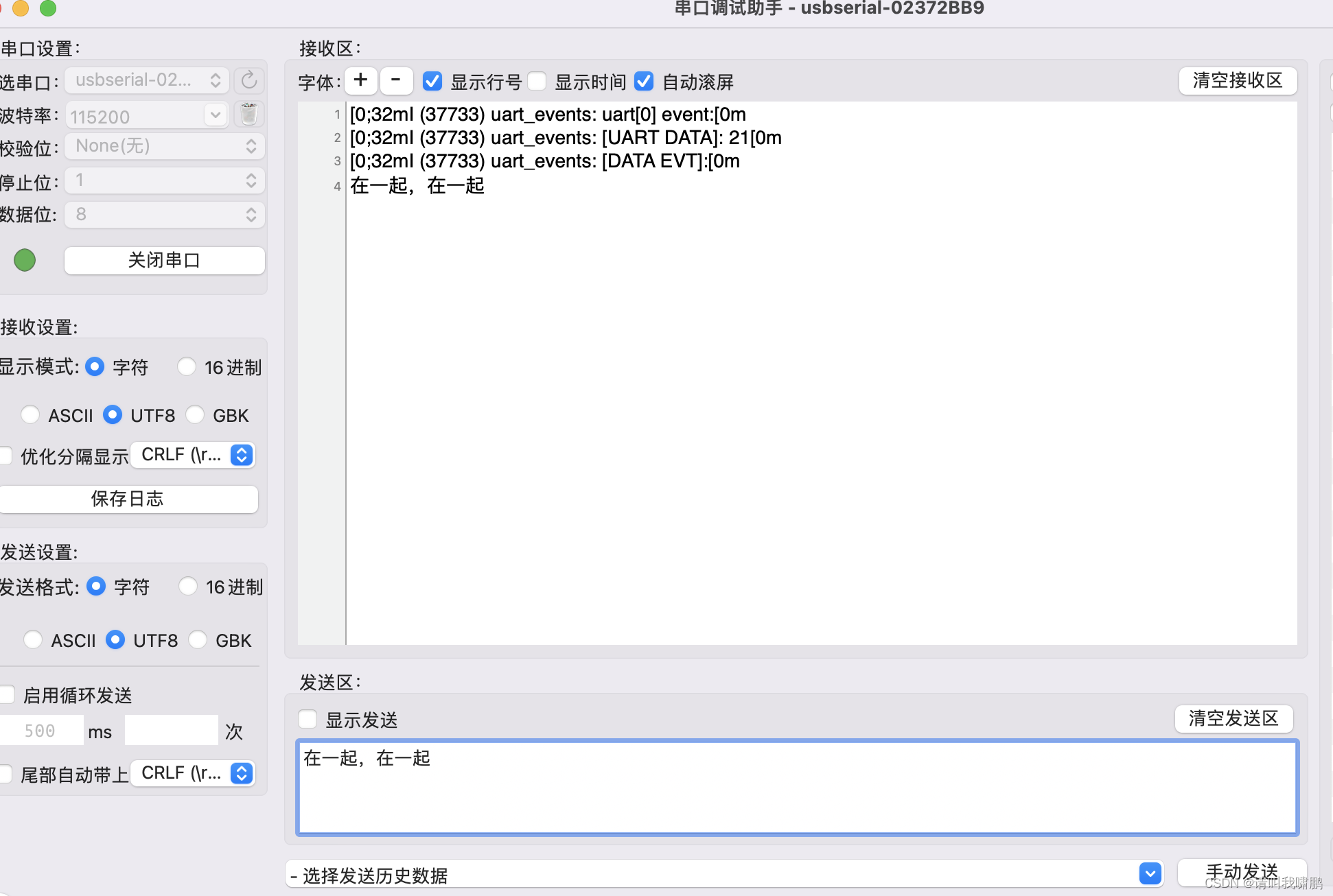

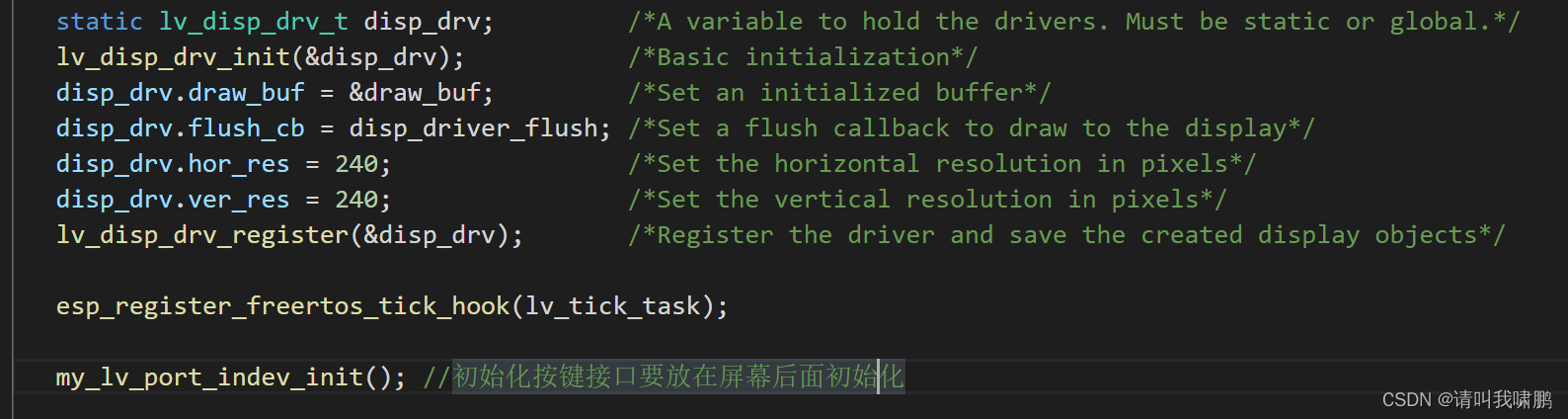

The second optimization is about logging and monitoring . Request logs and monitoring indicators are two tools we rely heavily on in the operation and maintenance process , For example, slow query positioning 、SLA Calculation of indicators 、 Both traffic monitoring and playback depend on the log . however 0.12 Version of SQL There is no request log , There are no monitoring indicators , This is a problem that must be solved before going online . We have two goals : First, you can record all SQL Basic information of the request , For example, request time 、 user 、SQL Content , Time consuming, etc ; Secondly, it can SQL Request and native JSON Query Association . Because the indicators at the implementation level are JSON Query granularity , We need to find JSON Query the corresponding original SQL Inquire about .

Our solution has been incorporated into 0.14 edition . First , We will give it to everyone SQL Request to assign a unique sqlQueryId. Then we expanded RequestLogger Interface , Added output SQL Log method . Here is an example , For each SQL request , Except for the output SQL Out of content , It will also output its sqlQueryId, It can be used to associate with the log of the client . It will also output SQL For each of them JSON Of the query queryId, It can be used to communicate with JSON Query and do association analysis .

The third improvement is relatively small , But it is important for the stability of the service . We know ,JSON The query requires the user to specify the time range of the query ,Druid Will use this range to do partition clipping , This is very important to improve performance . however Druid SQL There are no such restrictions . User write SQL Often forget to add a time limit , This leads to full table scanning , Occupy a lot of cluster resources , It's a big risk . So we added a pair of where Inspection of conditions , If the user does not specify the filter condition of the timestamp field , The query will directly report an error .

--

03 Druid Security The practical experience of

First, we introduce the problems we face in data security . At that time 0.10 edition , This version does not have any support for data security , be-all API No access control , Anyone can access or even delete all data , This is a very big hidden danger for business data security .

There are five goals we hope to achieve : all API All certified 、 Realization DB Granular access control 、 All data access has an audit log 、 Business can be smoothly upgraded to a secure cluster 、 Changes to the code are less intrusive .

To achieve these goals , We first investigated Druid New security features in subsequent versions .

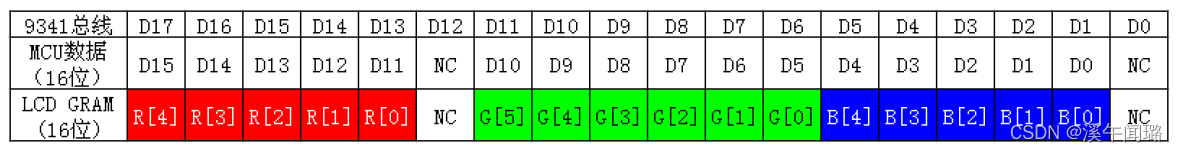

1.Druid Security Function and principle

0.11 This version supports end-to-end transport layer encryption (TLS), It can realize client to cluster , And the transport layer security between the nodes of the cluster .0.12 This version introduces an extensible authentication and authentication framework , And based on this framework , Provides BA and Kerberos And so on , And a role-based authentication module .

The following figure introduces the principle and configuration of authentication framework .

2.Druid Security Disadvantages of community programs

Community programs can meet most of our needs , But there are still some problems .

The first problem is that we found that the browser is right BA Certification support is poor . So for Web Console , We hope to take a unified SSO authentication .

The second problem is to support the smooth transition of business to security cluster , At the beginning of the launch, it must be compatible with non authenticated requests , What we used at the time was 0.12 Version does not have this feature .

The third problem is that the community role-based authentication module only provides the underlying management API, Users use these directly API Very inconvenient .

The last problem is that the community does not support audit logs yet .

To address these issues , We have made three major improvements .

3. improvement

Improve one : be based on DB Access control

First , To simplify the management of permissions , We introduced DB The concept of , And implemented DB Granular access control . Business goes through DB Read and write account access DB In the table .

Improvement 2 : Automatically manage permissions DB

Access platform maintenance through tasks DB and DataSource The mapping relation of , And in DB and DataSource When something changes , Call the authentication module interface to update the permission DB.

Improve three : Support SSO Authenticated and non authenticated access

Custom authentication chain , adopt SSO authentication Filter Realization Web Console SSO authentication , Through insecure access Filter The bottom line , Compatible with non authenticated requests .

matters needing attention

(1) Use 0.13 Above version ( perhaps cherrypick The high version of the bugfix)

(2) Online process

- Enable basic-security function , use allowAll The bottom line

- Initialize permissions DB, Create anonymous users and authorize

- take allowAll Replace with anonymous

- Gradually reclaim the rights of anonymous users

(3) Online sequence :coordinator->overlord->broker->historical->middleManager

--

04 summary

1. About SQL

(1) If you're still using native JSON query language , It is strongly recommended to try

(2) The community is improving SQL modular , It is recommended to use the latest version

(3)Druid SQL It is essentially a language translation layer

- It doesn't have much impact on query performance and stability

- Limited by Druid Its own query processing ability , Supported by SQL Limited function

(4) Pay attention to the pit

- Large clusters of schema Derivation efficiency

- Broker Need to wait schema Provide services after initialization (#6742)

2. About Security

(1)Druid Include Security characteristic , It is recommended to upgrade to the latest version to use

- Transport layer encryption

- Authentication framework

- BA and Kerberos authentication

- RBAC authentication

(2) The authentication framework is flexible enough , It can be expanded according to its own needs

(3) Experience the test of production environment , The degree of completion and stability are good enough

(4) Compatibility and node update sequence shall be fully considered before going online

Today's sharing is here , Thank you. .

This article was first published in WeChat official account “DataFunTalk”

Introduction to guests :

Da Yue Gao ,Apache Kylin PMC member ,Druid Commiter, Open source and database technology enthusiasts , For many years SQL Engine and big data system development experience . Currently in charge of meituan review OLAP Kernel development of engine 、 Platform construction 、 Business implementation and other work .

notes : Welcome to reprint , Please leave a message or private message for reprint .

Druid SQL and Security More relevant articles on the practice of meituan review

- US group review SQL Optimization tool SQLAdvisor Open source quick deployment

US group review SQL Optimization tool SQLAdvisor Open source quick deployment git clone https://github.com/Meituan-Dianping/SQLAdvisor.gityum install ...

- Apache Kylin Application in meituan comments

The official account of WeChat public is the main content of this article. . Thank Gao Dayue, the comment engineer of meituan, for writing and authorizing the reprint . Da Yue Gao , Meituan comment Engineer ,Apache Kylin PMC member , Currently, he is mainly responsible for meituan review data platform OLAP Construction of query engine . back ...

- US group review DBProxy Read write separation instructions

Purpose Because the business architecture needs to achieve read-write separation , Just a while ago, meituan's comments were open source 360Atlas Based on the development of read-write separation middleware DBProxy, Its introduction has been explained in detail in the official documents , Its main characteristics are : Read / write separation . Load balancing . ...

- Meituan's comments are based on MGR Of CMDB The way to build high availability architecture 【 turn 】

Wang Zhipeng US group review DBA Once worked in Jingdong finance as DBA, Currently working in meituan reviews , Mainly responsible for the operation and maintenance of financial business line database and basic component database . MySQL Group Replication( hereinafter referred to as MGR), On 5.7.17 edition ...

- US group review MySQL Database high availability architecture from MMM To MHA+Zebra as well as MHA+Proxy Evolution of

This article introduces the comments of meituan in recent years MySQL The evolution of database high availability architecture , And some of our innovations based on open source technology . meanwhile , It is also compared with other solutions in the industry , Learn about the industry's progress in high availability , And some of our plans and prospects for the future . MMM ...

- In depth and detailed interpretation of meituan comments CAT Cross language service monitoring ( 7、 ... and ) Message analyzer and report ( Two )

CrossAnalyzer- Call chain analysis In a distributed environment , Applications run in separate processes , It could be a different machine , Or different server processes . So if they want to connect with each other , Form a call chain , stay Cat in ,CrossAn ...

- In depth and detailed interpretation of meituan comments CAT Cross language service monitoring ( One ) CAT Introduction and deployment

Preface : CAT It is a real-time and near full monitoring system , It focuses on Java Application monitoring , In addition to comments RPC In addition to the good integration of components , He will be able to Spring.MyBatis.Dubbo Such framework and Log4j Equal combination , Support P ...

- Leaf—— Meituan comments distributed ID generating system

background In a complex distributed system , A lot of data and messages need to be uniquely identified . As in meituan's comments on Finance . payment . Restaurant . The hotel . Cat eye movies and other products in the system , Data is growing , There needs to be a unique table after the data sub base is divided ID To identify a data or message , Count ...

- US group review CAT Monitoring platform research

1. US group review CAT Monitoring platform research 1.1. Preface This article is based on my reading and recording of official documents , In the middle may be interspersed with some of their own thinking and pit 1.2. brief introduction CAT Is based on Java Developed real-time application monitoring platform , Point for meituan ...

- Hungry? Monitoring system EMonitor Comment with meituan CAT Comparison of

Background introduction Hungry? Monitoring system EMonitor: It is a one-stop monitoring system for all technical departments of hungry , It covers system monitoring . Container monitoring . network monitoring . Middleware monitoring . Business monitoring . Data storage and query of access layer monitoring and front-end monitoring . Total daily processing ...

Random recommendation

- PAT Class A 1001. A+B Format (20)

Original title : Calculate a + b and output the sum in standard format -- that is, the digits must be separated ...

- iOS There are four ways to log in

iOS There are four ways to log in One . Web page loading : http://www.cnblogs.com/tekkaman/archive/2013/02/21/2920218.ht ml [iOS The realization of landing ] A ...

- Luogu2045 Check the enhanced version

Title Description Give a n*n Matrix , Each lattice has a nonnegative integer Aij,(Aij <= 1000) Now from (1,1) set out , You can go right or down , We finally reached (n,n), Every time you reach a grid , Take out the number of the grid , The number of the grid becomes ...

- 【git】idea /git bash command Operation branch

1. demand Because there are some changes to be made to the project at present , And the project is about to go online , These new changes don't need to go online together , So at this time, we need to master Pull out another branch from the branch for development . 2. Branch operation open git bash Tools → Switch ...

- [C++]Linux Multi process running code framework

Statement : If you need to quote or extract the source code of this blog or its articles , Please indicate in the prominent place , From this blog / author , To show respect for the fruits of labor , Help open source spirit . Welcome to discuss , communication , To make progress together - 0.0 Multi process code framework example /* @url: ...

- swiper Added auto scroll effect , Then cross the page with your finger , Found that the auto scroll effect doesn't work

I give swiper Added auto scroll effect , Then cross the page with your finger , Found that the auto scroll effect doesn't work , What's wrong ? Add parameter autoplayDisableOnInteraction : false,

- python Deep copy, shallow copy

python Deep and shallow copy issues : What is deep copy ? ( Personal understanding ) A deep copy is as like as two peas. , Then save it to another address , Instead of quoting the address What is shallow copy ? ( Personal understanding ) Is the reference address (1) With an equal sign ...

- Finish adding users to the background ssm project , Full version

1:ssm Framework integration 1.1 add to maven rely on pom.xml <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns: ...

- Raspberry pie specifies static IP

1. Backup and empty interfaces file cp /etc/network/interfaces /etc/network/interfaces.bak vi /etc/network/interfa ...

- [ Roof placement ] Android Use in Movie Show gif Dynamic graph

Reprint please indicate : http://blog.csdn.net/u012975705/article/details/48717391 Before I read this blog, I'm right attr If you are not familiar with the custom properties of children's shoes, you can take a look first :An ...