
Fundamentals of machine learning theory -- some terms about machine learning

2022-04-23 18:39:00 【Capture bamboo shoots 123】


This post is based on the book "scikit-learn Machine Learning: Common Algorithm Principles and Programming Practice".

Cost function (error)

The cost measures how well the model agrees with the training samples.

The cost is the average error, over all training samples, between the values fitted by the model and the true values of those samples.

The cost function expresses the cost as a function of the model parameters:

$$J_{train}(\theta)=\frac{1}{2m}\sum_{i=1}^{m}\left(h_{\theta}(x^{i})-t^{i}\right)^2$$

where $h_{\theta}(x^{i})$ is the model's prediction for the i-th sample, $t^{i}$ is the true label of the i-th sample, and $m$ is the number of training samples.
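As a minimal sketch, the cost function can be computed directly with NumPy. The hypothesis `linear_h` (a straight line $\theta_0 + \theta_1 x$) and the toy data are assumptions chosen only for illustration; any model that maps inputs to predictions could be plugged in as `h`.

```python
import numpy as np

def cost(h, theta, x, t):
    """J(theta) = 1/(2m) * sum_i (h_theta(x^i) - t^i)^2."""
    m = len(t)
    residuals = h(theta, x) - t      # h_theta(x^i) - t^i for every sample
    return np.sum(residuals ** 2) / (2 * m)

def linear_h(theta, x):
    # Hypothetical hypothesis h_theta(x) = theta_0 + theta_1 * x, for illustration only
    return theta[0] + theta[1] * x

x = np.array([1.0, 2.0, 3.0])
t = np.array([2.0, 4.0, 6.0])
print(cost(linear_h, np.array([0.0, 2.0]), x, t))  # 0.0, the line t = 2x fits exactly
print(cost(linear_h, np.array([0.0, 1.0]), x, t))  # (1 + 4 + 9) / (2 * 3) ≈ 2.333
```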

Training a model means finding the parameters θ that make the value of the cost function as small as possible.
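One common way to find such parameters is batch gradient descent. The sketch below assumes the same straight-line hypothesis $h_\theta(x) = \theta_0 + \theta_1 x$ and noiseless toy data generated with $\theta = (1, 2)$; the learning rate and iteration count are illustrative choices, not values from the book.

```python
import numpy as np

def gradient_descent(x, t, lr=0.1, n_iters=5000):
    """Minimise J(theta) = 1/(2m) * sum (theta_0 + theta_1*x - t)^2 by batch gradient descent."""
    m = len(t)
    theta = np.zeros(2)
    for _ in range(n_iters):
        error = theta[0] + theta[1] * x - t                     # h_theta(x^i) - t^i
        grad = np.array([error.sum(), (error * x).sum()]) / m  # dJ/dtheta_0, dJ/dtheta_1
        theta -= lr * grad                                      # step against the gradient
    return theta

x = np.array([1.0, 2.0, 3.0])
t = 1.0 + 2.0 * x          # hypothetical noiseless data with theta = (1, 2)
theta = gradient_descent(x, t)
print(theta)               # converges to approximately [1. 2.]
```

For a linear model the minimum could also be found in closed form (least squares); gradient descent is shown because it generalises to models without a closed-form solution.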

Model accuracy

A data set can often be fitted by several models (for example, first-order, second-order, …, higher-order polynomials), and we want to pick the one that performs best. How, then, do we evaluate a model's performance?

We usually use the value of the cost function on the test set, $J_{test}(\theta)$, as the metric. The smaller $J_{test}(\theta)$ is, the smaller the error between the model's predictions and the true values of the samples, i.e. the better the model predicts new data.

$$J_{test}(\theta)=\frac{1}{2m}\sum_{i=1}^{m}\left(h_{\theta}(x^{i})-t^{i}\right)^2$$

Here the sum runs over the m samples of the test set.
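A minimal sketch of comparing polynomial models by $J_{test}$, assuming hypothetical data drawn from a quadratic trend plus Gaussian noise. Each model is fitted on the first 40 samples only, and its cost is evaluated on the remaining 20.

```python
import numpy as np

def j_cost(pred, t):
    """1/(2m) * sum of squared errors, i.e. half the mean squared error."""
    return np.mean((pred - t) ** 2) / 2

rng = np.random.default_rng(0)
x = rng.uniform(-3, 3, size=60)
t = x ** 2 + rng.normal(scale=0.3, size=x.size)   # hypothetical data: quadratic trend plus noise

# Fit each candidate on the training part, evaluate J_test on the held-out part.
x_train, t_train, x_test, t_test = x[:40], t[:40], x[40:], t[40:]
test_costs = {}
for degree in (1, 2, 3):
    coeffs = np.polyfit(x_train, t_train, degree)
    test_costs[degree] = j_cost(np.polyval(coeffs, x_test), t_test)
    print(f"degree {degree}: J_test = {test_costs[degree]:.3f}")
```

The first-order polynomial cannot follow the curvature, so its $J_{test}$ is far larger than that of the second-order one.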

In sklearn, estimators usually provide a score(X, y) method for evaluating a model's performance.
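As an illustration, for a regressor such as LinearRegression, score(X, y) returns the coefficient of determination R² (higher is better, 1.0 is a perfect fit), whereas for classifiers it returns mean accuracy. It is therefore not the same number as $J_{test}$, where lower is better. The noisy linear data below is a hypothetical example.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(100, 1))
y = 1.0 + 2.0 * X[:, 0] + rng.normal(scale=1.0, size=100)  # hypothetical linear data with noise

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)
model = LinearRegression().fit(X_train, y_train)
test_r2 = model.score(X_test, y_test)   # R^2 on the held-out test set
print(round(test_r2, 3))
```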

Cross-validation data set

Suppose we have a data set, want to extract some information from it, and have several candidate models to choose from. We then need to do three things:

1. Train the parameters of each candidate model.

2. Select the best of these models.

3. Evaluate the prediction accuracy of the selected model.

The main purpose of the test set is to measure the model's accuracy, and that requires data the model has not "seen". If the test data were already used in step 2, the model would have "seen" it. To solve this, we split the data set into three parts: a training set, a cross-validation set, and a test set. The extra part is the cross-validation data set.

In practice a cross-validation set is often omitted, because for a given data set we usually already know which model to use.
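The three steps can be sketched with sklearn's train_test_split applied twice, giving a 60/20/20 split. The quadratic data and the polynomial candidates (degrees 1 to 4, fitted with np.polyfit) are hypothetical choices for illustration.

```python
import numpy as np
from sklearn.model_selection import train_test_split

def j_cost(pred, t):
    return np.mean((pred - t) ** 2) / 2   # 1/(2m) * sum of squared errors

rng = np.random.default_rng(1)
x = rng.uniform(-3, 3, size=100)
t = x ** 2 + rng.normal(scale=0.3, size=100)   # hypothetical data

# Hold out 20% as the test set, then split the rest 75/25 into training and cross-validation.
x_rest, x_test, t_rest, t_test = train_test_split(x, t, test_size=0.2, random_state=0)
x_train, x_cv, t_train, t_cv = train_test_split(x_rest, t_rest, test_size=0.25, random_state=0)

# 1. Train the parameters of each candidate model on the training set only.
models = {d: np.polyfit(x_train, t_train, d) for d in (1, 2, 3, 4)}
# 2. Select the model with the lowest cost on the cross-validation set.
best = min(models, key=lambda d: j_cost(np.polyval(models[d], x_cv), t_cv))
# 3. Evaluate the chosen model on the test set, which played no part in steps 1 and 2.
j_test = j_cost(np.polyval(models[best], x_test), t_test)
print(len(x_train), len(x_cv), len(x_test), best, round(j_test, 3))
```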

The learning curve

A learning curve plots the cost function values on the training set and on the test set (vertical axis) against the size of the training set (horizontal axis).

The learning curve can be drawn with the interface provided by sklearn:

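```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.model_selection import learning_curve, ShuffleSplit

def plot_learning_curve(estimator, x, y, cv=None, n_jobs=1,
                        train_sizes=np.linspace(.1, 1.0, 5)):
    # cv can be an integer or a splitter, e.g. ShuffleSplit(n_splits=10, test_size=0.2)
    # learning_curve returns the absolute training-set sizes and, for each size,
    # the training and validation scores (one column per CV split).
    # Note: these are the estimator's scores (higher is better), not the cost J.
    train_sizes, train_score, test_score = learning_curve(
        estimator, x, y, cv=cv, n_jobs=n_jobs, train_sizes=train_sizes)

    # Mean and standard deviation across the CV splits
    train_score_mean = np.mean(train_score, axis=1)
    train_score_std = np.std(train_score, axis=1)
    test_score_mean = np.mean(test_score, axis=1)
    test_score_std = np.std(test_score, axis=1)

    # Shade one standard deviation around each mean curve
    plt.fill_between(train_sizes, train_score_mean - train_score_std,
                     train_score_mean + train_score_std, alpha=0.1, color='r')
    plt.fill_between(train_sizes, test_score_mean - test_score_std,
                     test_score_mean + test_score_std, alpha=0.1, color='g')
    plt.plot(train_sizes, train_score_mean, 'o-', c='r', label='Training score')
    plt.plot(train_sizes, test_score_mean, 'o-', c='g', label='Cross-validation score')
    plt.xlabel('Training examples')
    plt.ylabel('Score')
    plt.legend(loc='best')
    return plt
```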

What the learning curve shows: as the amount of training data grows, how the model's accuracy on the training data and its prediction accuracy on the cross-validation set change.
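The numbers behind such a plot can be inspected without drawing anything. This sketch assumes hypothetical noisy linear data, a LinearRegression estimator and a ShuffleSplit with 10 splits; the scores are R² values, so higher is better.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import learning_curve, ShuffleSplit

rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(100, 1))
y = 1.0 + 2.0 * X[:, 0] + rng.normal(scale=1.0, size=100)  # hypothetical noisy linear data

cv = ShuffleSplit(n_splits=10, test_size=0.2, random_state=0)
sizes, train_scores, val_scores = learning_curve(
    LinearRegression(), X, y, cv=cv, train_sizes=np.linspace(0.1, 1.0, 5))

# One row per training-set size, one column per CV split
for n, tr, va in zip(sizes, train_scores.mean(axis=1), val_scores.mean(axis=1)):
    print(f"{n:3d} samples: train R^2 = {tr:.3f}, validation R^2 = {va:.3f}")
```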

Overfitting

The model fits the training samples very well, but its prediction accuracy on the cross-validation set (new data) is low.

Solutions

Get more training data

When overfitting has occurred, increasing the amount of training data can effectively improve the model's performance.
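A minimal sketch of this effect, assuming hypothetical data from $\sin(2\pi x)$ plus noise and a deliberately over-complex degree-9 polynomial (fitted with NumPy's Polynomial.fit). With 12 training samples the training cost is tiny but the test cost is large; with 1000 samples the same model's test cost drops close to the noise level.

```python
import numpy as np
from numpy.polynomial import Polynomial

def j_cost(pred, t):
    return np.mean((pred - t) ** 2) / 2   # 1/(2m) * sum of squared errors

def make_data(n, rng):
    x = rng.uniform(0, 1, size=n)
    return x, np.sin(2 * np.pi * x) + rng.normal(scale=0.2, size=n)   # hypothetical data

rng = np.random.default_rng(0)
x_test, t_test = make_data(1000, rng)

results = {}
for n_train in (12, 1000):
    x_train, t_train = make_data(n_train, rng)
    p = Polynomial.fit(x_train, t_train, deg=9)   # same over-complex model each time
    results[n_train] = (j_cost(p(x_train), t_train), j_cost(p(x_test), t_test))
    print(n_train, "train J = %.4f, test J = %.4f" % results[n_train])
```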

Reduce the number of input features

Overfitting indicates, to some extent, that the model is too complex. In this case we can try reducing the number of input features, which lowers both the model's computational cost and its complexity.
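To illustrate, the sketch below assumes a hypothetical data set with only 25 training samples, one informative feature and 20 pure-noise features. Fitting LinearRegression on all 21 features overfits (low training cost, high test cost); keeping only the informative feature gives a much lower test cost. In real data the useful features are not known in advance, so this only shows why removing irrelevant features can help.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

def j_cost(pred, t):
    return np.mean((pred - t) ** 2) / 2   # 1/(2m) * sum of squared errors

rng = np.random.default_rng(0)
n_train, n_test, n_noise = 25, 1000, 20
# Hypothetical data: column 0 drives the target, the other 20 columns are pure noise
X = rng.normal(size=(n_train + n_test, 1 + n_noise))
y = 3.0 * X[:, 0] + rng.normal(scale=0.5, size=n_train + n_test)
X_train, X_test = X[:n_train], X[n_train:]
y_train, y_test = y[:n_train], y[n_train:]

costs = {}
for name, cols in (("all 21 features", slice(None)), ("feature 0 only", slice(0, 1))):
    model = LinearRegression().fit(X_train[:, cols], y_train)
    costs[name] = (j_cost(model.predict(X_train[:, cols]), y_train),
                   j_cost(model.predict(X_test[:, cols]), y_test))
    print(f"{name}: train J = {costs[name][0]:.3f}, test J = {costs[name][1]:.3f}")
```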

Underfitting

The model cannot fit the training samples well, and its prediction accuracy on the cross-validation set (new data) is also low.

Solutions

Add valuable features

Underfitting indicates that the model is too simple, possibly because there are too few input features. We can try to mine more new features from the original data.

Add polynomial features

Sometimes it is hard to mine new features from the original data. In that case we can multiply some of the original features together, or square them, to create new features. This is equivalent to raising the order of the model:

$$x_1, x_2 \rightarrow x_1^2, x_2^2, x_1x_2$$

Copyright notice

This article was written by [Capture bamboo shoots 123]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204231835454702.html

边栏推荐

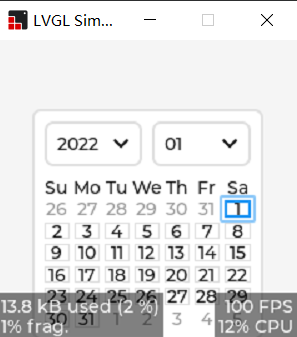

- ESP32 LVGL8. 1 - calendar (calendar 25)

- ESP32 LVGL8. 1 - slider slider (slider 22)

- 程序员如何快速开发高质量的代码?

- Introduction to quantexa CDI syneo platform

- SQL database syntax learning notes

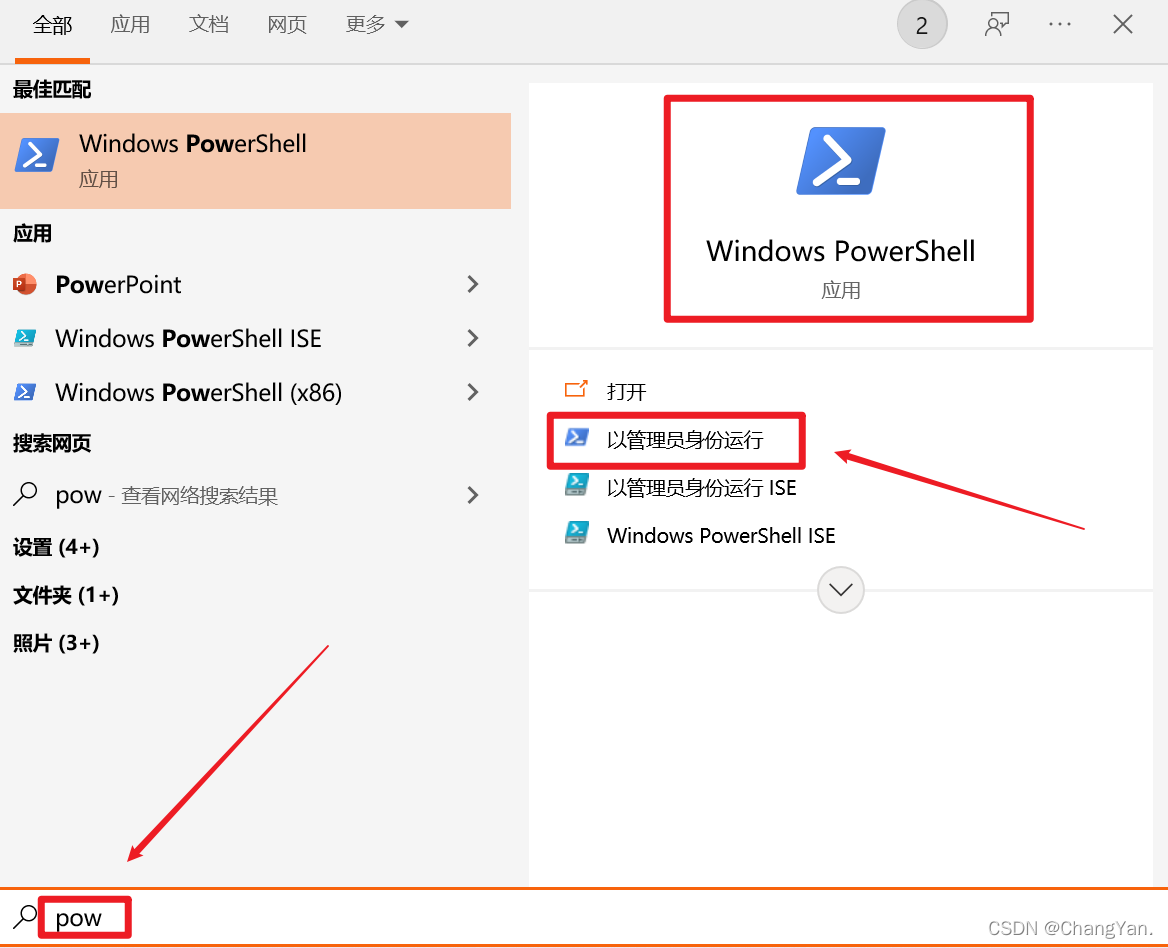

- 解决:cnpm : 無法加載文件 ...\cnpm.ps1,因為在此系統上禁止運行脚本

- CISSP certified daily knowledge points (April 19, 2022)

- If condition judgment in shell language

- Daily CISSP certification common mistakes (April 15, 2022)

- Chondroitin sulfate in vitreous

猜你喜欢

![[popular science] CRC verification (I) what is CRC verification?](/img/80/a1fa10ce6781aebf1b53d91fba52f4.png)

[popular science] CRC verification (I) what is CRC verification?

Summary of actual business optimization scheme - main directory - continuous update

视频边框背景如何虚化,简单操作几步实现

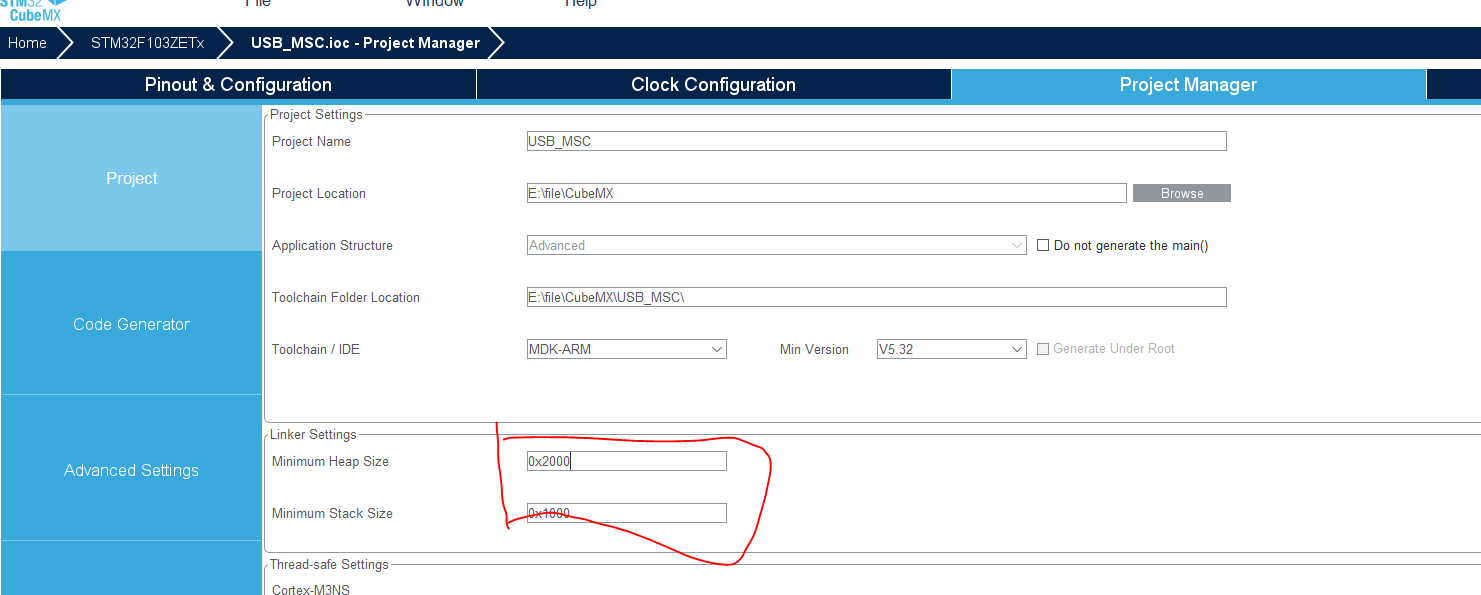

Use stm32cube MX / stm32cube ide to generate FatFs code and operate SPI flash

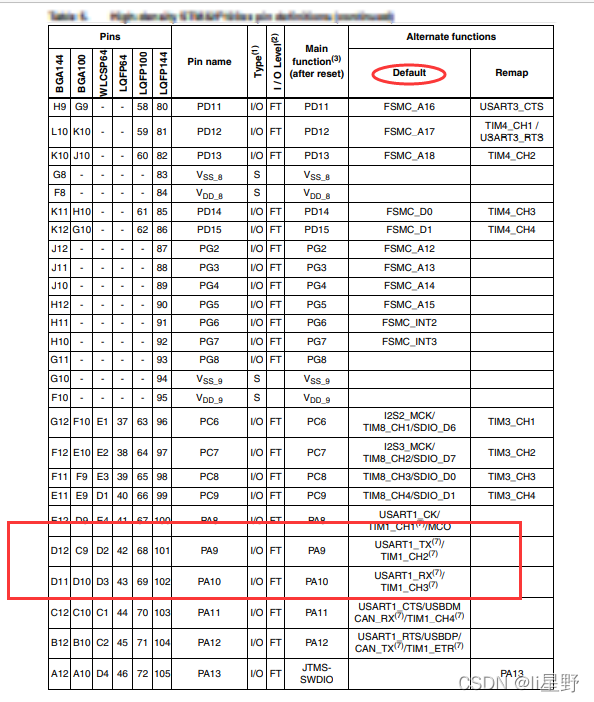

STM32 learning record 0008 - GPIO things 1

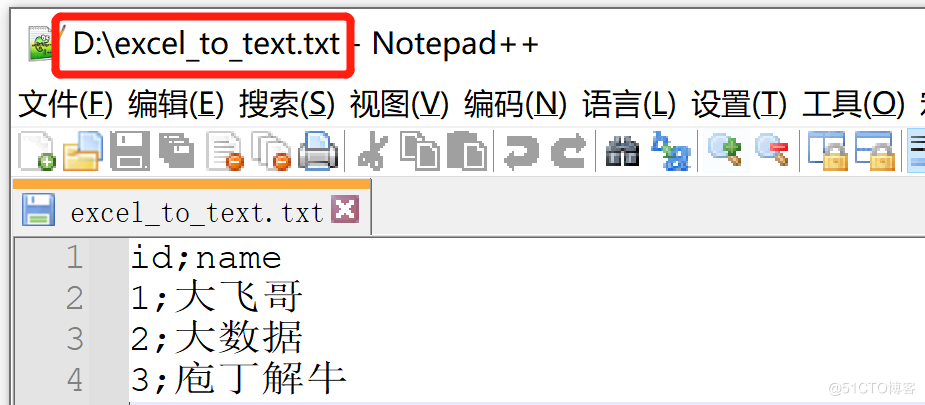

Kettle paoding jieniu Chapter 17 text file output

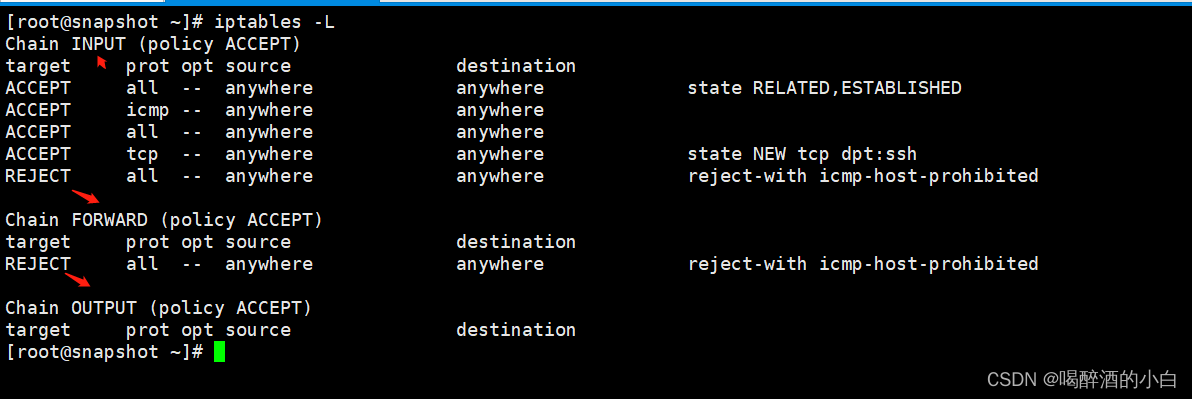

On iptables

Spark performance optimization guide

Resolution: cnpm: unable to load file \cnpm. PS1, because running scripts is prohibited on this system

ESP32 LVGL8. 1 - calendar (calendar 25)

随机推荐

Daily CISSP certification common mistakes (April 13, 2022)

Cutting permission of logrotate file

CISSP certified daily knowledge points (April 15, 2022)

iptables初探

Teach you to quickly rename folder names in a few simple steps

Dynamically add default fusing rules to feign client based on sentinel + Nacos

After CANopen starts PDO timing transmission, the heartbeat frame time is wrong, PDO is delayed, and CANopen time axis is disordered

Halo open source project learning (VII): caching mechanism

ctfshow-web362(SSTI)

ESP32 LVGL8. 1 - calendar (calendar 25)

数据库上机实验四(数据完整性与存储过程)

解决:cnpm : 無法加載文件 ...\cnpm.ps1,因為在此系統上禁止運行脚本

Daily network security certification test questions (April 18, 2022)

Imx6 debugging LVDS screen technical notes

ctfshow-web361(SSTI)

Query the logistics update quantity according to the express order number

使用 bitnami/postgresql-repmgr 镜像快速设置 PostgreSQL HA

K210 serial communication

The connection of imx6 network port is unstable after power on

Daily network security certification test questions (April 12, 2022)