Waymo dataset usage introduction (waymo-open-dataset)

2022-08-11 06:16:00 【zhSunw】

Many blog posts already introduce the Waymo dataset in detail. But what data does it actually contain? How do you use Waymo's visualization tools? How do you read the information out? I looked around and found very little on these questions, so here is a brief summary, both for readers and for my own future reference.

1. Downloading the Waymo dataset

2. Waymo Open Dataset Tutorial

Go to the official Waymo repository to install the related tools and configure the environment. I won't go into detail here:

https://github.com/waymo-research/waymo-open-dataset.git

3. Loading the data and reading its information

3.1 Loading the dataset

Just replace FILENAME with the path to your own tfrecord file:

import os
import math
import numpy as np
import itertools
import tensorflow as tf

from waymo_open_dataset.utils import range_image_utils
from waymo_open_dataset.utils import transform_utils
from waymo_open_dataset.utils import frame_utils
from waymo_open_dataset import dataset_pb2 as open_dataset

import matplotlib.pyplot as plt
import matplotlib.patches as patches

FILENAME = "your tfrecord file"
dataset = tf.data.TFRecordDataset(FILENAME, compression_type='')

`dataset` holds all the frames of one tfrecord file. A file typically contains about 199 frames sampled at 10 Hz, so it covers roughly 20 seconds of data.
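The frame count, sampling rate, and duration quoted above can be cross-checked from per-frame timestamps. The following is a minimal sketch, assuming the timestamps were collected from each frame's `timestamp_micros` field (in microseconds). The helper name `segment_stats` is hypothetical and the timestamps used here are synthetic, not taken from a real file.

```python
def segment_stats(timestamps_micros):
    """Return (frame_count, duration_seconds, mean_rate_hz) for one segment."""
    ts = sorted(timestamps_micros)
    count = len(ts)
    if count < 2 or ts[-1] == ts[0]:
        # Not enough information to estimate a duration or a rate.
        return count, 0.0, 0.0
    duration = (ts[-1] - ts[0]) / 1e6  # microseconds -> seconds
    rate = (count - 1) / duration      # intervals per second
    return count, duration, rate


# Synthetic example: 199 frames spaced 100 ms apart (10 Hz).
synthetic = [i * 100_000 for i in range(199)]
count, duration, rate = segment_stats(synthetic)
print(count, round(duration, 1), round(rate, 1))
```

With real data, the list would be filled inside the loop over `dataset`, one timestamp per parsed frame.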

3.2 Reading the information

Iterating over `dataset` yields the information of each frame. The available fields are described in the comment below; adapt the loop to whichever ones you need.

for data in dataset:
    frame = open_dataset.Frame()
    frame.ParseFromString(bytearray(data.numpy()))
    # print(frame.laser_labels)
    '''
    camera_labels: image coordinates, size, type, etc. of the objects detected in the 5 cameras, indexed 0 to 4 in each frame
    context: intrinsic and extrinsic parameters of the cameras and lidars, beam inclination values
    images: camera images
    laser_labels: XYZ coordinates, size, heading, object type, etc. of the objects labeled in the lidar data (box coordinates are in the vehicle frame)
    lasers: lidar points
    no_label_zones: settings for unlabeled areas (see the documentation for details)
    pose: vehicle pose and position
    projected_lidar_labels: image coordinates of the lidar-detected objects after projection into the camera images
    timestamp_micros: timestamp
    '''
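To get a quick overview of what one frame holds, the fields listed above can be gathered into a small dictionary. This is only a sketch: `summarize_frame` is a hypothetical helper, and the stand-in object below merely mimics the attribute names of a parsed frame so that the example runs without the dataset. `context.name` is assumed to hold the segment name.

```python
from types import SimpleNamespace


def summarize_frame(frame):
    """Collect a few counts from a Frame-like object into a plain dict."""
    return {
        "segment": frame.context.name,
        "timestamp_micros": frame.timestamp_micros,
        "num_images": len(frame.images),
        "num_lasers": len(frame.lasers),
        "num_laser_labels": len(frame.laser_labels),
        "num_camera_label_groups": len(frame.camera_labels),
    }


# Stand-in object for illustration only; with real data, pass the parsed
# open_dataset.Frame from the loop instead.
fake_frame = SimpleNamespace(
    context=SimpleNamespace(name="example_segment"),
    timestamp_micros=1_500_000_000_000_000,
    images=[object()] * 5,
    lasers=[object()] * 5,
    laser_labels=[object()] * 12,
    camera_labels=[object()] * 5,
)
print(summarize_frame(fake_frame))
```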

3.3 Parse the data to obtain point cloud information

# Parse the frame into range images and camera projections
(range_images, camera_projections, range_image_top_pose) = frame_utils.parse_range_image_and_camera_projection(frame)
# Convert the range images into lidar point clouds
(points, cp_points) = frame_utils.convert_range_image_to_point_cloud(frame, range_images, camera_projections, range_image_top_pose)

3.4 Printing the label information

Note: do not use a tfrecord file from the testing split (a silly mistake, but I made it anyway). The reason is obvious: the testing data has no labels.

# Index matches the Label.Type enum: 0=UNKNOWN, 1=VEHICLE, 2=PEDESTRIAN, 3=SIGN, 4=CYCLIST
obj_types = ['UNKNOWN', 'VEHICLE', 'PEDESTRIAN', 'SIGN', 'CYCLIST']

for data in dataset:
    frame = open_dataset.Frame()
    frame.ParseFromString(bytearray(data.numpy()))
    """
    (range_images, camera_projections, range_image_top_pose) = frame_utils.parse_range_image_and_camera_projection(frame)
    points, cp_points = frame_utils.convert_range_image_to_point_cloud(frame, range_images, camera_projections, range_image_top_pose)
    """
    for label in frame.laser_labels:  # all object labels of the current frame
        obj_type = obj_types[label.type]
        x = label.box.center_x
        y = label.box.center_y
        z = label.box.center_z
        l = label.box.length  # extent along the box x axis
        w = label.box.width   # extent along the box y axis
        h = label.box.height  # extent along the box z axis
        r = label.box.heading
        v_x = label.metadata.speed_x
        v_y = label.metadata.speed_y
        # information of bounding box
        one_obj = obj_type + str(':' + '\n'
                                 + 'shape : {' + str(l) + ',' + str(w) + ',' + str(h) + '}\n'
                                 + 'position : {' + str(x) + ',' + str(y) + ',' + str(z) + '}\n'
                                 + 'angle : {' + str(r) + '}\n'
                                 + 'speed : {' + str(v_x) + ',' + str(v_y) + '}\n')
        print(one_obj + "\n")
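Beyond printing every label, it is often handy to aggregate them, for example counting objects per type or turning the two speed components into a single magnitude. The sketch below assumes label objects that expose `type`, `metadata.speed_x`, and `metadata.speed_y` as in the loop above. `count_by_type`, `speed_magnitude`, and `fake_label` are hypothetical names, and the labels are made-up stand-ins rather than real data.

```python
import math
from collections import Counter
from types import SimpleNamespace

# Index matches the Label.Type enum values 0..4 from the label definition.
TYPE_NAMES = ["UNKNOWN", "VEHICLE", "PEDESTRIAN", "SIGN", "CYCLIST"]


def count_by_type(labels):
    """Count labels per object type name."""
    return Counter(TYPE_NAMES[label.type] for label in labels)


def speed_magnitude(label):
    """Planar speed computed from the speed_x and speed_y metadata fields."""
    return math.hypot(label.metadata.speed_x, label.metadata.speed_y)


def fake_label(type_id, speed_x, speed_y):
    """Stand-in for a Label message, for illustration only."""
    return SimpleNamespace(
        type=type_id,
        metadata=SimpleNamespace(speed_x=speed_x, speed_y=speed_y),
    )


labels = [fake_label(1, 3.0, 4.0), fake_label(1, 0.0, 0.0), fake_label(2, 1.0, 0.0)]
print(count_by_type(labels))
print(speed_magnitude(labels[0]))
```

With real data, `frame.laser_labels` can be passed to `count_by_type` directly.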

For reference, here is the definition of the label data. Find the fields you need in it and print them:

/* Copyright 2019 The Waymo Open Dataset Authors. All Rights Reserved.

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
==============================================================================*/

syntax = "proto2";

package waymo.open_dataset;

message Label {
  // Upright box, zero pitch and roll.
  message Box {
    // Box coordinates in vehicle frame.
    optional double center_x = 1;
    optional double center_y = 2;
    optional double center_z = 3;

    // Dimensions of the box. length: dim x. width: dim y. height: dim z.
    optional double length = 5;
    optional double width = 4;
    optional double height = 6;

    // The heading of the bounding box (in radians). The heading is the angle
    // required to rotate +x to the surface normal of the box front face. It is
    // normalized to [-pi, pi).
    optional double heading = 7;

    enum Type {
      TYPE_UNKNOWN = 0;
      // 7-DOF 3D (a.k.a upright 3D box).
      TYPE_3D = 1;
      // 5-DOF 2D. Mostly used for laser top down representation.
      TYPE_2D = 2;
      // Axis aligned 2D. Mostly used for image.
      TYPE_AA_2D = 3;
    }
  }

  optional Box box = 1;

  message Metadata {
    optional double speed_x = 1;
    optional double speed_y = 2;
    optional double accel_x = 3;
    optional double accel_y = 4;
  }

  optional Metadata metadata = 2;

  enum Type {
    TYPE_UNKNOWN = 0;
    TYPE_VEHICLE = 1;
    TYPE_PEDESTRIAN = 2;
    TYPE_SIGN = 3;
    TYPE_CYCLIST = 4;
  }

  optional Type type = 3;

  // Object ID.
  optional string id = 4;

  // The difficulty level of this label. The higher the level, the harder it is.
  enum DifficultyLevel {
    UNKNOWN = 0;
    LEVEL_1 = 1;
    LEVEL_2 = 2;
  }

  // Difficulty level for detection problem.
  optional DifficultyLevel detection_difficulty_level = 5;

  // Difficulty level for tracking problem.
  optional DifficultyLevel tracking_difficulty_level = 6;

  // The total number of lidar points in this box.
  optional int32 num_lidar_points_in_box = 7;
}

// Non-self-intersecting 2d polygons. This polygon is not necessarily convex.
message Polygon2dProto {
  repeated double x = 1;
  repeated double y = 2;

  // A globally unique ID.
  optional string id = 3;
}
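The comments in the `Box` message say that length, width, and height lie along the box's x, y, and z axes, and that the heading is an angle in radians applied to +x. From these seven numbers the eight corners of the box can be recovered. The following is a minimal sketch, assuming the heading is a counter-clockwise rotation about the +z axis of the vehicle frame (my reading of the proto comment, not something the source states outright). `box_corners` is a hypothetical helper name.

```python
import numpy as np


def box_corners(center_x, center_y, center_z, length, width, height, heading):
    """Return the 8 corners of an upright 3D box as an array of shape (8, 3)."""
    # Corner offsets in the box's own frame:
    # x along length, y along width, z along height.
    dx, dy, dz = length / 2.0, width / 2.0, height / 2.0
    offsets = np.array([[sx * dx, sy * dy, sz * dz]
                        for sx in (-1, 1)
                        for sy in (-1, 1)
                        for sz in (-1, 1)])
    # Rotation about the z axis by the heading angle (zero pitch and roll).
    c, s = np.cos(heading), np.sin(heading)
    rot_z = np.array([[c, -s, 0.0],
                      [s, c, 0.0],
                      [0.0, 0.0, 1.0]])
    center = np.array([center_x, center_y, center_z])
    return offsets @ rot_z.T + center


# Made-up box: 4 x 2 x 1.5, centered at (10, 0, 1), heading 0.
corners = box_corners(10.0, 0.0, 1.0, 4.0, 2.0, 1.5, 0.0)
print(corners.min(axis=0), corners.max(axis=0))
```

With a real label, the arguments would be `label.box.center_x`, `label.box.center_y`, `label.box.center_z`, `label.box.length`, `label.box.width`, `label.box.height`, and `label.box.heading`.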

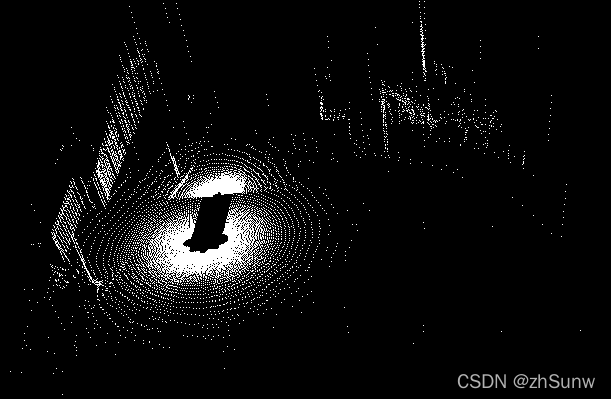

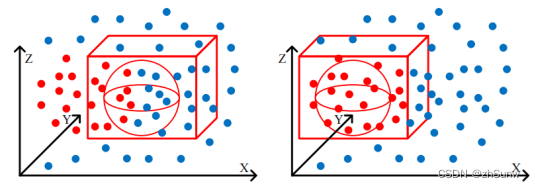

3.5 Point cloud visualization

Get the point cloud from the frame information:

`points` contains the point clouds from the 5 lidars. Concatenate them to get the full point cloud `points_all`:

(range_images, camera_projections, range_image_top_pose) = frame_utils.parse_range_image_and_camera_projection(frame)
points, cp_points = frame_utils.convert_range_image_to_point_cloud(frame, range_images, camera_projections, range_image_top_pose)
points_all = np.concatenate(points, axis=0)
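Once the per-lidar arrays are merged, a common next step is to crop the cloud to a region of interest before displaying it. The sketch below assumes `points` is a list of arrays of shape (N_i, 3) with x, y, z in metres in the vehicle frame. `merge_and_crop` and the 50 m radius are hypothetical choices for illustration, and the arrays are tiny made-up examples.

```python
import numpy as np


def merge_and_crop(points_per_lidar, max_range):
    """Concatenate per-lidar (N_i, 3) arrays and keep points whose
    distance from the origin in the x-y plane is at most max_range."""
    merged = np.concatenate(points_per_lidar, axis=0)
    planar_dist = np.linalg.norm(merged[:, :2], axis=1)
    return merged[planar_dist <= max_range]


# Two made-up "lidars": one point is far outside the 50 m radius.
lidar_a = np.array([[1.0, 0.0, 0.0], [100.0, 0.0, 0.0]])
lidar_b = np.array([[0.0, 2.0, 5.0]])
cropped = merge_and_crop([lidar_a, lidar_b], max_range=50.0)
print(cropped.shape)
```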

Call pc_show() to display it:

from mayavi import mlab  # required for the 3D viewer


def pc_show(points):
    fig = mlab.figure(bgcolor=(0, 0, 0), size=(640, 500))
    x = points[:, 0]
    y = points[:, 1]
    z = points[:, 2]
    col = z  # color the points by height
    print(x.shape)
    mlab.points3d(x, y, z,
                  col,  # Values used for Color
                  mode="point",
                  colormap='spectral',  # 'bone', 'copper', 'gnuplot'
                  # color=(0, 1, 0),  # Used a fixed (r,g,b) instead
                  figure=fig,
                  )
    mlab.show()


pc_show(points_all)
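If mayavi is not available, a rough top-down view can be produced with NumPy alone by counting points per grid cell. This is a swapped-in alternative to the viewer above, not the method used in this post. `bev_counts`, the 75 m extent, and the 0.5 m cell size are all hypothetical choices, and the points are made up.

```python
import numpy as np


def bev_counts(points, x_range=(-75.0, 75.0), y_range=(-75.0, 75.0), cell=0.5):
    """Count points per cell of a top-down grid; points outside the ranges are ignored."""
    nx = int(round((x_range[1] - x_range[0]) / cell))
    ny = int(round((y_range[1] - y_range[0]) / cell))
    counts, _, _ = np.histogram2d(
        points[:, 0], points[:, 1],
        bins=(nx, ny),
        range=(x_range, y_range),
    )
    return counts


# Made-up points: two share a cell, one lies outside the grid.
demo_points = np.array([
    [0.1, 0.1, 0.0],
    [0.2, 0.2, 1.0],
    [10.0, -10.0, 0.0],
    [500.0, 0.0, 0.0],
])
grid = bev_counts(demo_points)
print(grid.shape, grid.sum())
```

The resulting array can then be shown with `plt.imshow`, using the matplotlib import from section 3.1.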

边栏推荐

猜你喜欢

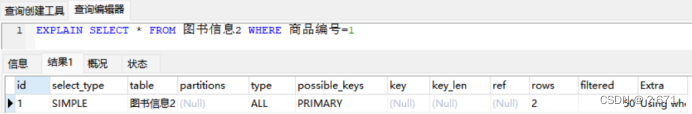

对MySQL查询语句的分析

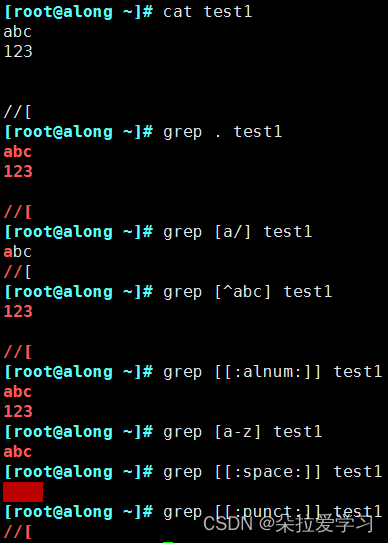

正则表达式与绕过案例

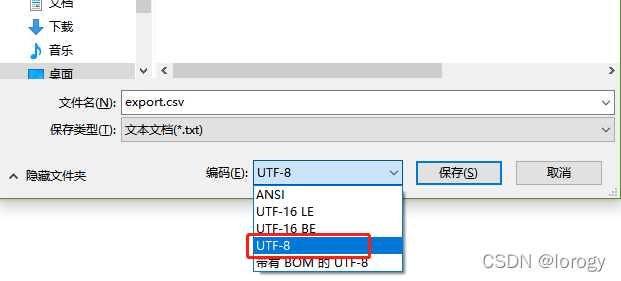

【sqlyog】【mysql】csv导入问题

AI智能图像识别的工作原理及行业应用

Reconstruction and Synthesis of Lidar Point Clouds of Spray

uniapp 在HBuilder X中配置微信小程序开发工具

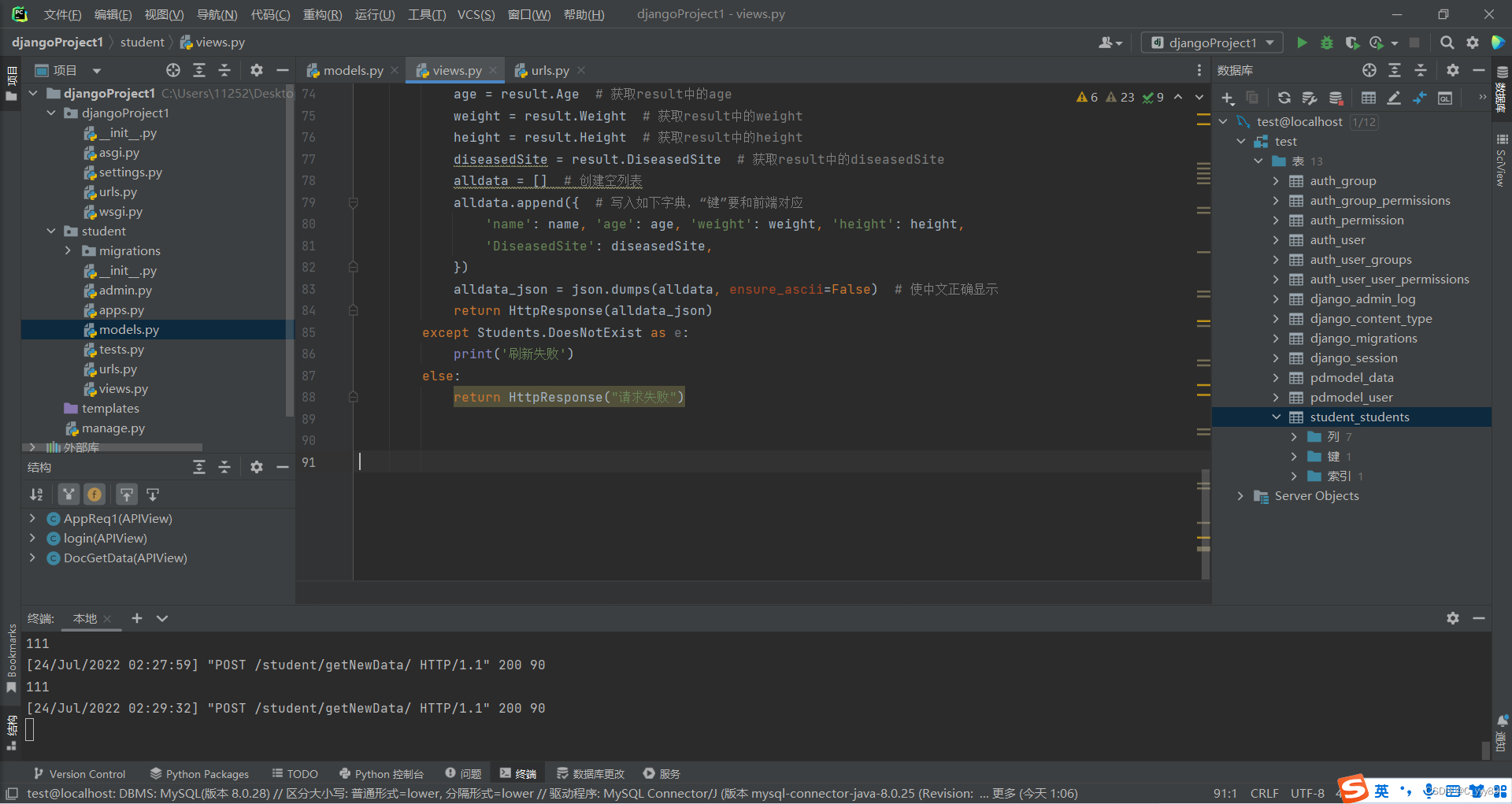

Mei cole studios - sixth DjangoWeb application framework + MySQL database training

SCNet:Semantic Consistency Networks for 3D Object Detection

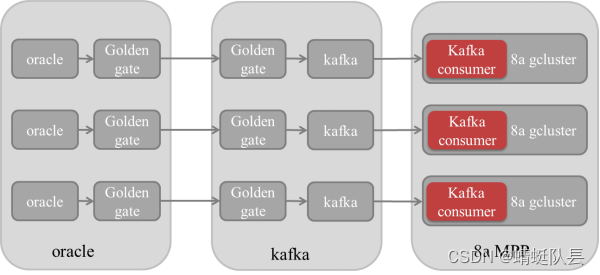

GBase 8a MPP Cluster产品高级特性

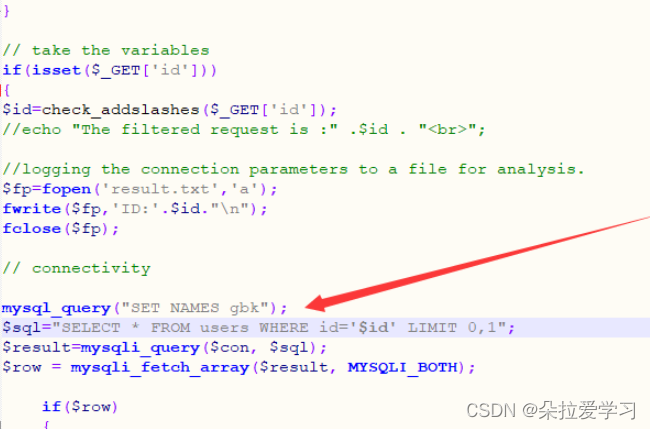

order by注入,limit注入,宽字节注入

随机推荐

GBase 8s性能简介

Zhejiang University School of Software 2020 Guarantee Research Computer Real Question Practice

解决Glide图片缓存问题,同一url换图片不起作用问题

Maykle Studio - HarmonyOS Application Development Third Training

Joint 3D Instance Segmentation and Object Detection for Autonomous Driving

CVPR2022——Not All Points Are Equal : IA-SSD

对MySQL查询语句的分析

Redis哨兵模式

梅科尔工作室-深度学习第二讲 BP神经网络

Maykel Studio - Django Web Application Framework + MySQL Database Second Training

TAMNet: A loss-balanced multi-task model for simultaneous detection and segmentation

Joint 3D Instance Segmentation and Object Detection for Autonomous Driving

动画(其二)

GBase 8a语法格式

软件架构之--MVC、MVP、MVVM

GBase 8s的多线程结构

使用TD-workbench管理tDengine数据库数据

2022年最新安全帽佩戴识别系统

@2022-02-22:每日一语

梅科尔工作室-DjangoWeb 应用框架+MySQL数据库第五次培训