4.1 - Support Vector Machines

2022-08-11 07:47:00 【A big boa constrictor 6666】

The binary classification problem from the previous chapter

- Because the ideal loss function (the yellow curve in the original figure on the right) cannot be optimized with gradient descent, we approximate it by replacing $\delta$ with $l$. Many different functions can serve as $l$, for example: square loss, sigmoid + square loss, sigmoid + cross entropy, and hinge loss.

- When the data contains outliers, hinge loss often performs better than cross entropy.
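The candidate losses listed above can be compared numerically. The following is a minimal sketch, assuming each loss is written as a function of the margin $m = \hat{y} f(x)$ with labels $\hat{y} \in \{+1, -1\}$, which is the usual textbook form. The function names and sample margins are hypothetical and are not taken from the original figures.

```python
import numpy as np

def ideal_loss(m):
    # 0/1 loss: 1 when the prediction has the wrong sign (margin m < 0)
    return (m < 0).astype(float)

def square_loss(m):
    # wants the margin to be exactly 1, so very confident correct answers are penalized
    return (m - 1.0) ** 2

def sigmoid_square_loss(m):
    # squared distance between sigmoid(m) and the target 1
    return (1.0 / (1.0 + np.exp(-m)) - 1.0) ** 2

def sigmoid_cross_entropy_loss(m):
    # divided by ln 2 so that the loss equals 1 at m = 0
    return np.log1p(np.exp(-m)) / np.log(2.0)

def hinge_loss(m):
    # exactly zero once the margin reaches 1
    return np.maximum(0.0, 1.0 - m)

margins = np.array([-2.0, 0.0, 1.0, 3.0])  # hypothetical values of y * f(x)
for name, fn in [("ideal", ideal_loss), ("square", square_loss),
                 ("sigmoid+square", sigmoid_square_loss),
                 ("sigmoid+cross entropy", sigmoid_cross_entropy_loss),
                 ("hinge", hinge_loss)]:
    print(name, fn(margins))
```

In this sketch the hinge loss stops caring about an example once its margin is at least 1, whereas the cross-entropy loss stays positive for every finite margin and the square loss even penalizes large correct margins.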

1. Hinge loss

- Following the derivation in the figure below, the SVM loss function can be minimized with gradient descent. At the end, the SVM is rewritten in the form commonly found in textbooks.
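Training with gradient descent on the hinge loss can be sketched as follows. This is an illustration under stated assumptions, not the derivation from the figure: it assumes a linear model $f(x) = w \cdot x + b$, the objective "average hinge loss plus $\lambda \lVert w \rVert^2$", and a subgradient at the kink of the hinge. The function name `train_linear_svm`, the hyperparameters, and the toy data are all hypothetical.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Subgradient descent on mean hinge loss + lam * ||w||^2 (illustrative sketch)."""
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1.0  # only these points have nonzero hinge loss
        # d/dw of max(0, 1 - y * f(x)) is -y * x on active points, 0 elsewhere
        grad_w = -(y[active, None] * X[active]).sum(axis=0) / n + 2.0 * lam * w
        grad_b = -y[active].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical, linearly separable toy data with labels in {+1, -1}
X = np.array([[2.0, 2.0], [3.0, 1.0], [2.5, 3.0],
              [-2.0, -1.0], [-3.0, -2.0], [-1.5, -2.5]])
y = np.array([1.0, 1.0, 1.0, -1.0, -1.0, -1.0])

w, b = train_linear_svm(X, y)
predictions = np.sign(X @ w + b)
print(w, b, predictions)
```

Points whose margin is already at least 1 contribute nothing to the gradient, which is the same mechanism that later makes the dual coefficients sparse.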

2. Kernel method

- Dual representation: in an SVM, $\alpha_n^*$ may be sparse, meaning that $\alpha_n^* = 0$ for some $x^n$. The $x^n$ with $\alpha_n^* \neq 0$ are the support vectors. These points with nonzero $\alpha_n^*$ ultimately determine the whole model, which is also why outliers in the data have difficulty affecting an SVM.

- Kernel function: in the figure on the right, $K(x^n, x)$ is the kernel function, that is, the inner product of $x^n$ and $x$.

- Kernel trick: when the loss function can be written as in the blue line on the left, we only need to compute $K(x^{n'}, x^n)$ and never need the explicit value of the vector $x$ itself. This is the benefit of the kernel trick. It applies not only to SVM but also to linear regression and logistic regression.

- The derivation in the figure on the right shows that computing the inner product of $x$ and $z$ after the feature transformation is complicated. With the kernel trick this is unnecessary: we take the inner product of $x$ and $z$ directly and then square it.
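The dual representation and the "inner product, then square" shortcut can be checked numerically. The sketch below assumes a degree-2 feature transform $\phi(x)$ that contains every pairwise product $x_i x_j$, for which $\phi(x) \cdot \phi(z) = (x \cdot z)^2$. The names `phi`, `quadratic_kernel`, `predict_dual`, and the values of `alpha` are hypothetical and chosen only for illustration.

```python
import numpy as np

def phi(x):
    # explicit degree-2 feature transform: all pairwise products x_i * x_j
    return np.outer(x, x).ravel()

def quadratic_kernel(x, z):
    # same value as phi(x) . phi(z), without ever building phi
    return np.dot(x, z) ** 2

def predict_dual(alpha, X_train, x, kernel):
    # f(x) = sum_n alpha_n * K(x^n, x)
    return sum(a_n * kernel(x_n, x) for a_n, x_n in zip(alpha, X_train))

X_train = np.array([[1.0, 2.0], [0.0, -1.0], [3.0, 0.5]])
alpha = np.array([0.5, 0.0, -0.25])  # sparse: the second point is not a support vector
x_new = np.array([2.0, -1.0])

# Primal view: w is a linear combination of the transformed training points
w = sum(a_n * phi(x_n) for a_n, x_n in zip(alpha, X_train))
primal_value = np.dot(w, phi(x_new))

# Dual view: only kernel values are needed
dual_value = predict_dual(alpha, X_train, x_new, quadratic_kernel)
support_vector_indices = np.flatnonzero(alpha)

print(primal_value, dual_value, support_vector_indices)
```

Both views give the same prediction, and only the training points with nonzero `alpha` take part in it.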

2.1 Radial Basis Function Kernel

- The more similar $x$ and $z$ are, the larger the kernel value. If $x = z$, the value is 1; if $x$ and $z$ are completely different, the value approaches 0.

- The derivation in the figure below shows that the RBF kernel works in an infinite-dimensional feature space, so the model complexity is very high and it overfits easily.
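Both properties of the RBF kernel can be illustrated with a short sketch. It assumes the common form $K(x, z) = \exp(-\tfrac{1}{2}\lVert x - z \rVert^2)$; the width $\tfrac{1}{2}$ and the function names are assumptions, since the figure is not reproduced here. The second function uses the identity $\exp(-\tfrac{1}{2}\lVert x - z \rVert^2) = e^{-\lVert x \rVert^2/2}\, e^{-\lVert z \rVert^2/2} \sum_k (x \cdot z)^k / k!$, in which each term is a polynomial kernel of degree $k$.

```python
import numpy as np
from math import factorial

def rbf_kernel(x, z, gamma=0.5):
    # K(x, z) = exp(-gamma * ||x - z||^2); gamma = 0.5 is an assumed default
    diff = np.asarray(x, dtype=float) - np.asarray(z, dtype=float)
    return np.exp(-gamma * np.dot(diff, diff))

def rbf_kernel_series(x, z, terms):
    # Truncated Taylor expansion for gamma = 0.5: a sum of polynomial kernels
    x = np.asarray(x, dtype=float)
    z = np.asarray(z, dtype=float)
    scale = np.exp(-0.5 * np.dot(x, x)) * np.exp(-0.5 * np.dot(z, z))
    return scale * sum(np.dot(x, z) ** k / factorial(k) for k in range(terms))

x = np.array([1.0, 0.5])
z = np.array([0.5, 1.0])

same_point = rbf_kernel(x, x)                      # identical inputs
far_apart = rbf_kernel([0.0, 0.0], [10.0, 10.0])   # very different inputs
exact = rbf_kernel(x, z)
approx_3_terms = rbf_kernel_series(x, z, 3)
approx_20_terms = rbf_kernel_series(x, z, 20)

print(same_point, far_apart, exact, approx_3_terms, approx_20_terms)
```

The truncated series only matches the exact kernel as the number of polynomial terms grows without bound, which is one way to see why the implicit feature space is infinite-dimensional.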

2.2 Sigmoid Kernel

- In the figure on the left, using the sigmoid kernel amounts to a network with a single hidden layer, in which the weights of each neuron are one data point and the number of neurons equals the number of support vectors.

- The figure on the right explains how to design a kernel function $K(x, z)$ directly in place of $\Phi(x)$ and $\Phi(z)$, and how to use Mercer's theorem to check whether a kernel function is valid.
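The two points above can be sketched in code. The first part assumes the sigmoid kernel has the form $K(x, z) = \tanh(x \cdot z)$ and evaluates $f(x) = \sum_n \alpha_n \tanh(x^n \cdot x)$ as a one-hidden-layer network. The second part is a finite-sample check inspired by Mercer's theorem: the Gram matrix of a valid kernel on any set of points must be positive semidefinite. Passing on one sample is only a necessary condition, not a proof. All names and data here are hypothetical.

```python
import numpy as np

def sigmoid_kernel(x, z):
    # assumed form of the sigmoid kernel
    return np.tanh(np.dot(x, z))

def sigmoid_kernel_network(alpha, support_vectors, x):
    # hidden layer: one neuron per support vector, whose weights are that data point
    hidden = np.tanh(support_vectors @ x)
    # output layer: weights are the dual coefficients alpha
    return alpha @ hidden

def gram_matrix(kernel, X):
    return np.array([[kernel(a, b) for b in X] for a in X])

def passes_mercer_check(kernel, X, tol=1e-9):
    # a valid kernel gives a positive semidefinite Gram matrix on every sample
    eigenvalues = np.linalg.eigvalsh(gram_matrix(kernel, X))
    return bool(eigenvalues.min() >= -tol)

support_vectors = np.array([[1.0, 0.0], [0.5, -1.0], [-1.0, 2.0]])
alpha = np.array([0.7, -0.2, 0.4])
x_new = np.array([0.3, 0.8])

network_output = sigmoid_kernel_network(alpha, support_vectors, x_new)
dual_sum = sum(a * sigmoid_kernel(sv, x_new) for a, sv in zip(alpha, support_vectors))

points = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
linear_ok = passes_mercer_check(np.dot, points)
# Euclidean distance is not a valid kernel: its Gram matrix has a negative eigenvalue
distance_ok = passes_mercer_check(lambda a, b: np.linalg.norm(a - b), points)

print(network_output, dual_sum, linear_ok, distance_ok)
```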

3. SVM related methods

SVR (Support Vector Regression): when the difference between the predicted value and the actual value is within a certain range, loss = 0.

Ranking SVM: used when the thing to predict is an ordered list.

One-class SVM: the positive examples should all belong to the same class, while the negative examples are scattered elsewhere.

The figure below shows the similarities between SVM and deep learning.
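For SVR, the rule "loss = 0 within a certain range" is usually written as the $\varepsilon$-insensitive loss $\max(0, |y - f(x)| - \varepsilon)$. The sketch below assumes that form; the tolerance `epsilon=0.5` and the sample values are hypothetical.

```python
import numpy as np

def epsilon_insensitive_loss(y_true, y_pred, epsilon=0.5):
    # zero while the prediction is within epsilon of the target, linear beyond that
    return np.maximum(0.0, np.abs(y_true - y_pred) - epsilon)

y_true = np.array([1.0, 2.0, 3.0])
y_pred = np.array([1.2, 2.5, 4.0])

losses = epsilon_insensitive_loss(y_true, y_pred)
print(losses)
```

Only the third prediction misses its target by more than the tolerance, so it is the only one that contributes to the loss.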

边栏推荐

- opencv实现数据增强(图片+标签)平移,翻转,缩放,旋转

- PIXHAWK飞控使用RTK

- 从 dpdk-20.11 移植 intel E810 百 G 网卡 pmd 驱动到 dpdk-16.04 中

- ROS 服务通信理论模型

- Waldom Electronics宣布成立顾问委员会

- 4.1-支持向量机

- Tidb二进制集群搭建

- 1003 我要通过 (20 分)

- Redis source code: how to view the Redis source code, the order of viewing the Redis source code, the sequence of the source code from the external data structure of Redis to the internal data structu

- MindManager2022全新正式免费思维导图更新

猜你喜欢

Pinduoduo API interface

NFT 的价值从何而来

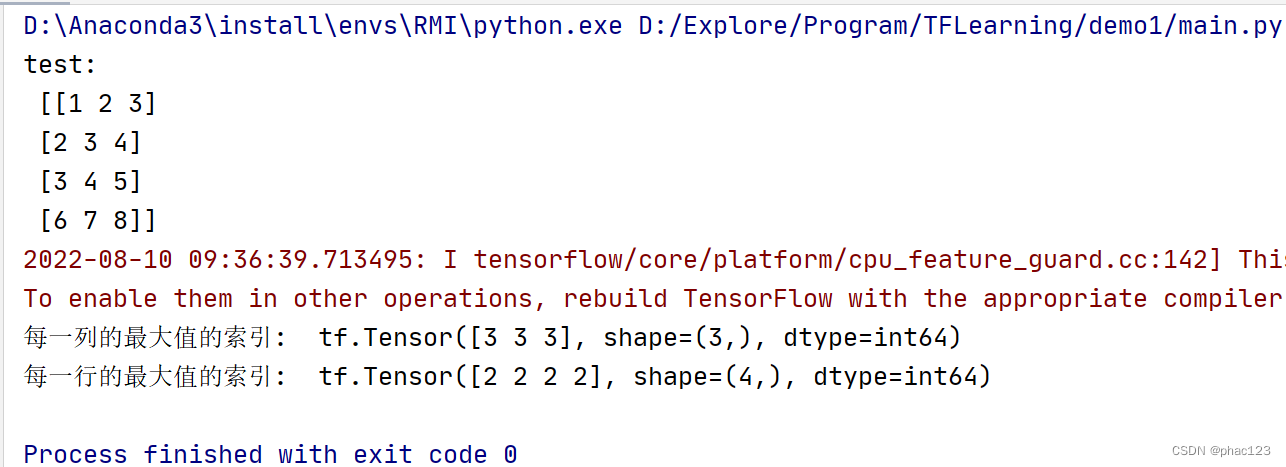

Tensorflow中使用tf.argmax返回张量沿指定维度最大值的索引

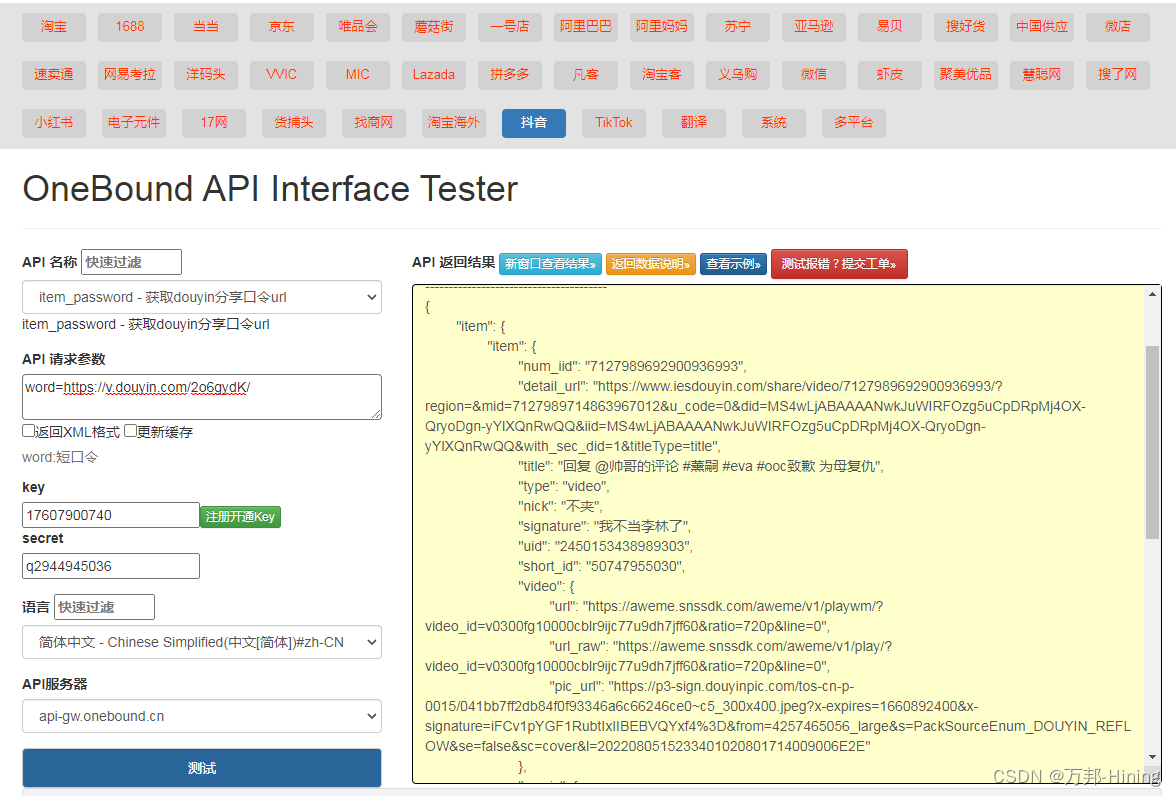

Douyin share password url API tool

Trill keyword search goods - API

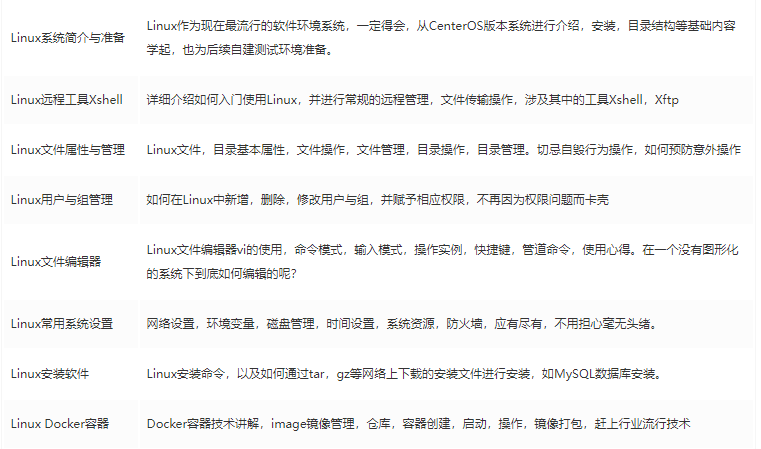

年薪40W测试工程师成长之路,你在哪个阶段?

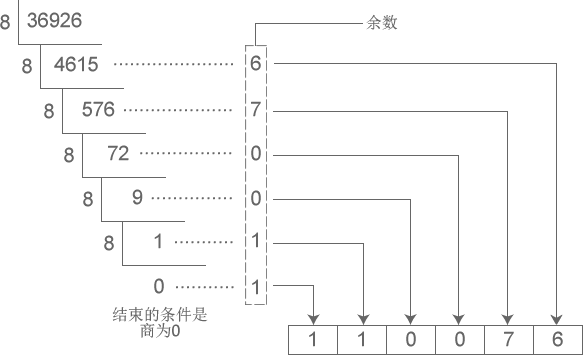

进制转换间的那点事

【latex异常和错误】Missing $ inserted.<inserted text>You can‘t use \spacefactor in math mode.输出文本要注意特殊字符的转义

如何选择专业、安全、高性能的远程控制软件

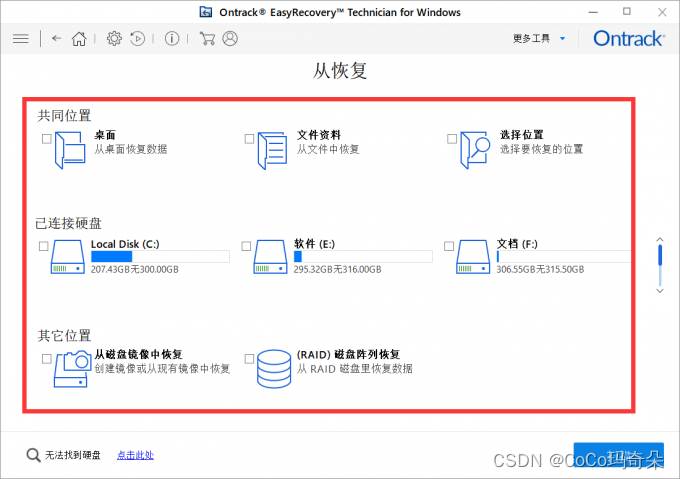

easyrecovery15数据恢复软件收费吗?功能强大吗?

随机推荐

1002 写出这个数 (20 分)

ROS 话题通信理论模型

线程交替输出(你能想出几种方法)

【Pytorch】nn.ReLU(inplace=True)

Redis源码:Redis源码怎么查看、Redis源码查看顺序、Redis外部数据结构到Redis内部数据结构查看源码顺序

golang fork 进程的三种方式

从苹果、SpaceX等高科技企业的产品发布会看企业产品战略和敏捷开发的关系

年薪40W测试工程师成长之路,你在哪个阶段?

Redis source code-String: Redis String command, Redis String storage principle, three encoding types of Redis string, Redis String SDS source code analysis, Redis String application scenarios

详述 MIMIC护理人员信息表(十五)

prometheus学习5altermanager

2022-08-09 Group 4 Self-cultivation class study notes (every day)

为什么我使用C#操作MySQL进行中文查询失败

CIKM 2022 AnalytiCup Competition: 联邦异质任务学习

机器学习总结(二)

流式结构化数据计算语言的进化与新选择

Unity3D learning route?

Service的两种状态形式

项目2-年收入判断

1036 跟奥巴马一起编程 (15 分)