Pyppeteer crawler

2022-04-23 18:00:00 【Round programmer】

import asyncio
from collections import namedtuple

import pyppeteer

from user_agents import UA  # assumed: a user-agent string defined in a local user_agents module

Response = namedtuple("rs", "title url html cookies headers history status")


async def get_html(url, timeout=30):
    """Load url in headless Chromium and return a Response; timeout is in seconds."""
    timeout_ms = int(timeout * 1000)
    browser = await pyppeteer.launch(headless=True, args=['--no-sandbox'])
    try:
        page = await browser.newPage()
        await page.setUserAgent(UA)
        res = await page.goto(url, options={'timeout': timeout_ms})
        # Wait for the target element with a timeout instead of polling
        # querySelector in an unbounded while loop.
        await page.waitForSelector('.share-box', options={'timeout': timeout_ms})
        # Scroll down by one viewport height.
        await page.evaluate('window.scrollBy(0, window.innerHeight)')
        data = await page.content()
        title = await page.title()
        resp_cookies = await page.cookies()
        return Response(
            title=title,
            url=url,
            html=data,
            cookies=resp_cookies,
            headers=res.headers,
            history=None,  # redirect history is not collected
            status=res.status,
        )
    finally:
        # Always close the browser so Chromium processes are not leaked.
        await browser.close()


async def main(url_list):
    # Fetch all pages concurrently; each call launches its own browser.
    return await asyncio.gather(*(get_html(url) for url in url_list))


if __name__ == '__main__':
    url_list = [
        "http://gxt.hunan.gov.cn//gxt/xxgk_71033/czxx/201005/t20100528_2069234.html",
        "http://gxt.hunan.gov.cn//gxt/xxgk_71033/czxx/201005/t20100528_2069221.html",
        "http://gxt.hunan.gov.cn//gxt/xxgk_71033/czxx/200811/t20081111_2069210.html",
    ]
    for res in asyncio.run(main(url_list)):
        print(res.title)
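
The script above hands every URL to `asyncio.gather` at once, so each call to `get_html` starts its own Chromium process at the same time. With three URLs that is manageable, but a longer list may exhaust memory. A minimal sketch of one way to cap the number of pages in flight, using `asyncio.Semaphore` from the standard library. The names `gather_limited` and `fake_fetch` are hypothetical and not part of the original post; `fake_fetch` only sleeps, standing in for `get_html` so the example runs without a browser.

```python
import asyncio


async def fake_fetch(url):
    # Hypothetical stand-in for get_html: sleeps briefly instead of driving a browser.
    await asyncio.sleep(0.01)
    return f"fetched {url}"


async def gather_limited(fetch, urls, limit=2):
    """Run fetch(url) for every URL with at most `limit` calls in flight."""
    semaphore = asyncio.Semaphore(limit)

    async def worker(url):
        # Each worker waits here until one of the `limit` slots is free.
        async with semaphore:
            return await fetch(url)

    # gather returns results in the same order as the input URLs.
    return await asyncio.gather(*(worker(url) for url in urls))


if __name__ == '__main__':
    demo_urls = ["http://example.com/a", "http://example.com/b", "http://example.com/c"]
    print(asyncio.run(gather_limited(fake_fetch, demo_urls)))
```

To apply this to the crawler, `get_html` would be passed in place of `fake_fetch`; a suitable value for `limit` depends on the machine and is left as a tuning choice.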

Copyright notice

This article was written by [Round programmer]. Please include a link to the original when reposting. Thanks.

https://yzsam.com/2022/04/202204230545315893.html

边栏推荐

- Gets the time range of the current week

- Use of list - addition, deletion, modification and query

- 读取excel,int 数字时间转时间

- Thirteen documents in software engineering

- 2022江西储能技术展会,中国电池展,动力电池展,燃料电池展

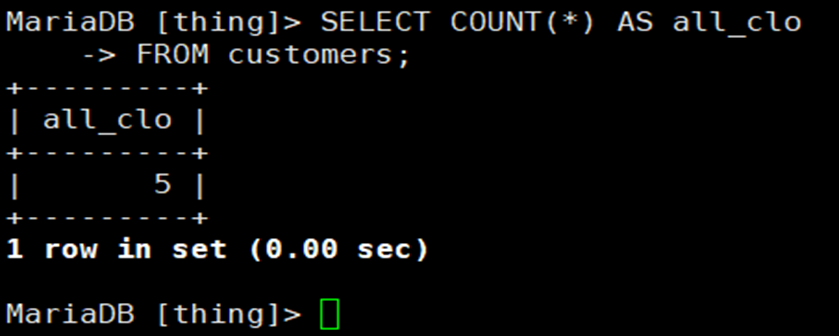

- MySQL_01_简单数据检索

- Romance in C language

- Cross domain settings of Chrome browser -- including new and old versions

- String function in MySQL

- Encapsulate a timestamp to date method on string prototype

猜你喜欢

![[UDS unified diagnostic service] (Supplement) v. detailed explanation of ECU bootloader development points (1)](/img/74/bb173ca53d62304908ca80d3e96939.png)

[UDS unified diagnostic service] (Supplement) v. detailed explanation of ECU bootloader development points (1)

2022制冷与空调设备运行操作判断题及答案

Open source key component multi_ Button use, including test engineering

Flask项目的部署详解

Anchor location - how to set the distance between the anchor and the top of the page. The anchor is located and offset from the top

Classification of cifar100 data set based on convolutional neural network

2022年上海市安全员C证操作证考试题库及模拟考试

MySQL_01_简单数据检索

2022 judgment questions and answers for operation of refrigeration and air conditioning equipment

Solving the problem of displaying too many unique values in ArcGIS partition statistics failed

随机推荐

journal

Summary of common server error codes

Build openstack platform

2022 Jiangxi energy storage technology exhibition, China Battery exhibition, power battery exhibition and fuel cell Exhibition

Click Cancel to return to the previous page and modify the parameter value of the previous page, let pages = getcurrentpages() let prevpage = pages [pages. Length - 2] / / the data of the previous pag

Random number generation of C #

C# 网络相关操作

[appium] write scripts by designing Keyword Driven files

Element calculation distance and event object

列錶的使用-增删改查

Add animation to the picture under V-for timing

Qtablewidget usage explanation

Batch export ArcGIS attribute table

Welcome to the markdown editor

_ FindText error

QTableWidget使用讲解

re正則錶達式

Selenium + phantom JS crack sliding verification 2

Classification of cifar100 data set based on convolutional neural network

Implementation of k8s redis one master multi slave dynamic capacity expansion