Paper interpretation: TransFG: A Transformer Architecture for Fine-grained Recognition

2022-08-11 06:32:00 【pontoon】

This paper applies the Transformer to fine-grained visual recognition.

Problem: the Transformer had not yet been applied to fine-grained recognition.

Contributions: 1. The standard Vision Transformer (ViT) splits the input image into patches that do not overlap. TransFG instead splits the image so that adjacent patches overlap (arguably this counts as a trick rather than a real contribution).

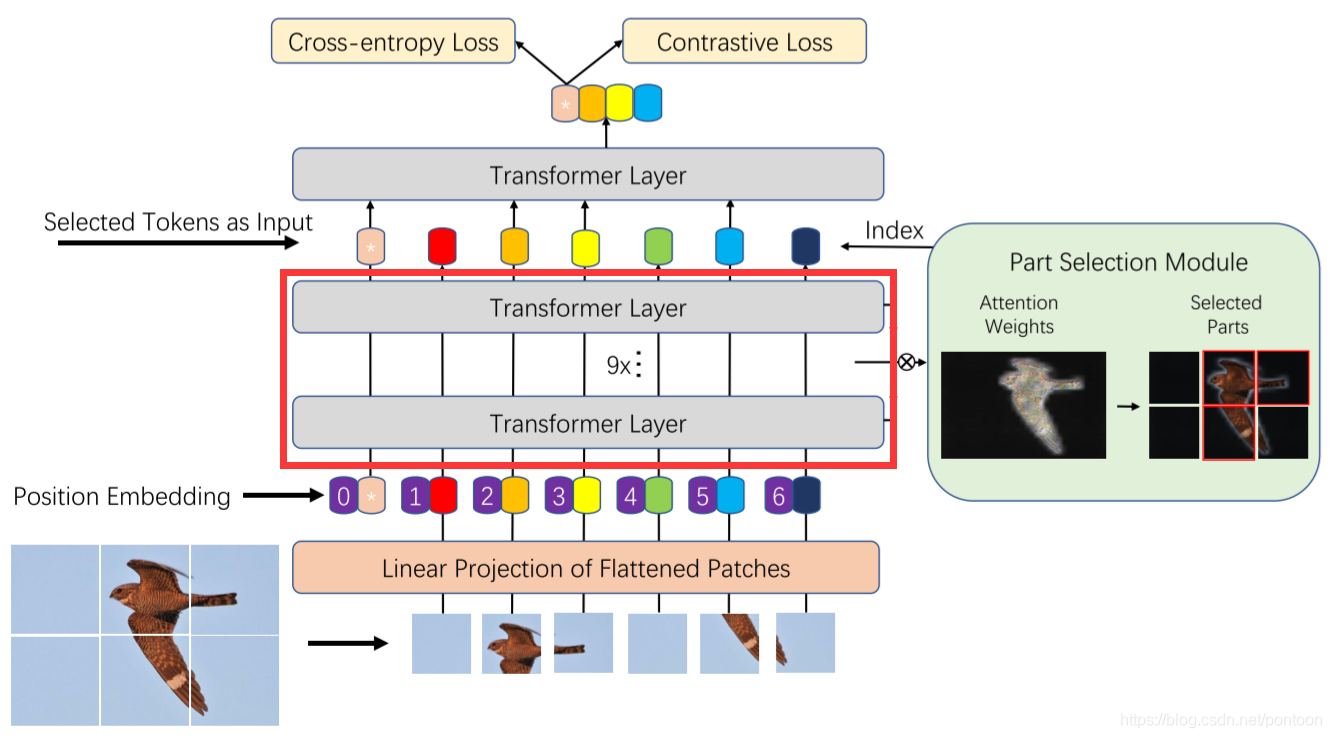

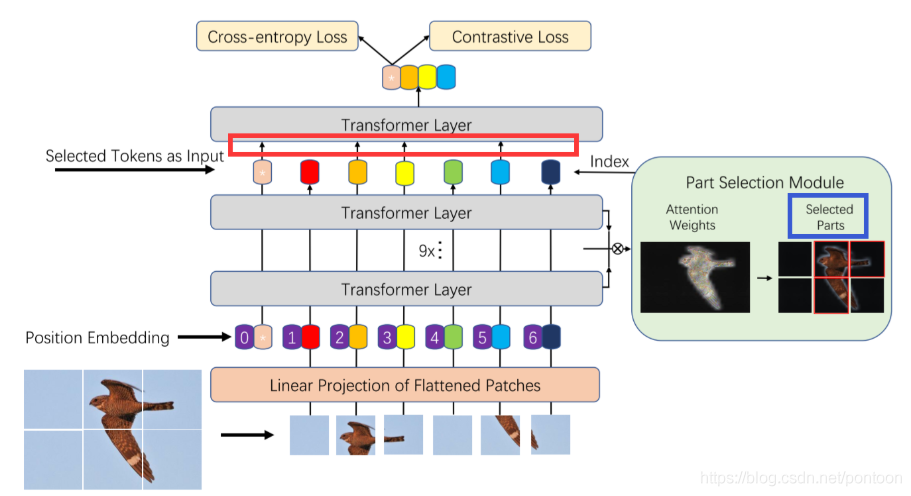
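The effect of the overlapping split on the sequence length can be sketched with the usual sliding-window count, N = floor((H - P + S) / S) * floor((W - P + S) / S), where P is the patch size and S the stride. The function names and the sizes used below (448x448 input, 16-pixel patches, stride 12) are illustrative assumptions, not values taken from this article.

```python
def num_patches(height, width, patch_size, stride):
    """Count the patches produced by a sliding-window split without padding."""
    n_h = (height - patch_size + stride) // stride
    n_w = (width - patch_size + stride) // stride
    return n_h * n_w


def patch_origins(length, patch_size, stride):
    """Start coordinates of the windows along one image axis."""
    return list(range(0, length - patch_size + 1, stride))


# Non-overlapping split: stride equals the patch size, as in the standard ViT.
print(num_patches(448, 448, 16, 16))  # 784

# Overlapping split: stride smaller than the patch size (assumed value).
print(num_patches(448, 448, 16, 12))  # 1369

# Neighbouring windows share patch_size - stride = 4 pixels along each axis.
print(patch_origins(448, 16, 12)[:4])  # [0, 12, 24, 36]
```

A smaller stride keeps local neighbourhoods intact across patch borders, at the price of a longer token sequence and therefore more computation.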

2. Part Selection Module

In plain terms, the input to the last layer differs from the standard Vision Transformer: the attention weights of all layers before the last one (shown in the red box) are multiplied together, and the tokens with the largest resulting weights are selected and concatenated to form the input to the L-th layer.

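First, the output of layer L-1, which the original ViT would feed into the last layer unchanged, is:

$$z_{L-1} = [z_{L-1}^{0};\ z_{L-1}^{1}, z_{L-1}^{2}, \dots, z_{L-1}^{N}]$$

Here N is the number of patches and index 0 is the classification token.

The attention weights of an earlier layer are:

$$a_l = [a_l^{0}, a_l^{1}, \dots, a_l^{K-1}]$$

The subscript l ranges over 1, 2, ..., L-1.

Assuming there are K self-attention heads, the weights in each head are:

$$a_l^{i} = [a_l^{i_0};\ a_l^{i_1}, a_l^{i_2}, \dots, a_l^{i_N}]$$

The superscript i indexes the K heads, i = 0, 1, ..., K-1.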

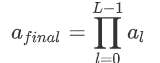

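The attention weights of all layers before the last one are multiplied together:

$$a_{final} = \prod_{l=1}^{L-1} a_l$$

Then, for each of the K attention heads, take the position of the largest weight in a_final. Call these positions A_1, A_2, ..., A_K; the tokens at these positions are used as the input to the last layer.

After this selection, the input to the last layer can be written as:

$$z_{local} = [z_{L-1}^{0};\ z_{L-1}^{A_1}, z_{L-1}^{A_2}, \dots, z_{L-1}^{A_K}]$$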

In the model architecture figure, the tokens marked with arrows in the red box are the selected ones, that is, the tokens with the largest weights after the attention weights are multiplied together. The blue box on the right shows the patches corresponding to the selected tokens.

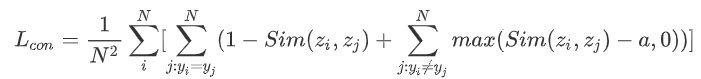
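The selection step can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: each layer's attention is given per head as an (N+1) x (N+1) row-normalized matrix, later layers are multiplied on the left, and the row of the classification token (index 0) is used to rank the patch tokens. The helper names `matmul`, `select_part_tokens` and `random_attention` are hypothetical; a real model would do this with a tensor library.

```python
import random


def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def select_part_tokens(attn_layers):
    """attn_layers[l][h] is the attention matrix of head h in layer l.

    Returns one token index per head: the patch token (index 1..N) that
    receives the largest accumulated weight from the classification token.
    """
    num_heads = len(attn_layers[0])
    selected = []
    for h in range(num_heads):
        joint = attn_layers[0][h]
        for layer in attn_layers[1:]:
            joint = matmul(layer[h], joint)  # accumulate across layers
        cls_to_patches = joint[0][1:]        # drop the CLS-to-CLS entry
        best = max(range(len(cls_to_patches)), key=cls_to_patches.__getitem__)
        selected.append(best + 1)            # shift back to token indexing
    return selected


def random_attention(num_tokens, rng):
    """Toy row-normalized attention matrix (stand-in for real weights)."""
    rows = []
    for _ in range(num_tokens):
        raw = [rng.random() for _ in range(num_tokens)]
        total = sum(raw)
        rows.append([v / total for v in raw])
    return rows


rng = random.Random(0)
num_layers, num_heads, num_tokens = 3, 2, 5  # toy sizes: 1 CLS + 4 patches
attn = [[random_attention(num_tokens, rng) for _ in range(num_heads)]
        for _ in range(num_layers)]

indices = select_part_tokens(attn)
hidden = [f"z{i}" for i in range(num_tokens)]         # stand-ins for z_{L-1}
z_local = [hidden[0]] + [hidden[i] for i in indices]  # CLS + selected tokens
print(indices, z_local)
```

The last layer then sees only K + 1 tokens instead of N + 1, so it is pushed to classify from the most attended regions.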

3. Contrastive loss

The authors argue that in fine-grained recognition the features of different categories are very similar, so cross-entropy loss alone is not enough to learn discriminative features. A contrastive loss is therefore added alongside the cross-entropy loss. It is based on cosine similarity, which measures how similar two vectors are: the more similar the vectors, the larger the cosine similarity.

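The purpose of this loss is to minimize the similarity between classification tokens of different categories and to maximize the similarity between classification tokens of the same category. Over a batch of size B, the contrastive loss is:

$$L_{con} = \frac{1}{B^2} \sum_{i}^{B} \left[ \sum_{j:\, y_i = y_j}^{B} \left(1 - Sim(z_i, z_j)\right) + \sum_{j:\, y_i \neq y_j}^{B} \max\left(Sim(z_i, z_j) - \alpha,\ 0\right) \right]$$

where Sim(z_i, z_j) is the cosine similarity between the classification tokens z_i and z_j, y_i is the label of sample i, and α is a manually set constant.

So the overall loss function is:

$$L = L_{cross}(y, y') + L_{con}(z)$$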
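A small worked example can make the contrastive term concrete. The sketch below assumes the loss takes this form: every same-label pair contributes 1 - Sim, every different-label pair contributes max(Sim - α, 0), and the sum is averaged over all B² pairs in the batch. The function names and the default α = 0.4 are illustrative assumptions, and the two-dimensional vectors stand in for real classification tokens.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def contrastive_loss(features, labels, alpha=0.4):
    """Average pairwise contrastive term over a batch (alpha is assumed)."""
    batch = len(features)
    total = 0.0
    for i in range(batch):
        for j in range(batch):
            sim = cosine_similarity(features[i], features[j])
            if labels[i] == labels[j]:
                total += 1.0 - sim             # pull same-class tokens together
            else:
                total += max(sim - alpha, 0.0)  # push apart only above the margin
    return total / (batch * batch)


# Same-class tokens aligned, other class orthogonal: nothing is penalized.
print(contrastive_loss([[1, 0], [1, 0], [0, 1]], [0, 0, 1]))  # 0.0

# Same-class tokens orthogonal, different-class tokens identical.
print(round(contrastive_loss([[1, 0], [0, 1], [1, 0]], [0, 0, 1]), 4))  # 0.3556
```

Because of the margin α, different-class pairs whose similarity is already below α contribute nothing, so easy negatives do not dominate the loss.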

Experiments:

TransFG is compared with CNN-based methods and ViT on several fine-grained classification datasets and achieves state-of-the-art results.

边栏推荐

猜你喜欢

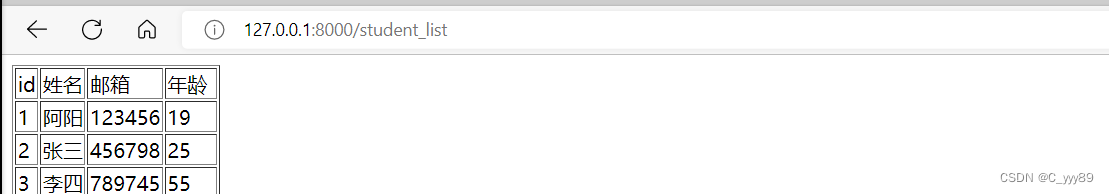

Maykel Studio - Django Web Application Framework + MySQL Database Third Training

The latest safety helmet wearing recognition system in 2022

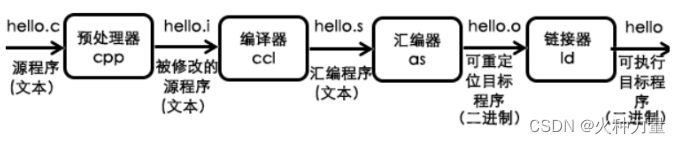

C语言的编译

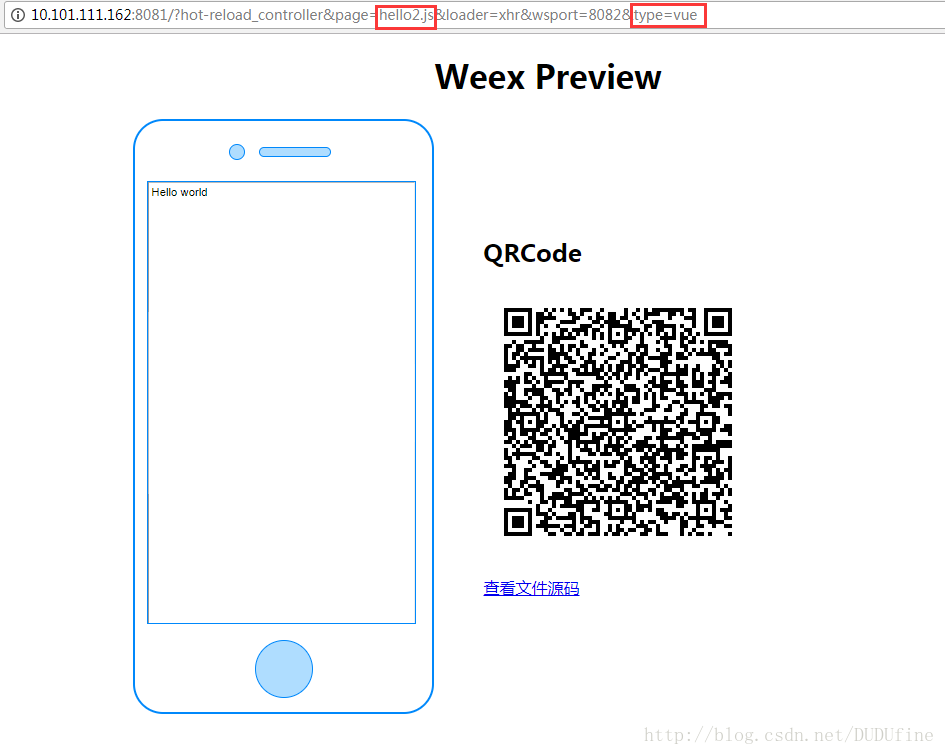

weex入门踩坑

华为云IOT平台设备获取api调用笔记

【调试记录1】提高MC3172浮点运算能力,IQmath库的获取与导入使用教程

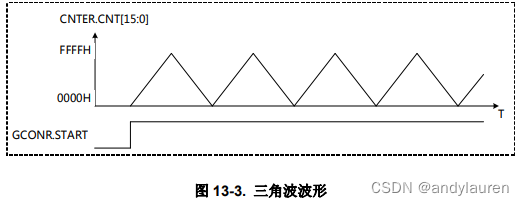

CMT2380F32模块开发10-高级定时器例程

Safety helmet identification system - escort for safe production

关于openlayer中swipe位置偏移的问题

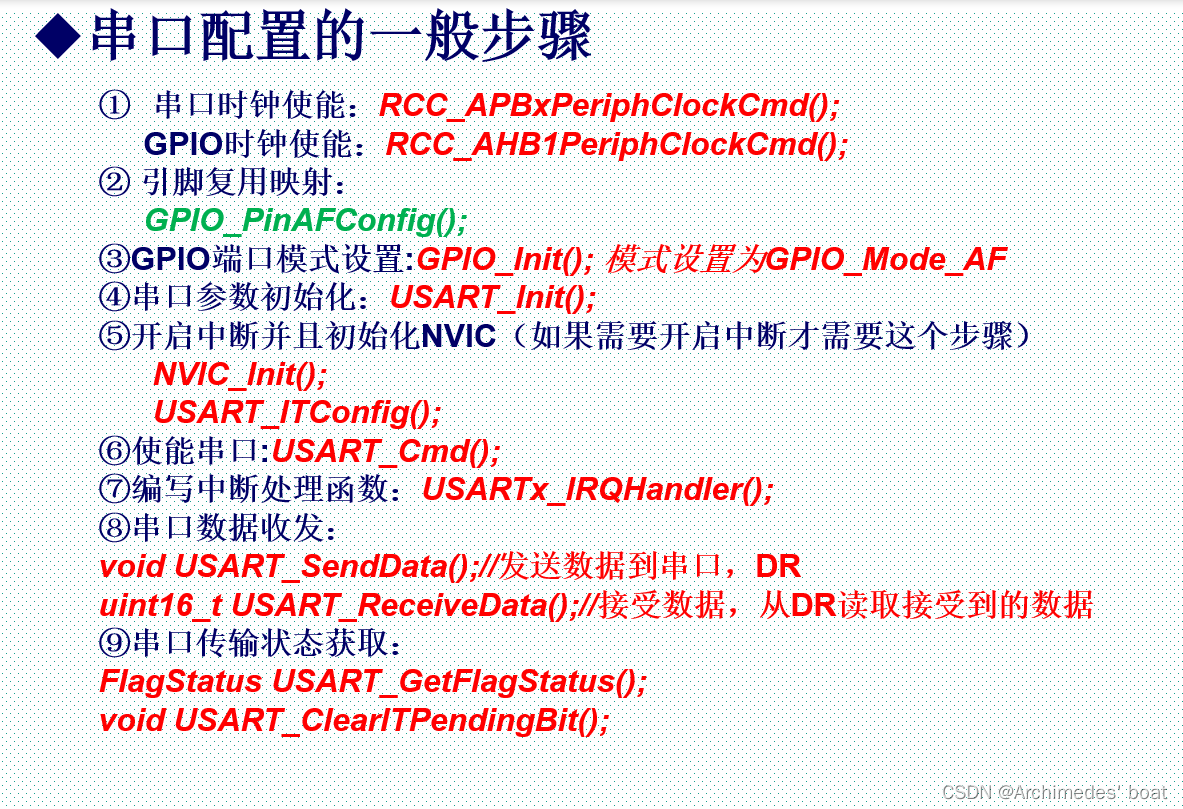

STM32-串口常用寄存器和库函数及配置串口步骤

随机推荐

STM32学习笔记(白话文理解版)—外部IO中断实验

关于openlayer中swipe位置偏移的问题

JVM调优整理

Mei cole studios - deep learning second BP neural network

NUC980-开发环境搭建

防盗链——防止其他页面通过url直接访问本站资源

Vscode远程连接服务器终端zsh+Oh-my-zsh + Powerlevel10 + Autosuggestions + Autojump + Syntax-highlighting

STM32学习笔记(白话文理解版)—按键控制

pip安装报错:is not a supported wheel on this platform

自定义形状seekbar学习--方向盘view

vscode插件开发——代码提示、代码补全、代码分析

NUC980-镜像烧录

Safety helmet recognition - construction safety "regulator"

关于mmdetection框架实用小工具说明

STM32-中断优先级管理NVIC

OpenMLDB Meetup No.2 会议纪要

论文解读:GAN与检测网络多任务/SOD-MTGAN: Small Object Detection via Multi-Task Generative Adversarial Network

产品经理与演员有着天然的相似

yolov3+centerloss+replay buffer实现单人物跟踪

红外线一认识