
Maykle Studio Deep Learning Training, Lecture 2: BP Neural Networks

2022-08-11 06:18:00 【C_yyy89】


Preface

This post documents the second lecture of the deep learning training at Maykle Studio: study notes on BP neural networks. Thanks to the lecturer for the explanation!

1. Perceptron

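The perceptron is the algorithm from which neural networks (deep learning) originated. Learning how a perceptron is built is therefore an important step toward understanding the ideas behind neural networks and deep learning.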

1.1. What is a perceptron?

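A perceptron receives multiple input signals and produces a single output signal. A "signal" here can be pictured as something that flows, like an electric current or a river. Just as current flowing through a wire carries electrons forward, the perceptron's signals form a flow that carries information forward.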
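A minimal sketch of this idea in Python: each input is multiplied by a weight, a bias is added, and the perceptron outputs 1 if the result is positive and 0 otherwise. The weights (0.5, 0.5) and bias -0.7 are hypothetical, hand-picked values that happen to make the perceptron behave like an AND gate.

```python
def perceptron(inputs, weights, bias):
    """Return 1 if the weighted sum of the inputs plus the bias is positive, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


# Hypothetical parameters that make the perceptron act as an AND gate.
AND_WEIGHTS = (0.5, 0.5)
AND_BIAS = -0.7

for x1 in (0, 1):
    for x2 in (0, 1):
        # Prints outputs 0, 0, 0, 1 for inputs (0,0), (0,1), (1,0), (1,1).
        print(x1, x2, "->", perceptron((x1, x2), AND_WEIGHTS, AND_BIAS))
```

Changing only the weights and bias (not the code) yields other logic gates, which is why learning in these models amounts to adjusting parameters.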

2. What is a BP neural network?

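The BP (backpropagation) algorithm is one of the most important algorithms in neural networks and deep learning. A BP neural network is a multilayer feedforward neural network trained with the error backpropagation algorithm, and it is one of the most widely used neural network models. Understanding BP gives a clearer picture of what training a neural network actually does; it is one of the fundamentals worth mastering.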

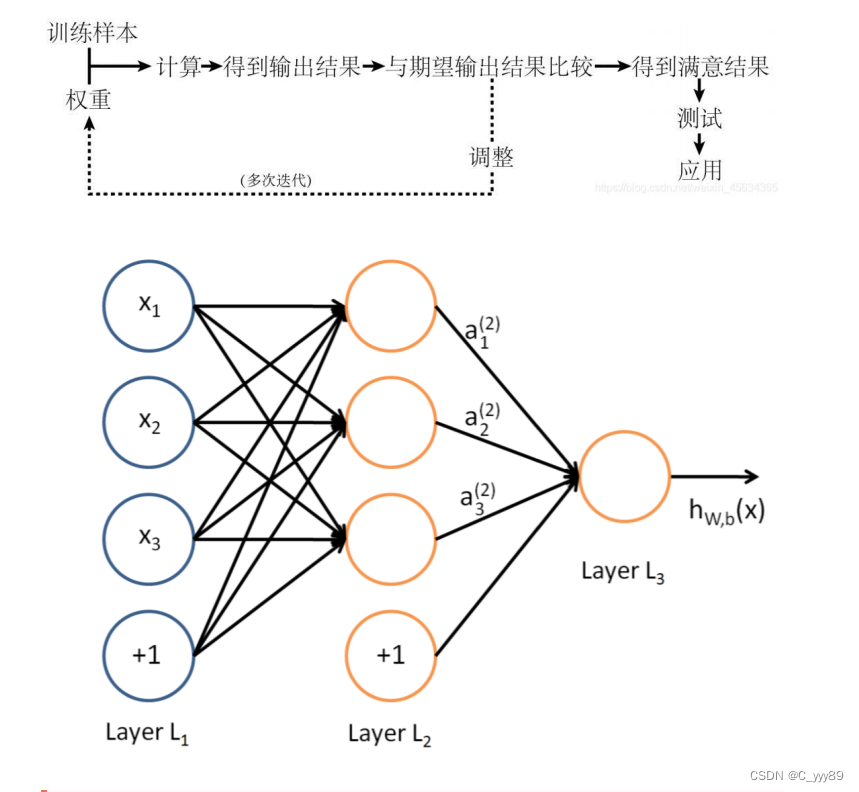

The core idea of BP: learning consists of two processes, the forward propagation of signals and the backward propagation of errors.

2.1. Forward propagation

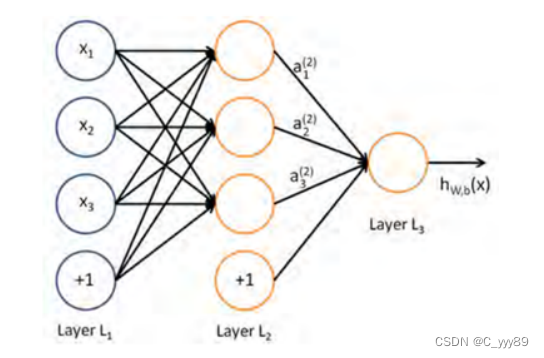

Neurons in the input layer receive information from the outside world and pass it to the neurons in the hidden (intermediate) layer. The hidden-layer neurons process and transform this information as needed; in practice, the network can have one or more hidden layers. The last hidden layer then passes the information on to the output layer. This is the forward propagation process of a BP neural network.
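The forward pass can be sketched in plain Python (no external libraries). This is a minimal sketch assuming a single hidden layer and sigmoid activations in every layer; the 2-2-2 layer sizes, weights, and biases below are hypothetical values chosen only for illustration.

```python
import math


def sigmoid(z):
    """Logistic activation: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Fully connected layer; weights[j][i] links input i to neuron j."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Input layer -> hidden layer -> output layer."""
    hidden = layer_forward(x, w_hidden, b_hidden)  # hidden layer transforms the inputs
    output = layer_forward(hidden, w_out, b_out)   # last hidden layer feeds the output layer
    return hidden, output


# Hypothetical 2-2-2 network with hand-picked parameters (illustration only).
w_hidden = [[0.15, 0.20], [0.25, 0.30]]
b_hidden = [0.35, 0.35]
w_out = [[0.40, 0.45], [0.50, 0.55]]
b_out = [0.60, 0.60]

hidden, output = forward([0.05, 0.10], w_hidden, b_hidden, w_out, b_out)
print("hidden:", hidden)
print("output:", output)
```

Adding more hidden layers would simply mean calling `layer_forward` once more per layer before the output layer.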

2.2. Backpropagation

When the error between the actual output and the desired output exceeds the acceptable level, the network enters the error backpropagation phase. Starting from the output layer, the error is used to correct the weights of each layer by gradient descent, and it is propagated backward through the hidden layers toward the input layer. By repeatedly alternating forward propagation of information with backward propagation of errors, the weights of every layer are adjusted step by step; this is how a neural network learns (is trained). Training ends when the output error falls to an acceptable level or a preset number of iterations is reached, at which point the BP neural network has completed learning.
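Putting the two phases together, here is a minimal sketch of BP training on a single sample, assuming one hidden layer, sigmoid activations, squared error E = ½Σ(t − o)², and plain gradient descent. The function name `train`, the tolerance 1e-4, the 20,000-iteration budget, and all starting values are hypothetical choices for illustration; the learning rate 0.5 matches the value used later in these notes.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Return (hidden, output) activations; weights[j][i] links input i to neuron j."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    output = [sigmoid(sum(w * h for w, h in zip(row, hidden)) + b)
              for row, b in zip(w_out, b_out)]
    return hidden, output


def squared_error(target, output):
    """E = 1/2 * sum over outputs of (t - o)^2."""
    return 0.5 * sum((t - o) ** 2 for t, o in zip(target, output))


def train(x, target, w_hidden, b_hidden, w_out, b_out,
          lr=0.5, tolerance=1e-4, max_iters=20_000):
    """Alternate forward and backward passes (weights are updated in place).

    Stops when the error drops to `tolerance` or after `max_iters` updates.
    Returns (number_of_updates, final_error).
    """
    for it in range(max_iters):
        hidden, output = forward(x, w_hidden, b_hidden, w_out, b_out)
        error = squared_error(target, output)
        if error <= tolerance:  # error is within the acceptable level: stop
            return it, error
        # Backward pass starts at the output layer: dE/dnet_k = (o - t) * o * (1 - o)
        delta_out = [(o - t) * o * (1 - o) for o, t in zip(output, target)]
        # Propagate the error to the hidden layer through the not-yet-updated w_out.
        delta_hidden = [
            sum(d * w_out[k][j] for k, d in enumerate(delta_out)) * h * (1 - h)
            for j, h in enumerate(hidden)
        ]
        # Gradient descent: each weight moves by -lr * delta * (its input signal).
        for k, d in enumerate(delta_out):
            for j, h in enumerate(hidden):
                w_out[k][j] -= lr * d * h
            b_out[k] -= lr * d
        for j, d in enumerate(delta_hidden):
            for i, xi in enumerate(x):
                w_hidden[j][i] -= lr * d * xi
            b_hidden[j] -= lr * d
    # Iteration budget used up: report the error of the final weights.
    _, output = forward(x, w_hidden, b_hidden, w_out, b_out)
    return max_iters, squared_error(target, output)


# Hypothetical single training sample and starting parameters.
w_hidden = [[0.15, 0.20], [0.25, 0.30]]
b_hidden = [0.35, 0.35]
w_out = [[0.40, 0.45], [0.50, 0.55]]
b_out = [0.60, 0.60]
updates, final_error = train([0.05, 0.10], [0.01, 0.99],
                             w_hidden, b_hidden, w_out, b_out)
print(updates, final_error)
```

The loop exits on whichever condition comes first, mirroring the two end conditions described above: an acceptable error or a preset number of iterations.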

2.3. Notes on the hidden layer

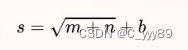

If the hidden layer has too few neurons, training may slow down or fail to converge. If it has too many, the model's prediction accuracy may improve, but the network topology becomes too large, convergence slows down, and the network generalizes less well.

How to choose the number of hidden-layer neurons:

If a BP neural network has m nodes in the input layer and n nodes in the output layer, the number of hidden-layer nodes s can be estimated with the following formula, where b is generally an integer from 1 to 9. The MATLAB toolbox can be used to help determine the value of b.

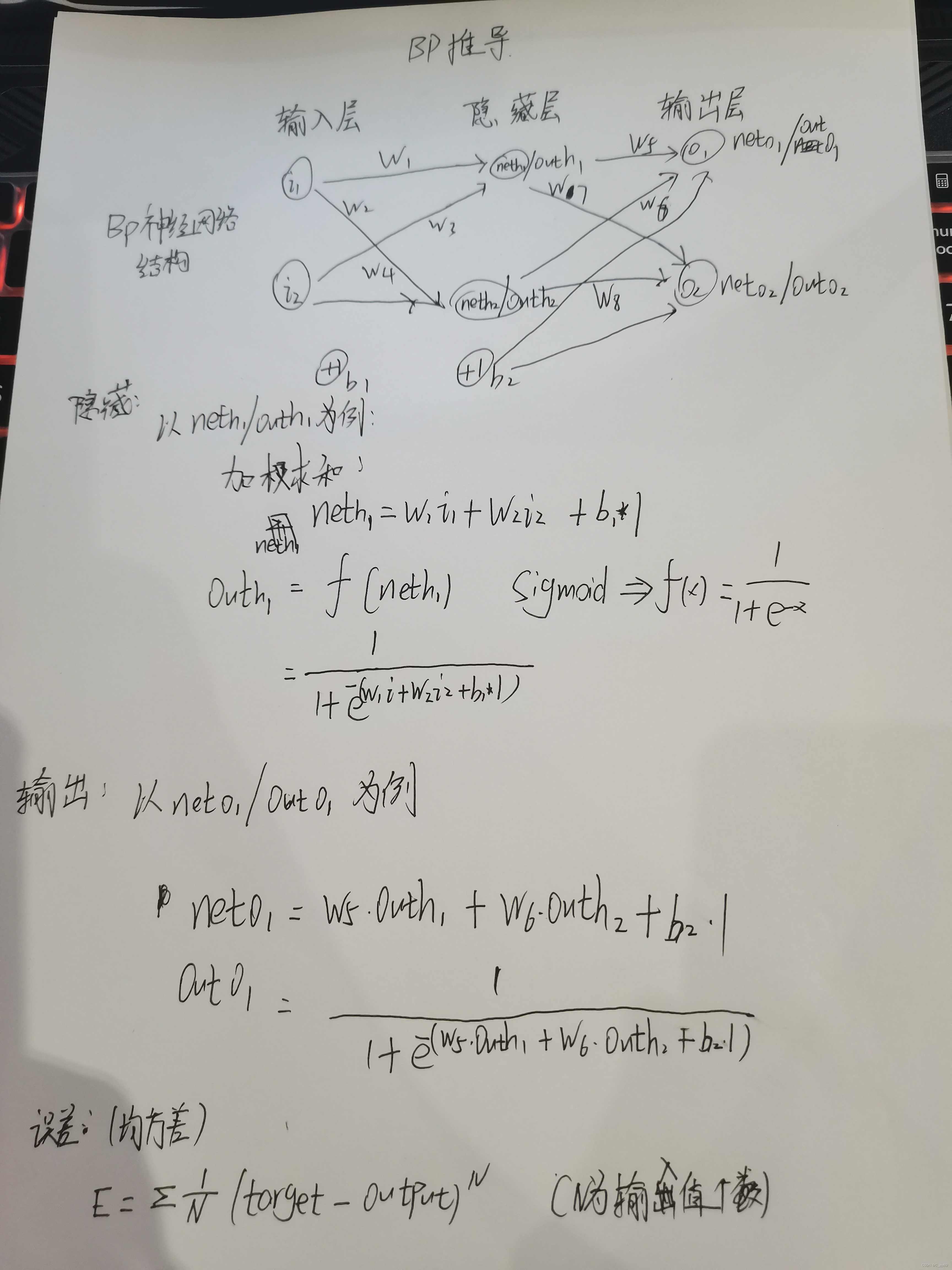
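The formula itself appears in the figure. Assuming it is the commonly cited empirical rule $s = \sqrt{m+n} + b$ (an assumption on our part), the candidate hidden-layer sizes for b = 1 to 9 can be listed with a short helper; the name `hidden_node_candidates` is hypothetical.

```python
import math


def hidden_node_candidates(m, n, b_values=range(1, 10)):
    """Candidate hidden-layer sizes from the assumed rule s = sqrt(m + n) + b.

    m: input-layer nodes, n: output-layer nodes, b: integer offset (1-9 here).
    Each candidate is rounded to the nearest whole number of neurons.
    """
    base = math.sqrt(m + n)
    return [round(base + b) for b in b_values]


print(hidden_node_candidates(4, 3))  # [4, 5, 6, 7, 8, 9, 10, 11, 12]
```

The rule only narrows the search: one reasonable approach is to train a network for each candidate size, compare the errors, and keep the smallest network that performs well.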

3. Derivation of the BP neural network

3.1. Forward propagation computation
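As a sketch of the forward computation, assume a network with one hidden layer and sigmoid activations, using notation introduced here rather than taken from the lecture: inputs $x_i$, hidden outputs $h_j$, network outputs $o_k$, input-to-hidden weights $w_{ij}$, hidden-to-output weights $v_{jk}$, biases $b_j$ and $c_k$, and expected outputs $t_k$.

```latex
\begin{aligned}
net_{h_j} &= \sum_i w_{ij}\, x_i + b_j, & h_j &= \sigma(net_{h_j}) \\
net_{o_k} &= \sum_j v_{jk}\, h_j + c_k, & o_k &= \sigma(net_{o_k}) \\
\sigma(z) &= \frac{1}{1 + e^{-z}}, & E &= \frac{1}{2} \sum_k (t_k - o_k)^2
\end{aligned}
```

The error $E$ measures how far the actual outputs are from the expected ones; it is the quantity that backpropagation tries to reduce.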

3.2. Backpropagation computation
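Under the same assumptions (one hidden layer with outputs $h_j$, network outputs $o_k = \sigma(\sum_j v_{jk} h_j + c_k)$, hidden outputs $h_j = \sigma(\sum_i w_{ij} x_i + b_j)$, sigmoid $\sigma$ with $\sigma'(z) = \sigma(z)(1-\sigma(z))$, and error $E = \tfrac12 \sum_k (t_k - o_k)^2$), the chain rule gives the gradients and gradient-descent updates below, with learning rate $\eta$. This is a sketch in our own notation, not the lecture's original derivation.

```latex
\begin{aligned}
\delta_k &= \frac{\partial E}{\partial o_k}\,\frac{\partial o_k}{\partial net_{o_k}}
          = (o_k - t_k)\, o_k (1 - o_k),
 & \frac{\partial E}{\partial v_{jk}} &= \delta_k\, h_j \\
\delta_j &= \Big(\sum_k \delta_k\, v_{jk}\Big)\, h_j (1 - h_j),
 & \frac{\partial E}{\partial w_{ij}} &= \delta_j\, x_i \\
v_{jk} &\leftarrow v_{jk} - \eta\, \delta_k\, h_j,
 & c_k &\leftarrow c_k - \eta\, \delta_k \\
w_{ij} &\leftarrow w_{ij} - \eta\, \delta_j\, x_i,
 & b_j &\leftarrow b_j - \eta\, \delta_j
\end{aligned}
```

Note that $\delta_j$ must be computed with the old values of $v_{jk}$, before the output-layer weights are updated.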

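η is the learning rate, set here to 0.5; it controls the size of each update step. A suitable learning rate lets the objective function converge to a local minimum in a reasonable amount of time. If the learning rate is too small, convergence is very slow; if it is too large, the result oscillates around the optimum and has difficulty converging. It is generally chosen between 0.01 and 0.8.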
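To see the effect of the learning rate in isolation, here is a toy sketch (not a BP network) that runs gradient descent on f(w) = w², whose minimum is at w = 0 and whose gradient is 2w. The three learning rates are hypothetical values chosen to show slow convergence, fast convergence, and oscillation around the optimum.

```python
def gradient_descent(lr, w0=1.0, steps=10):
    """Minimise f(w) = w**2 (gradient 2*w) from w0; return every iterate."""
    w = w0
    path = [w]
    for _ in range(steps):
        w -= lr * 2 * w  # update: w <- w - lr * f'(w)
        path.append(w)
    return path


for lr in (0.01, 0.4, 0.95):
    print(f"lr={lr}:", [round(w, 3) for w in gradient_descent(lr)])
```

With lr = 0.01 the iterate shrinks by only 2% per step; with lr = 0.4 it is essentially 0 after a few steps; with lr = 0.95 it flips sign every step and creeps toward 0 while oscillating. On this particular function, any learning rate above 1 diverges.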

Repeat the forward and backward computations above, continually updating the weights and computing new outputs; the output moves closer and closer to the expected value.

3.3. When does training stop?

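1. Set a maximum number of iterations, for example stop training after 100 passes over the dataset;

2. Compute the network's prediction accuracy on the training set and stop training once it reaches a threshold.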
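The two stopping rules can be combined in a small training driver. This is a hedged sketch: `train_one_epoch` and `evaluate_accuracy` are hypothetical callables standing in for one pass of BP updates over the dataset and for measuring training-set accuracy; the 0.95 threshold is an assumed value, while the 100-epoch cap follows the example above.

```python
def train_until_done(train_one_epoch, evaluate_accuracy,
                     max_epochs=100, target_accuracy=0.95):
    """Run epochs until the accuracy threshold or the epoch cap is reached.

    Returns (epochs_run, last_accuracy, reason).
    """
    accuracy = evaluate_accuracy()
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()
        accuracy = evaluate_accuracy()
        if accuracy >= target_accuracy:  # rule 2: training-set accuracy threshold
            return epoch, accuracy, "accuracy threshold"
    return max_epochs, accuracy, "max epochs"  # rule 1: iteration cap reached


# Toy stand-ins (hypothetical): accuracy rises by 0.1 per epoch, capped at 1.0.
state = {"epochs": 0}


def fake_epoch():
    state["epochs"] += 1


def fake_accuracy():
    return min(1.0, 0.5 + 0.1 * state["epochs"])


print(train_until_done(fake_epoch, fake_accuracy))  # (5, 1.0, 'accuracy threshold')
```

Passing the training and evaluation steps in as functions keeps the stopping logic separate from the network itself, so the same driver works for any model.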

Summary

That's all for this lesson. It mainly covered the perceptron and the derivation of the BP neural network.

边栏推荐

猜你喜欢

随机推荐

GBase 8s共享内存中的常驻内存段

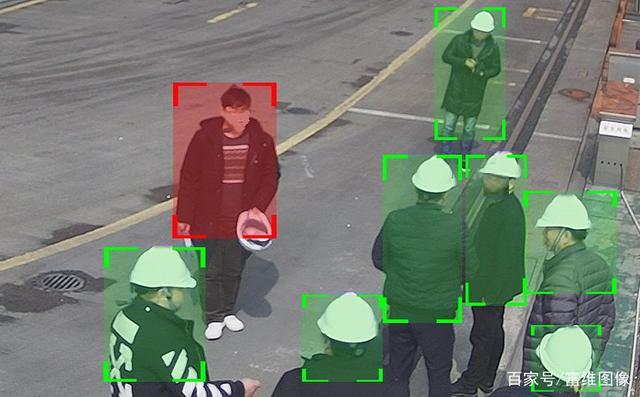

智慧工地 安全帽识别系统

梅科尔工作室-DjangoWeb 应用框架+MySQL数据库第六次培训

Severe Weather 3D Object Detection Dataset Collection

用正则验证文件名是否合法

The kernel communicates with user space through character devices

【sqlyog】【mysql】csv导入问题

【OAuth2】授权机制

梅科尔工作室-HarmonyOS应用开发的第二次培训

秦始皇到底叫嬴政还是赵政?

Mei cole studios - sixth DjangoWeb application framework + MySQL database training

安全帽识别算法

Androd 基本布局(其一)

>>技术应用:*aaS服务定义

微信小程序canvas画图,保存页面为海报

微信小程序部分功能细节

梅科尔工作室-DjangoWeb 应用框架+MySQL数据库第三次培训

OSI TCP/IP学习笔记

AI-based intelligent image recognition: 4 different industry applications

GBase 8a技术特性-集群架构